Amir Daraie

@neuro_amir

Neuroengineer; Ph.D. student @JHUBME @LabSarma 🧠🇺🇸 photography 📸 prev. @DondersInst, @DreslerLab 🧪🇳🇱

http://adaraie.com 25-11-2019 18:40:39

1.1K Tweets

389 Followers

1.1K Following

Deep Brain Stimulation (DBS) for Depression: Can you name the relevant symptom markers, neuroimaging, electrophysiology, symptoms, sensors, network-based targeting and evoked potentials? If not, then you should read this comprehensive new review in Molecular Psychiatry by

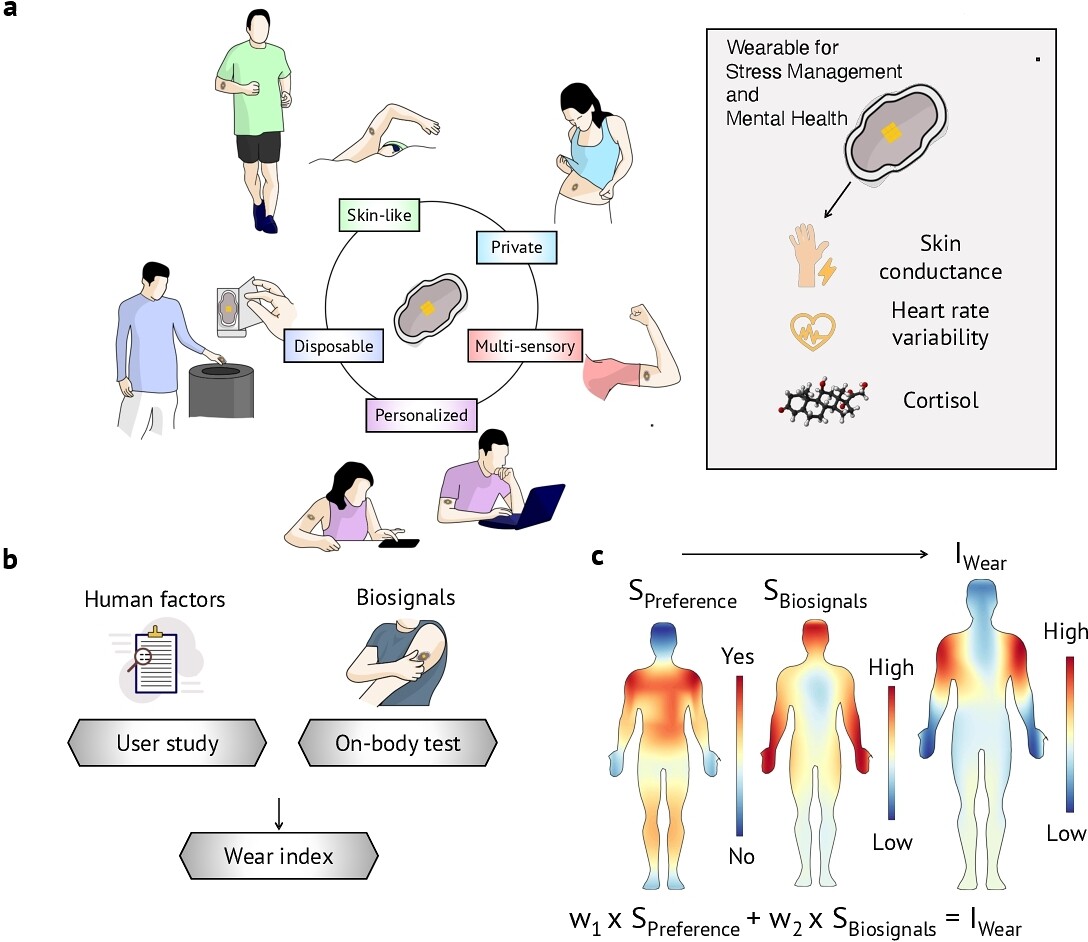

The first paper from my group. Yay! Published today in Nature Electronics, we review the literature and present a step-by-step guide for designing ingestible electronics. Link: rdcu.be/dyfg6 USC Viterbi School USC

Temporarily escaped the winter storm in Boston to give a talk on "Goal-Driven Models of Physical Understanding" at Stanford University's NeuroAI course (CS375). Slides here: anayebi.github.io/files/slides/A… Always good to be back in my intellectual home! ☀️

My final PhD chapter on improving seizure detection with hazyresearch and Daniel Rubin QILab was just published in npj Digital Medicine. TL;DR We found that scaling two dimensions of model supervision, (1) coverage of training data and (2) granularity of class labels, has a large impact on

Looking forward to participating in this NIH workshop about the human data & causality gap in two weeks. Fantastic lineup w/ Dani S. Bassett Dora Hermes Lucina Uddin Nanthia Suthana Michael Fox Shan Siddiqi @shansiddiqi.bsky.social Sameer Sheth Earl K. Miller & more; register free: event.roseliassociates.com/AHNNSR-Worksho…

🚨New #preprints alert!🚨 Excited to share our work on #DeepBrainStimulation of the motor thalamus to enhance speech & swallowing. This continues our research led by Lilly Tang & me, with guidance from Elvira Pirondini & Jorge Gonzalez-Martinez. 🧠1/8 medrxiv.org/content/10.110…

A hardwired high-speed sweep mechanism by which #gridcell populations scan the environment during navigation: Now out in Nature! Thanks to Abraham Zelalem Vollan and Rich Gardner for this heroic work! European Research Council (ERC) May-Britt Moser Kavli Neuroscience NTNU Norges forskningsråd nature.com/articles/s4158…

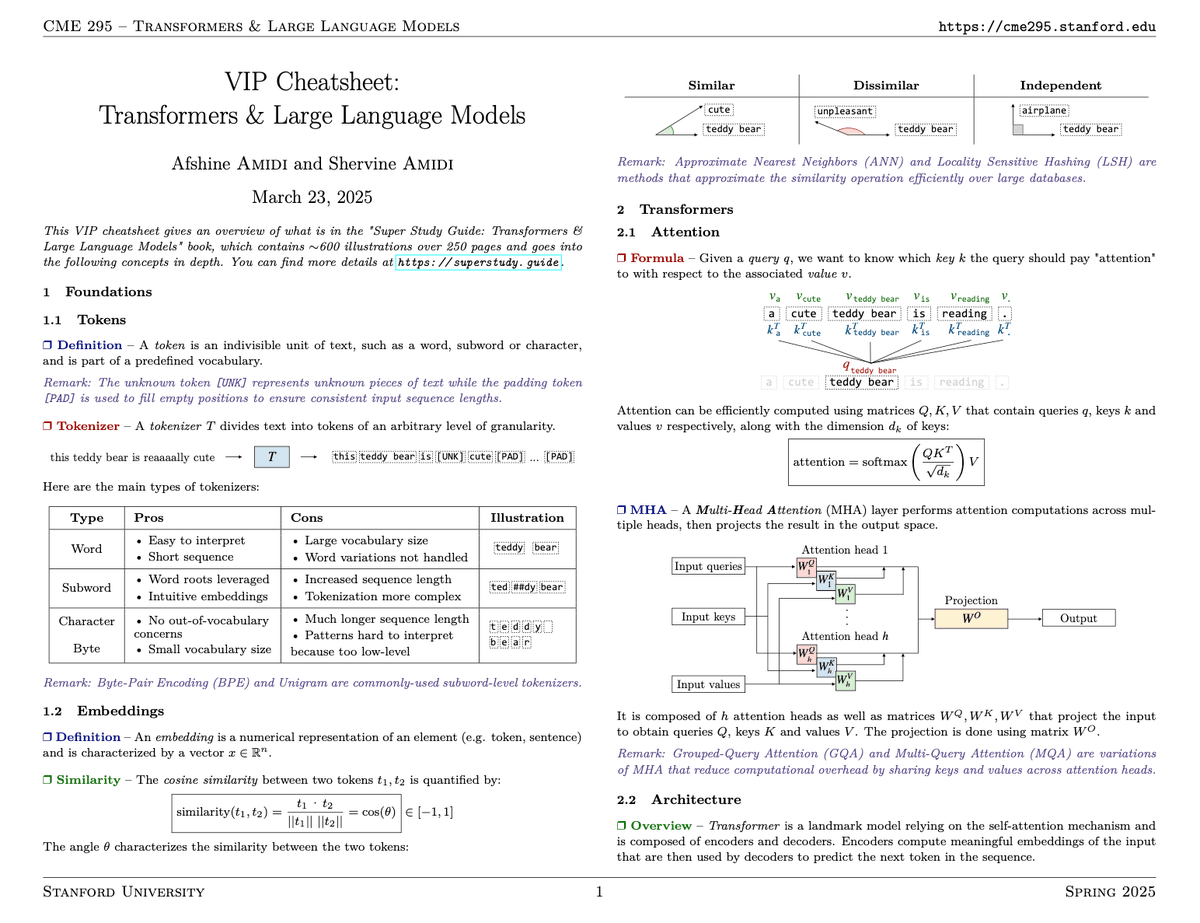

This Spring, my twin brother Shervine Amidi and I will be teaching a new class at Stanford called "Transformers & Large Language Models" (CME 295). The goal of this class is to understand where LLMs come from, how they are trained, and where they are most used. We will also explore