Nicola Cancedda

@nicola_cancedda

Research Scientist Manager @MetaAI

ID: 19602444

27-01-2009 17:15:11

43 Tweets

417 Followers

156 Following

So many applications need to index text content. Releasing BELA, the first end-to-end entity linking model that works in 97 languages! arxiv.org/abs/2306.08896 github.com/facebookresear… Nora Kassner Mike Kashyap Popat Louis Martin Frédéric Dreyer

Check out these new insights on transformers from Lena Voita, Javier Ferrando and Christoforos!

There is a common mechanism behind LLM jailbreaking, and it can be leveraged to make models safer! Check out our new work! With Lei Yu, Virginie Do and Karen Hambardzumyan.

I am at #ICLR and honored to present this work on Saturday afternoon at the poster session. Thanks Lei Yu Karen Hambardzumyan Nicola Cancedda for this wonderful collaboration! I am also happy to chat about Llama / agents / safety 👋

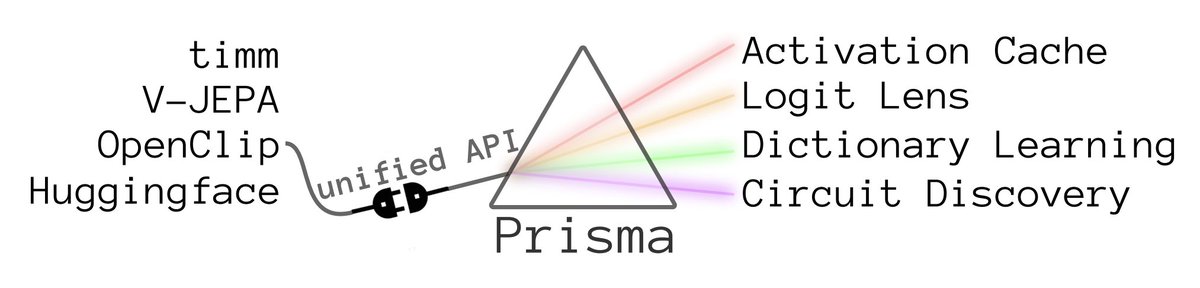

Our paper Prisma: An Open Source Toolkit for Mechanistic Interpretability in Vision and Video received an Oral at the Mechanistic Interpretability for Vision Workshop at CVPR 2025! 🎉 We’ll be in Nashville next week. Come say hi 👋 #CVPR2025 Mechanistic Interpretability for Vision @ CVPR2025