Marius Miron

@nkundiushuti

mediteromanian, signal processing and machine learning, ex-photographer, ex-music blogger, amateur musician, field recordist, cyborg

ID: 426721619

http://mariusmiron.com 02-12-2011 16:15:30

1.1K Tweets

522 Followers

517 Following

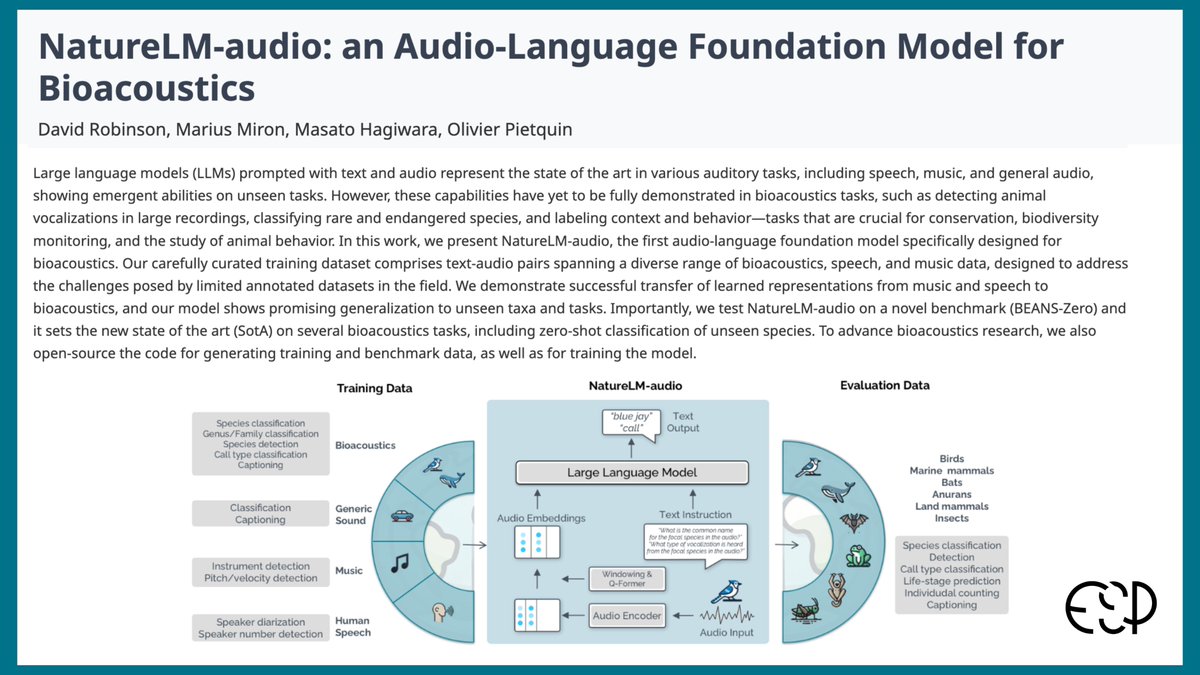

🎙️✨ Join me and the researchers behind NatureLM-audio (Marius Miron and David Robinson) as we present a live technical walkthrough on Nov 21 at 5pm GMT (12pm US Eastern / 9am US Pacific)! Interested? Head to our Discord community for more information: discord.com/invite/H2Y532a…

this neurips is really going to be remembered as the "end of pretraining" neurips. notes from Dr. Noam Brown's talk on scaling test-time compute today (thank you Hattie Zhou for organizing)

ESP co-founder Aza Raskin spoke with Kenneth Cukier on the Babbage podcast by The Economist, where he shared how we're leveraging AI to decode animal communication and working toward a future of interspecies understanding 🌍 economist.com/podcasts/2025/…

I'll be giving a talk by videoconference tomorrow at 2PM Colombia time on how to use artificial intelligence to decode animal behavior. Earth Species Project (ESP)