Noah Constant

@noahconst

Research Scientist @GoogleDeepMind

ID: 1371526495965831173

15-03-2021 18:20:08

23 Tweets

588 Followers

117 Following

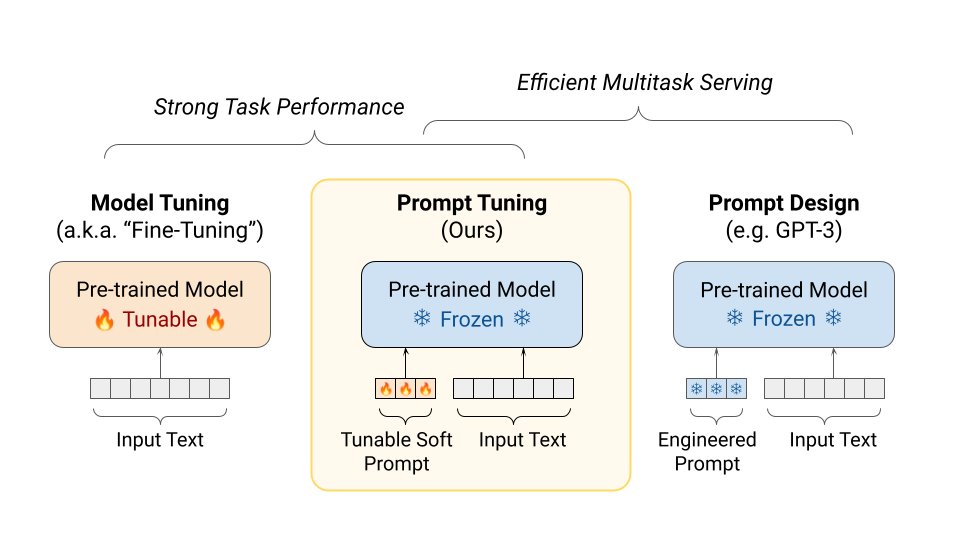

Happy to share our soft prompt transfer (SPoT) paper made it to #ACL2022 🎉. On the SuperGLUE leaderboard, SPoT is the first parameter-efficient approach that is competitive with methods that tune billions of parameters. w/ Brian Lester, Noah Constant, @aboSamoor, Daniel Cer

I love music most when it’s live, in the moment, and expressing something personal. This is why I’m psyched about the new “DJ mode” we developed for MusicFX: aitestkitchen.withgoogle.com/tools/music-fx… It’s an infinite AI jam that you control 🎛️. Try mixing your unique 🌀 of instruments, genres,

I’m so proud of the updated version of #MusicFXDJ we developed in collaboration with Jacob Collier, available today at labs.google/musicfx. Over the past year I’ve spent countless hours experimenting with our real-time music models, and it feels like I’ve learned to play a

We’ve just released Magenta RealTime, an open-weights live music model that lets you craft sounds in real time by exploring the latent space through text and audio! 🤗 Model: huggingface.co/google/magenta… 🧑‍💻 Code: github.com/magenta/magent… 📝 Blog post: magenta.withgoogle.com/magenta-realti…