Ola Piktus

@olapiktus

ID: 1102704797403238400

04-03-2019 22:58:05

70 Tweets

1.1K Followers

405 Following

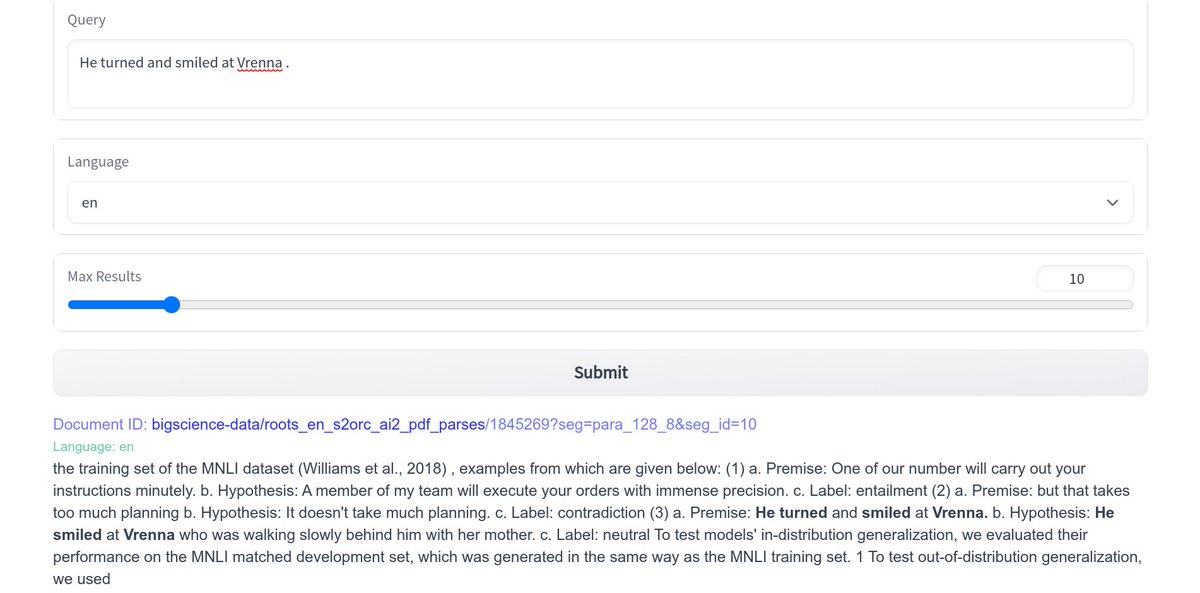

The ROOTS Search Tool from Hugging Face allowed me to quickly find XNLI examples in the BLOOM pre-training data, and the same is probably true for other benchmarks too. This 🔥 tool really highlights open data as key to making the study of LLM capabilities a science. huggingface.co/spaces/bigscie…

📢 New blog post: the attribution problem with generative AI #NLProc AI & Society TLDR: Some argue that publicly available data is fair game for commercial models bc human text/art also has sources. But unlike models, we know when attribution is due... hackingsemantics.xyz/2022/attributi…

ROOTS Search Tool is now shinier and better, with both exact search and BM25-based sparse retrieval ☀️🌸 Check out Anna Rogers's thread for details 🚀

[#FridayWiMLDSPaper 📜 curated by Marie Sacksick] "The ROOTS Search Tool: Data Transparency for LLMs", by Ola Piktus, Christopher Akiki, Paulo Villegas, Hugo Laurençon, @ggdupont, Sasha Luccioni, PhD 🦋🌎✨🤗, Yacine Jernite and Anna Rogers arxiv.org/abs/2302.14035
Every researcher's path is a rollercoaster filled with numerous lows. With unspeakable joy, today I want to share a high point: our paper, “Improving Wikipedia Verifiability with AI,” has been published in the prestigious Nature Portfolio. Dive in nature.com/articles/s4225… [1/4]
Our work on multi-epoch scaling laws with the amazing Niklas Muennighoff (applying to grad school!) and Sasha Rush, Teven Le Scao, Ola Piktus, Nouamane Tazi, TurkuNLP, Thomas Wolf and Colin Raffel won a runner-up outstanding paper award! See Niklas Muennighoff's thread x.com/Muennighoff/st…

Awesome poster presentation by Niklas Muennighoff for the paper "Scaling Data-Constrained Language Models" at #NeurIPS2023. Kudos to Sasha Rush, Boaz Barak, Teven Le Scao, Ola Piktus, Nouamane Tazi, Sampo Pyysalo, Thomas Wolf and Colin Raffel!