onnxruntime

@onnxruntime

Cross-platform training and inferencing accelerator for machine learning models.

ID: 1041831598415523841

http://onnxruntime.ai

Joined 17-09-2018 23:29:44

283 Tweets

1.1K Followers

42 Following

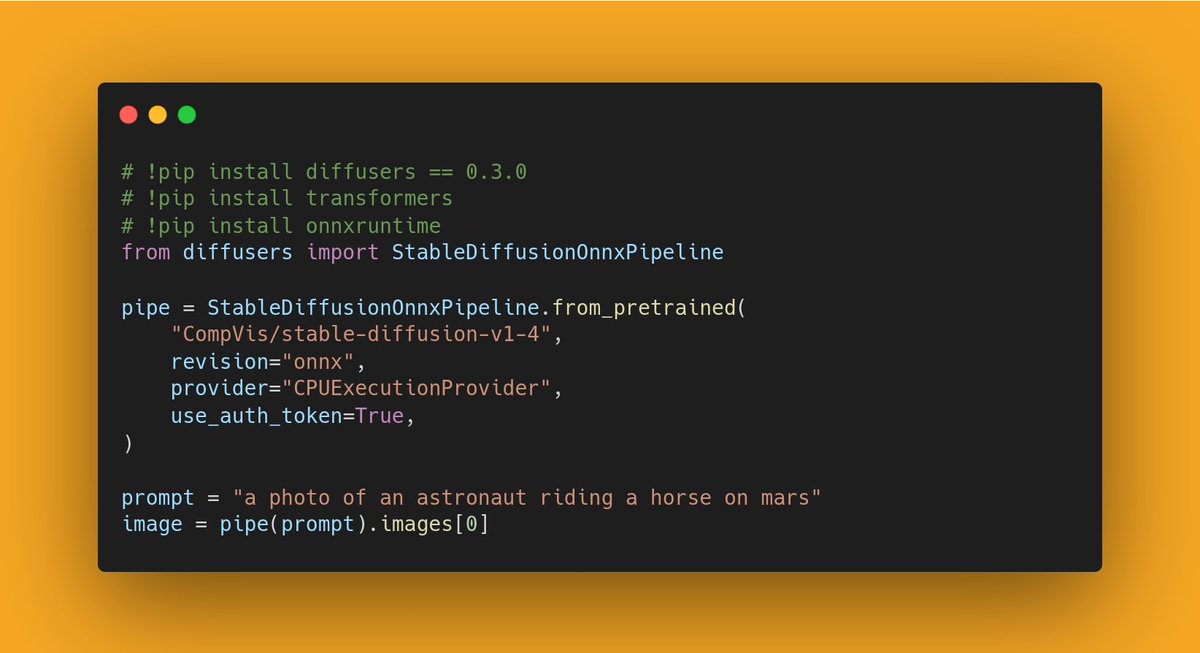

🏭 The hardware optimization floodgates are open! 🔥 Diffusers 0.3.0 supports an experimental ONNX exporter and pipeline for Stable Diffusion 🎨. To find out how to export your own checkpoint and run it with onnxruntime, check the release notes: github.com/huggingface/di…

Gerald Versluis, what about a video on ONNX Runtime? Here is the official documentation: devblogs.microsoft.com/xamarin/machin… And a MAUI example: github.com/microsoft/onnx…

Finally, tokenization with SentencePiece BPE works as expected in #NodeJS #JavaScript with the tokenizers library 🚀! Now I'm getting "invalid expand shape" errors when passing the encoded token IDs to the onnxruntime-converted MiniLM model from Microsoft Research: huggingface.co/microsoft/Mult…

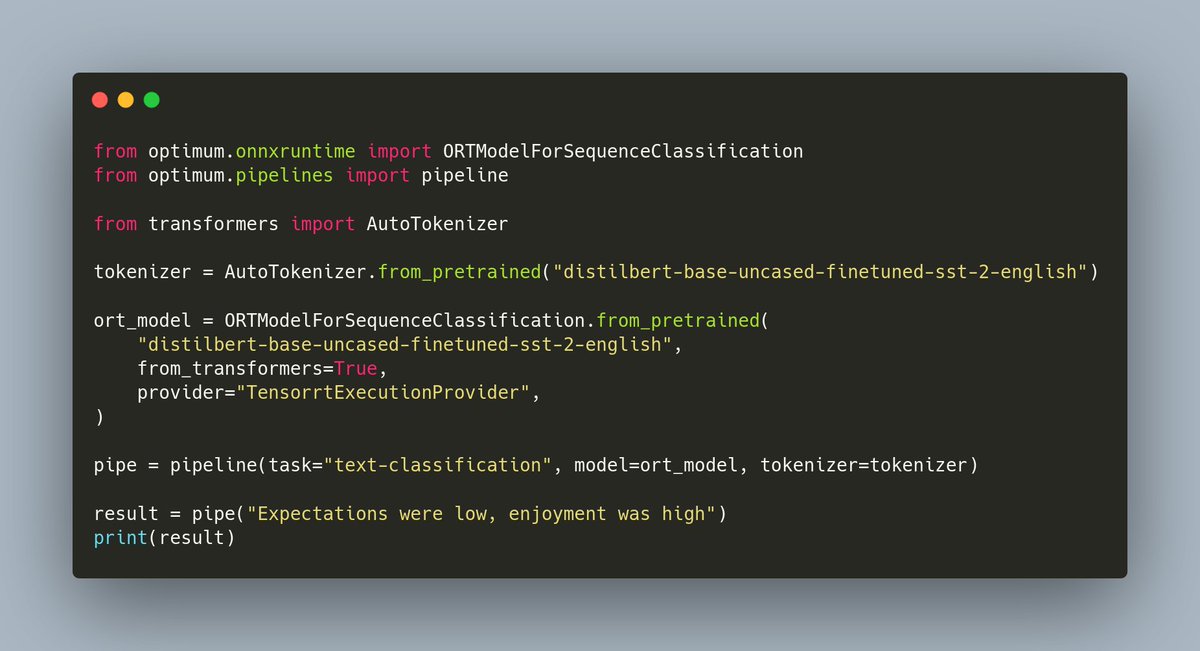

Want to use TensorRT as your inference engine for its GPU speedups, but don't want the compilation hassle? We've got you covered with 🤗 Optimum! With one line, you can leverage TensorRT through onnxruntime! Check out more at hf.co/docs/optimum/o…

Imagine the frustration of applying optimization tricks, only to find that copying data to the GPU slows down your "MUST-BE-FAST" inference... 🥵 🤗 Optimum v1.5.0 added onnxruntime IOBinding support to reduce your memory footprint. 👀 github.com/huggingface/op… More ⬇️

#ONNX Runtime saved the day with our interoperability and ability to run locally on-client and/or in the cloud! Our lightweight solution gave them the performance they needed with quantization and configuration tooling. Learn how they achieved this in this blog post! cloudblogs.microsoft.com/opensource/202…