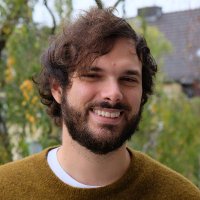

Antonio Orvieto

@orvieto_antonio

Deep Learning PI @ELLISInst_Tue, Group Leader @MPI_IS.

I compute stuff with lots of gradients 🧮,

I like Kierkegaard & Lévi-Strauss 🧙‍♂️

ID: 1172891108076077057

Website: http://orvi.altervista.org/

Joined: 14-09-2019 15:13:38

303 Tweets

1.1K Followers

1.1K Following

*Generalized Interpolating Discrete Diffusion* by Dimitri von Rütte, Antonio Orvieto, et al. A class of discrete diffusion models that combine standard masking with uniform noise. In pure masked diffusion a token is fixed once it is unmasked; mixing in uniform noise lets the model potentially "correct" previously wrong tokens. arxiv.org/abs/2503.04482
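To make the "masking plus uniform noise" idea concrete, here is a minimal sketch of a per-token forward corruption step, assuming a simple hand-rolled scheme: each clean token survives with probability `keep_prob`, and the remaining noise mass is split between an absorbing `[MASK]` token and a uniformly random real token according to `uniform_frac`. `VOCAB_SIZE`, `MASK_ID`, `keep_prob`, `uniform_frac`, `noise_distribution` and `corrupt` are all hypothetical names for illustration; this is not the GIDD noise schedule or code from the paper.

```python
import random

# Hypothetical setup for illustration: token ids 0..VOCAB_SIZE-1 are real
# tokens and MASK_ID is an extra absorbing "[MASK]" token.
VOCAB_SIZE = 8
MASK_ID = VOCAB_SIZE


def noise_distribution(x0, keep_prob, uniform_frac):
    """Per-token distribution over VOCAB_SIZE + 1 states (real tokens + mask).

    keep_prob:    probability that the clean token x0 survives.
    uniform_frac: share of the noise mass given to uniform random real
                  tokens instead of the mask token (0.0 = pure masking).
    """
    noise = 1.0 - keep_prob
    # Uniform noise is spread evenly over the real vocabulary;
    # the rest of the noise mass goes to the mask token.
    probs = [noise * uniform_frac / VOCAB_SIZE] * VOCAB_SIZE
    probs.append(noise * (1.0 - uniform_frac))
    probs[x0] += keep_prob
    return probs


def corrupt(tokens, keep_prob, uniform_frac, rng):
    """Sample a noisy sequence, token by token, from noise_distribution."""
    states = list(range(VOCAB_SIZE + 1))
    return [
        rng.choices(states, weights=noise_distribution(x, keep_prob, uniform_frac))[0]
        for x in tokens
    ]


rng = random.Random(0)
clean = [1, 2, 3, 4, 5, 6]
print(corrupt(clean, keep_prob=0.5, uniform_frac=0.3, rng=rng))
```

With `uniform_frac=0.0` this reduces to pure masking, where every corrupted position is visibly `[MASK]`. With `uniform_frac > 0`, some corrupted positions look like ordinary tokens, so a denoiser trained on such data has to judge every visible token rather than only fill in masks, which is the intuition behind being able to "correct" wrong tokens.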