Oskar Hallström

@oskar_hallstrom

AI R&D @lightonio. Former Indie One Hit Wonder @ Billie Garlic.

ID: 1777997990562566146

https://open.spotify.com/artist/2KZoVTprHSLoYX7G38MBh9?si=IcUsQjxiQzmTH21AqGPC6w 10-04-2024 09:52:30

44 Tweets

297 Followers

79 Following

🚀 Insane day yesterday for the Knowledge squad at LightOn! Raphaël Sourty shipped PyLate-rs and Antoine Chaffin delivered a beautiful lecture on the supremacy of late interaction models, LFG ❤️

We are at #ACL2025 with Oskar Hallström. Do not hesitate to come and talk with us if you are interested in IR, encoders, late interaction or VLMs! I am attaching a picture of us because I figured people do not know our faces due to our profile pictures 🥲

📍 ACL 2025: Encoder-only coffee chat, anyone? Antoine Chaffin & Oskar Hallström are in Vienna to present the ModernBERT paper at ACL 2025 📅 Don’t miss the Poster Session today at 11am. ➡️ Poster 115 ☕ Or feel free to catch them in the #ACL2025NLP aisles! 👉 To know more

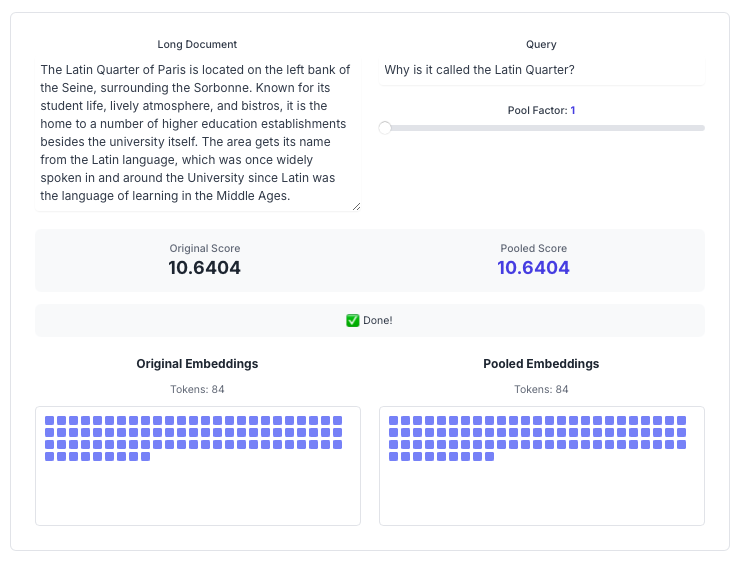

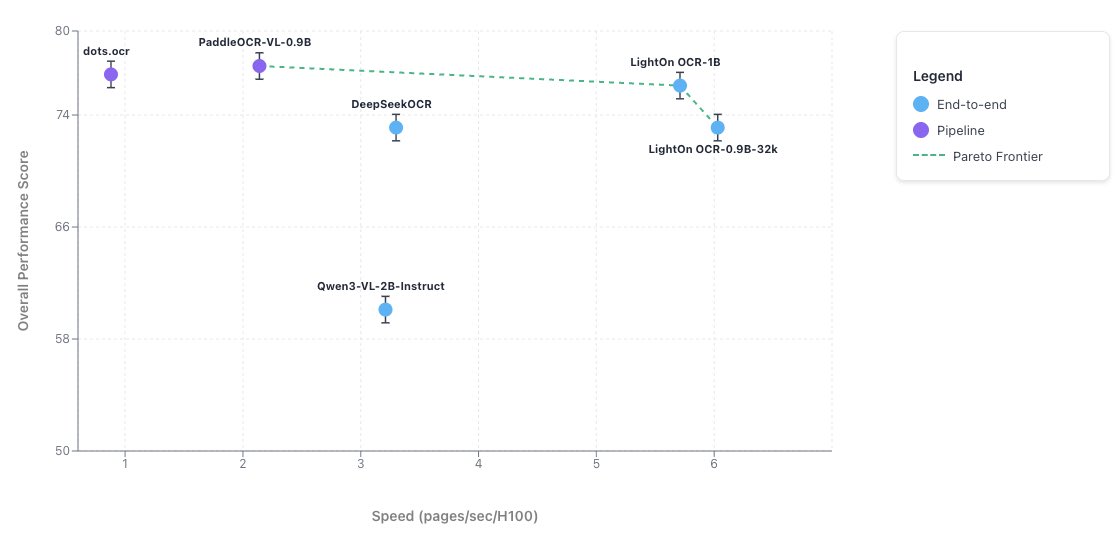

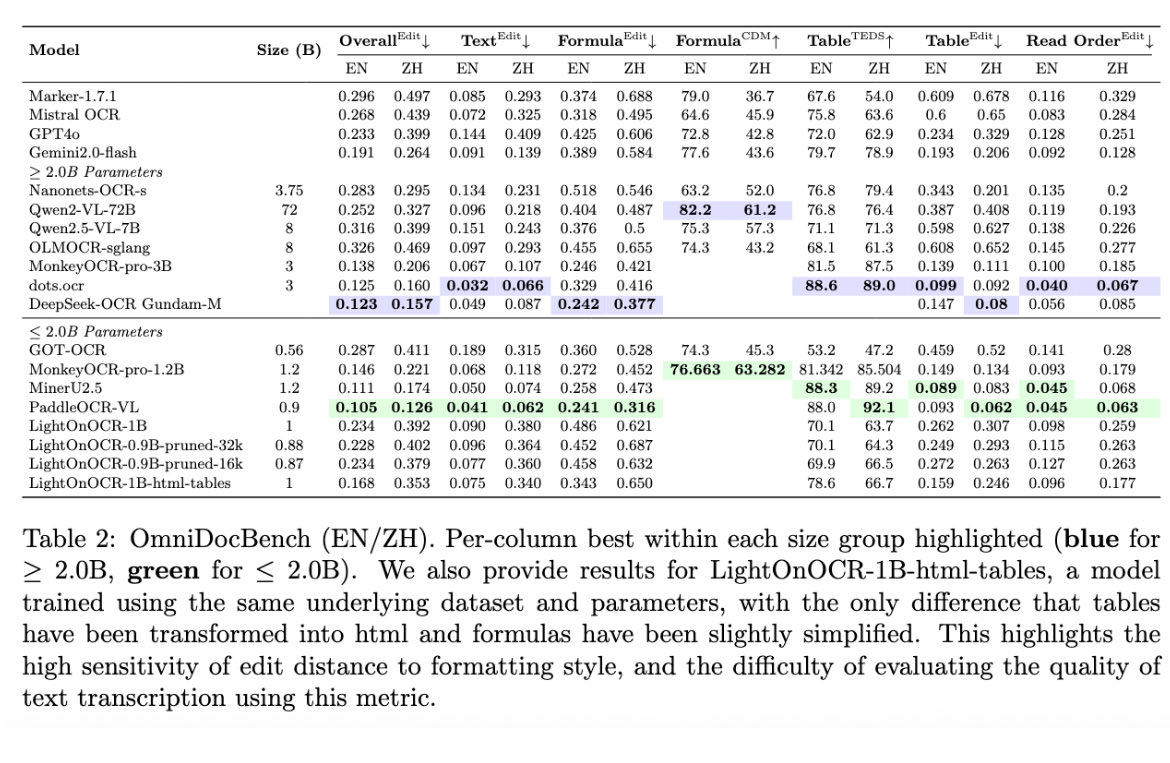

The last few days have been insane in OCR land, with releases from DeepSeek, PaddlePaddle and others. Now we at LightOn are entering the game with our latest release, pushing the state of the art even further. Kudos to staghado, Baptiste Aubertin and Adrien Cavaillès 🥳

Shoutout to our Grand Retrieval Master and Model Whisperer Amélie Chatelain. I had so much FOMO for this talk that I decided to go to London myself to see it. See you there!!

Had an amazing time giving a talk on Retrieval in the Age of Agents at Weights & Biases's #FullyConnected2025! Feeling very grateful to have had this opportunity as well as fascinating discussions with the other attendees ❤️.