Piotr Nawrot

@p_nawrot

PhD student in NLP @Edin_CDT_NLP | Previously intern @Nvidia @AIatMeta @cohere | 🥇🥈@ Polish Championships in Flunkyball

ID: 2694126977

https://piotrnawrot.github.io

10-07-2014 06:50:37

342 Tweets

6.6K Followers

257 Following

Tomorrow at 6pm CET I'm giving a talk at Cohere Labs about our latest work on Sparse Attention. I plan to describe the field as it stands now, discuss our evaluation results, and share insights into what I believe is the future of Sparse Attention. See you!

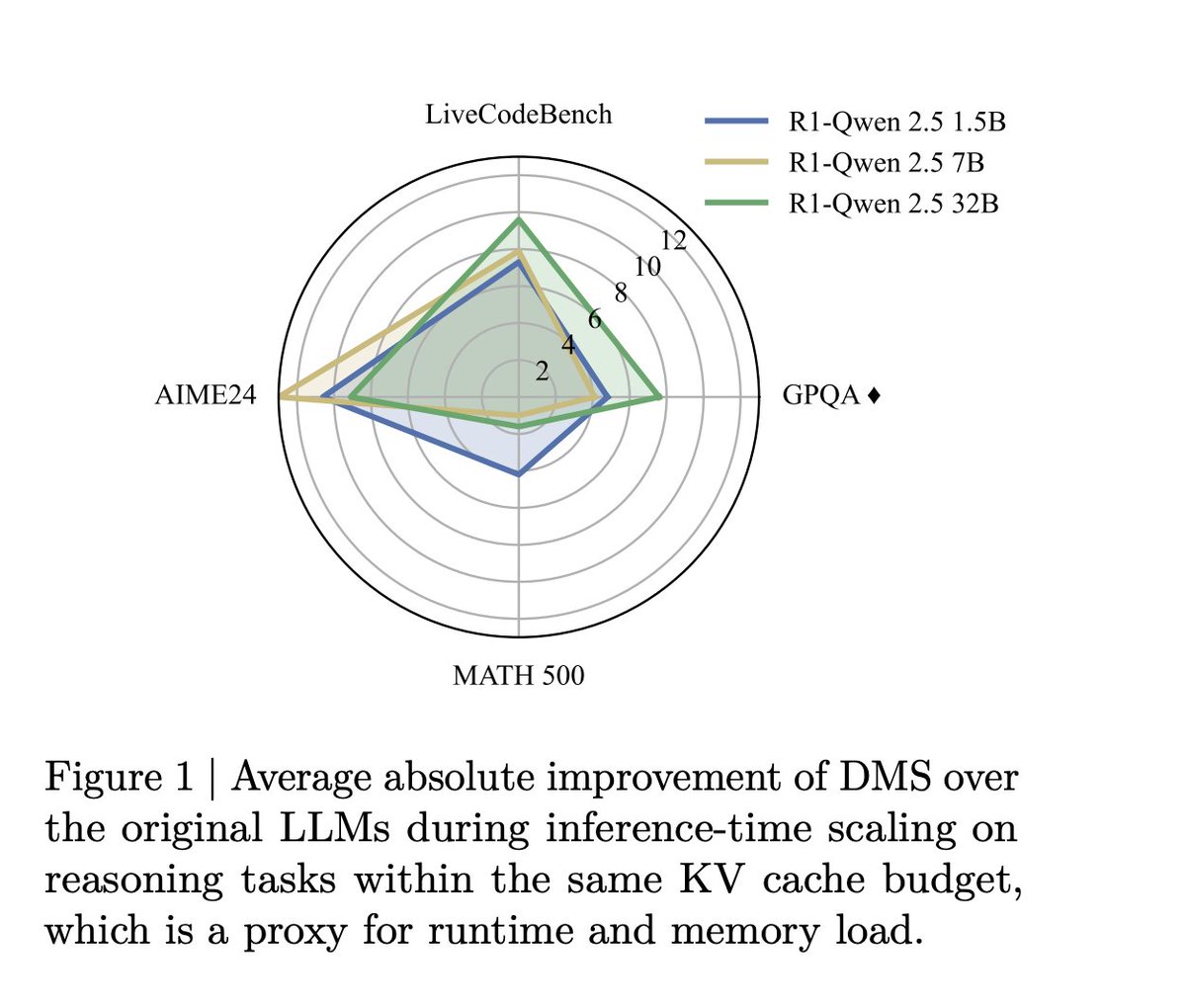

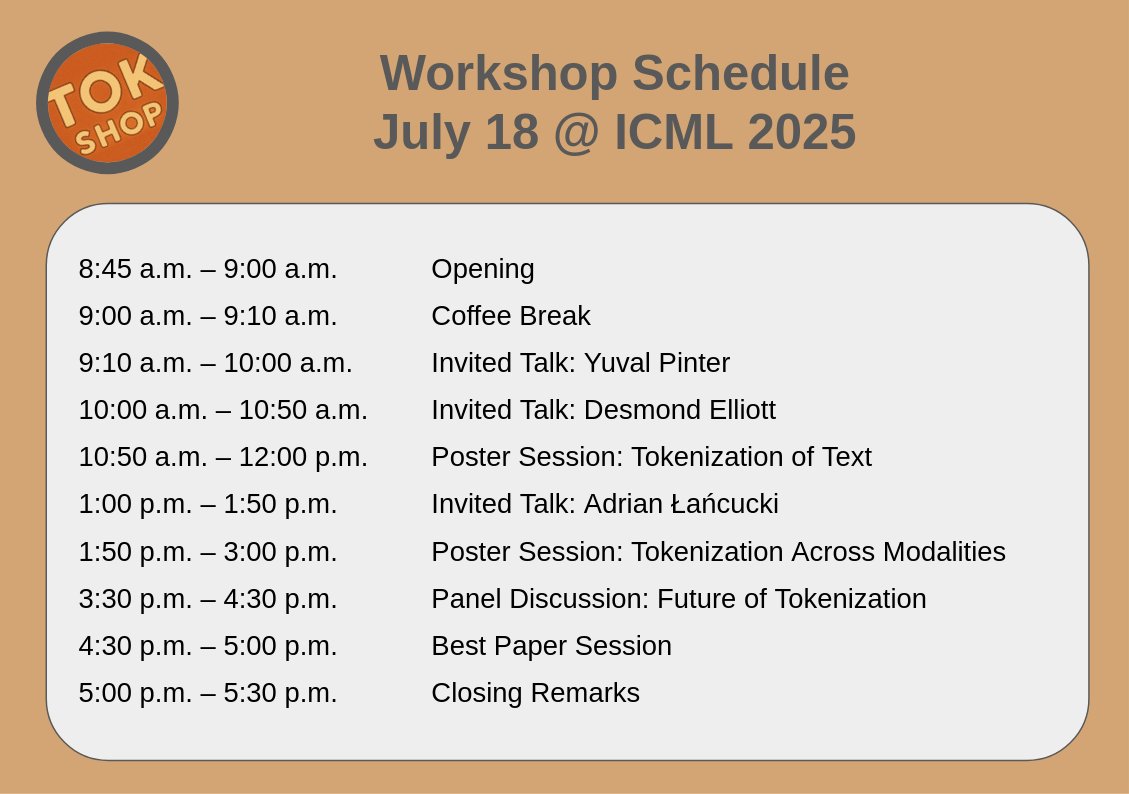

If you are at the ICML Conference, make sure to attend Adrian Lancucki’s invited talk on our inference-time *hyper*-scaling paper (and more!) at the Tokenization Workshop this Friday: tokenization-workshop.github.io/schedule/

*The Sparse Frontier: Sparse Attention Trade-offs in Transformer LLMs* by Piotr Nawrot, Edoardo Ponti, Kelly Marchisio (St. Denis), and Sebastian Ruder. They study sparse attention techniques at scale, comparing them to smaller dense models at the same compute budget. arxiv.org/abs/2504.17768