Parameter Lab

@parameterlab

Empowering individuals and organisations to safely use foundational AI models.

ID: 1640056112329240578

http://parameterlab.de 26-03-2023 18:20:43

127 Tweets

259 Followers

109 Following

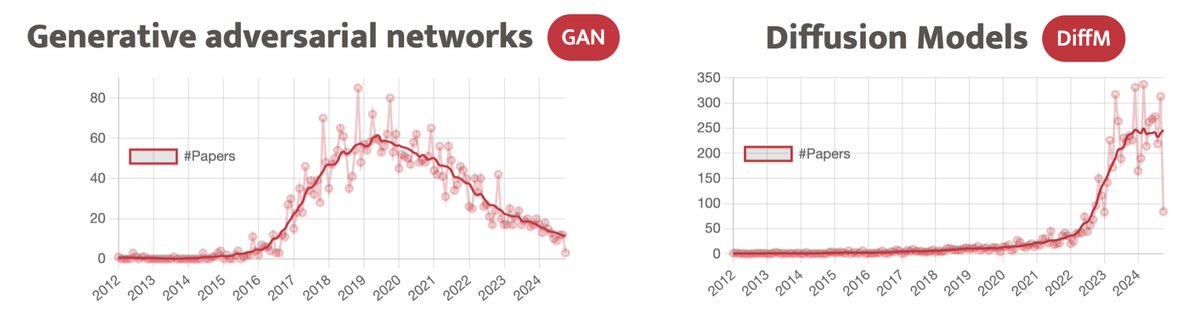

There's an internship opening at Parameter Lab: parameterlab.de/careers. The research outputs have been quite successful so far: researchtrend.ai/organizations/…

I'm excited to announce that my internship paper at Parameter Lab was accepted to Findings of #NAACL2025 🎉 Huge thanks to Martin Gubri, Seong Joon Oh, and Sangdoo Yun! Amazing team!!

#NAACL2025 has started! I’ll be presenting my work at Parameter Lab about detecting pretraining data on Friday. 🗓️ May 2, 11:00 AM - 12:30 PM 🗺️ Poster Session 8 - APP: NLP Applications, Location: Hall 3. Work with Martin Gubri, Sangdoo Yun, and Seong Joon Oh.