Fred Zhangzhi Peng

@pengzhangzhi1

#ML & #ProteinDesign. PhD student @DukeU.

ID: 1505051724796481536

https://pengzhangzhi.github.io/home/ 19-03-2022 05:21:19

214 Tweets

455 Followers

581 Following

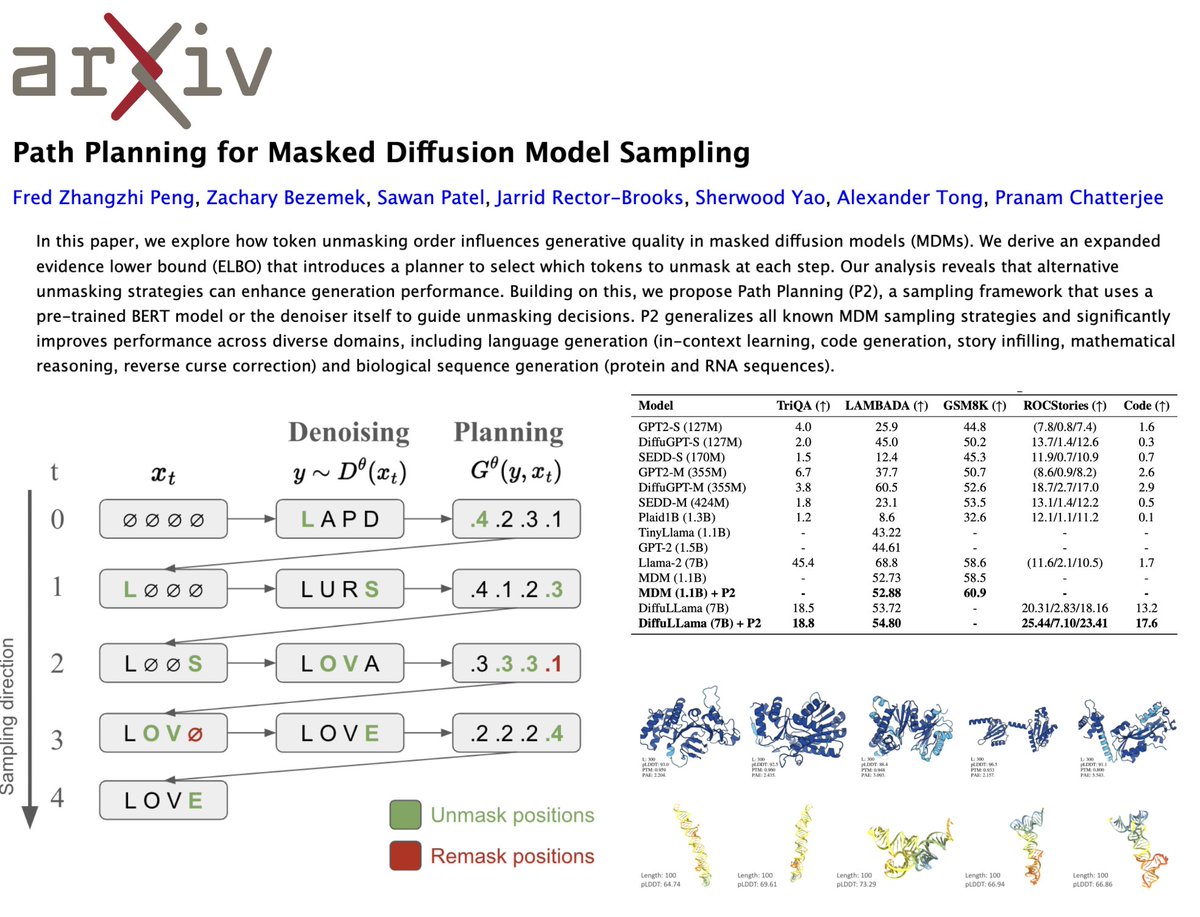

It's tough times for science. 🥺 But we have to keep innovating to fight another day, and today I'm so proud to share Fred Zhangzhi Peng's new, groundbreaking sampling algorithm for generative language models, Path Planning (P2). 🌟 📜: arxiv.org/abs/2502.03540 💻: In the appendix!!

PTM-Mamba: a PTM-aware protein language model with bidirectional gated Mamba blocks. Nature Methods. 1. PTM-Mamba is the first protein language model explicitly designed to encode post-translational modifications (PTMs), using a novel bidirectional gated Mamba architecture fused

How can protein language models incorporate post-translational modifications (PTMs) to better represent the functional diversity of the proteome? Nature Methods, Duke University. "PTM-Mamba: a PTM-aware protein language model with bidirectional gated Mamba blocks" Authors: Fred Zhangzhi Peng

Super excited about Singapore and ICLR 2025. Will present my work on masked diffusion models at ICLR Nucleic Acids Workshop #DeLTa #FPI and GEMBio Workshop. Please stop by the posters and chat about MDMs and protein design :)