Pierre Fernandez

@pierrefdz

Researcher (Meta, FAIR Paris) • Working on AI, watermarking and data protection • ex. @Inria, @Polytechnique, @UnivParisSaclay (MVA)

ID: 1325092743073443840

https://pierrefdz.github.io/

07-11-2020 15:08:36

164 Tweets

511 Followers

246 Following

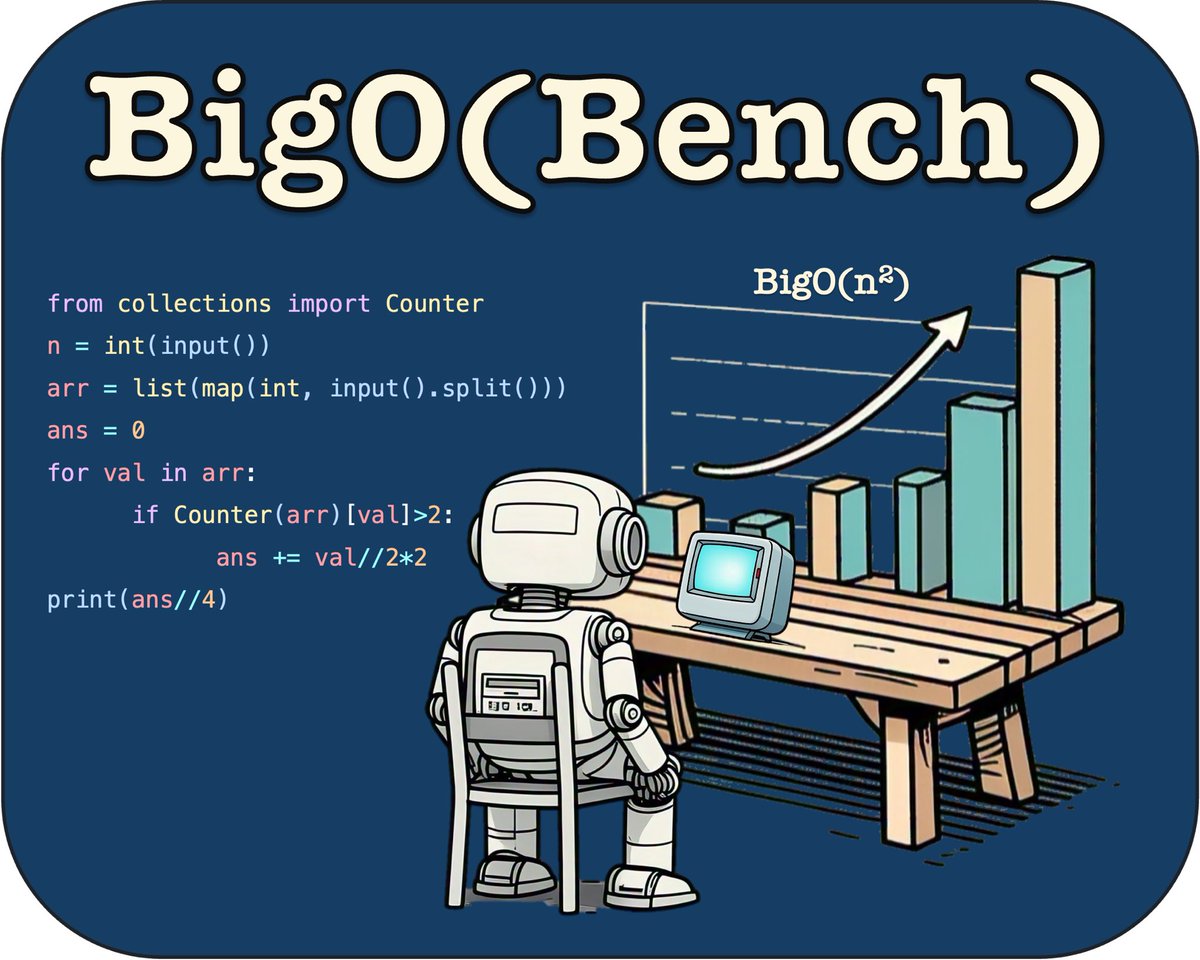

Complexity-related generation sets the bar high for code-related models 🧵

📖 Read of the day, season 3, day 27: « Big(O)Bench: Can LLMs generate code with controlled time and space complexity? », by Pierre Chambon, Baptiste Rozière, Benoît Sagot and Gabriel Synnaeve from AI at Meta. We know that

1/ Happy to share my first accepted paper as a PhD student at Meta and École normale supérieure | PSL, which I will present at ICLR 2026: 📚 Our work proposes difFOCI, a novel rank-based objective for ✨better feature learning✨ In collab with David Lopez-Paz, Giulio Biroli and Levent Sagun!

My team at Google DeepMind is hiring. If you are passionate about engineering and real-world impact, and have experience in robust ML, provenance of synthetic media, or data trustworthiness, consider applying: job-boards.greenhouse.io/deepmind/jobs/…

We present an Autoregressive U-Net that incorporates tokenization inside the model, pooling raw bytes into words then word-groups. AU-Net focuses most of its compute on building latent vectors that correspond to larger units of meaning. Joint work with Badr Youbi Idrissi 1/8

Federico Baldassarre (@baldassarrefe): DINOv2 meets text at #CVPR 2025! Why choose between high-quality DINO features and CLIP-style vision-language alignment? Pick both with dino.txt 🦖📖

We align frozen DINOv2 features with text captions, obtaining both image-level and patch-level alignment at a minimal cost. [1/N]

![Federico Baldassarre (@baldassarrefe) on Twitter photo: DINOv2 meets text at #CVPR 2025, dino.txt](https://pbs.twimg.com/media/Gtbam9fW0AAC7_j.jpg)