Prafull Sharma

@prafull7

PostDoc @MIT with Josh Tenenbaum and Phillip Isola // PhD @MIT with Bill Freeman and Fredo Durand // BS @Stanford

ID: 188711985

http://prafullsharma.net 09-09-2010 12:12:26

346 Tweets

1.1K Followers

755 Following

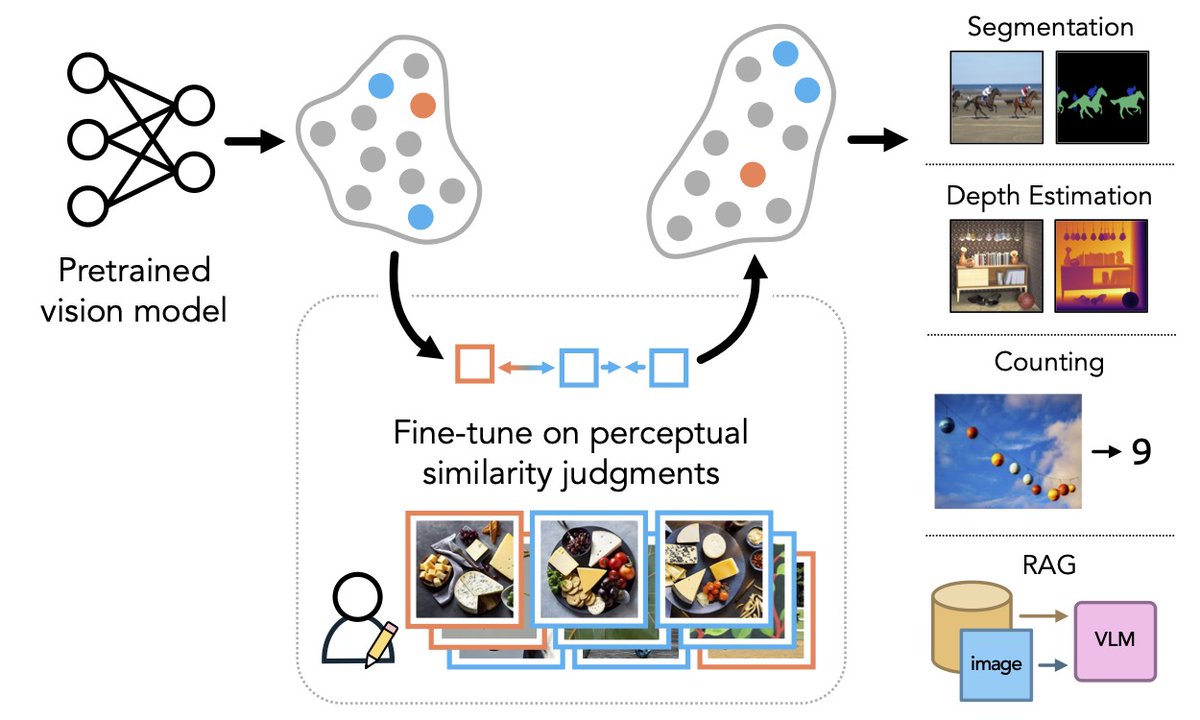

Thrilled to share that ZeST has been accepted to #ECCV2024 !! A huge thanks to my collaborators/mentors Prafull Sharma, Varun Jampani, and my supervisors Niki Trigoni and Andrew Markham for the amazing support!

We just wrote a primer on how the physics of sound constrains auditory perception: authors.elsevier.com/a/1jzSR3QW8S6E… Covers sound propagation and object interactions, and touches on their relevance to music and film. I enjoyed working on this with Vin Agarwal and James Traer.

There's a new kind of coding I call "vibe coding", where you fully give in to the vibes, embrace exponentials, and forget that the code even exists. It's possible because the LLMs (e.g. Cursor Composer w Sonnet) are getting too good. Also I just talk to Composer with SuperWhisper

We wrote a new video diffusion paper! Kiwhan Song and Boyuan Chen and co-authors did absolutely amazing work here. Apart from really working, the method of "variable-length history guidance" is really cool and based on some deep truths about sequence generative modeling....