Prakhar Ganesh

@prakhar_24

PhD @ McGill and Mila | Interested in Responsible AI and Revenge

ID: 861897494011170816

https://prakharg24.github.io/

09-05-2017 10:55:50

35 Tweets

42 Followers

173 Following

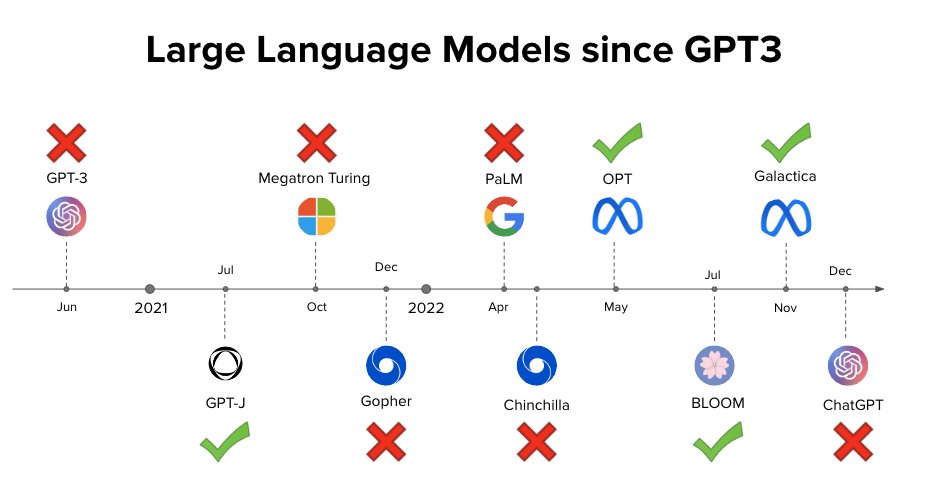

Here's hoping I don't need to update this slide again before my talk next week at EMNLP 2025! If anyone is planning to release anything next week, please lmk soon 😅 Am I missing any text-only LLMs?

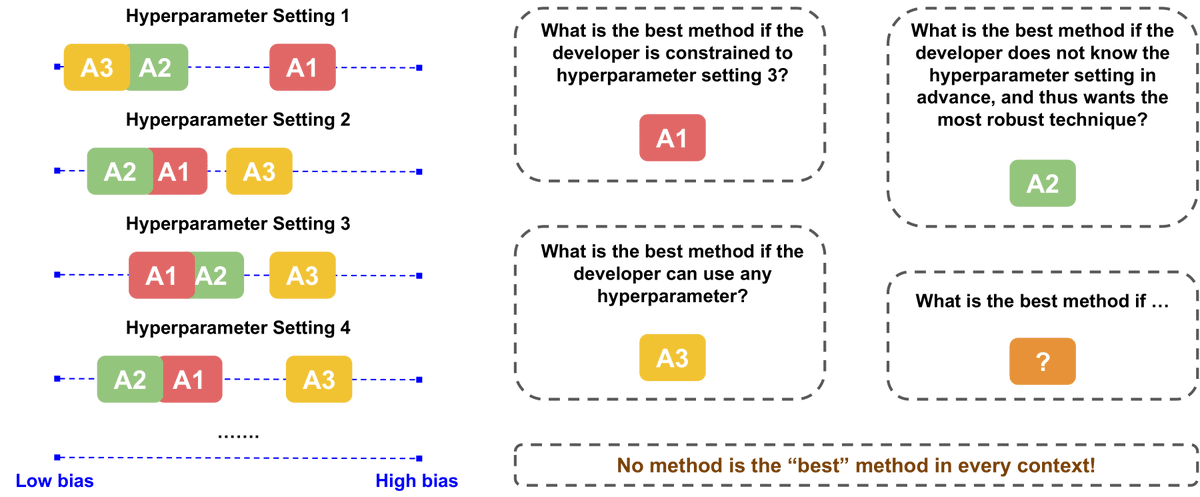

What's the best bias mitigation algorithm? There isn't one! Benchmarks often miss critical nuances. In our latest paper, we unpack these pitfalls. Critical work w/ Usman Gohar, Lu Cheng, and Golnoosh Farnadi. @NeurIPS2024 🔥Spotlight at AFME 2024 @ NeurIPS 2024 📰 arxiv.org/pdf/2411.11101

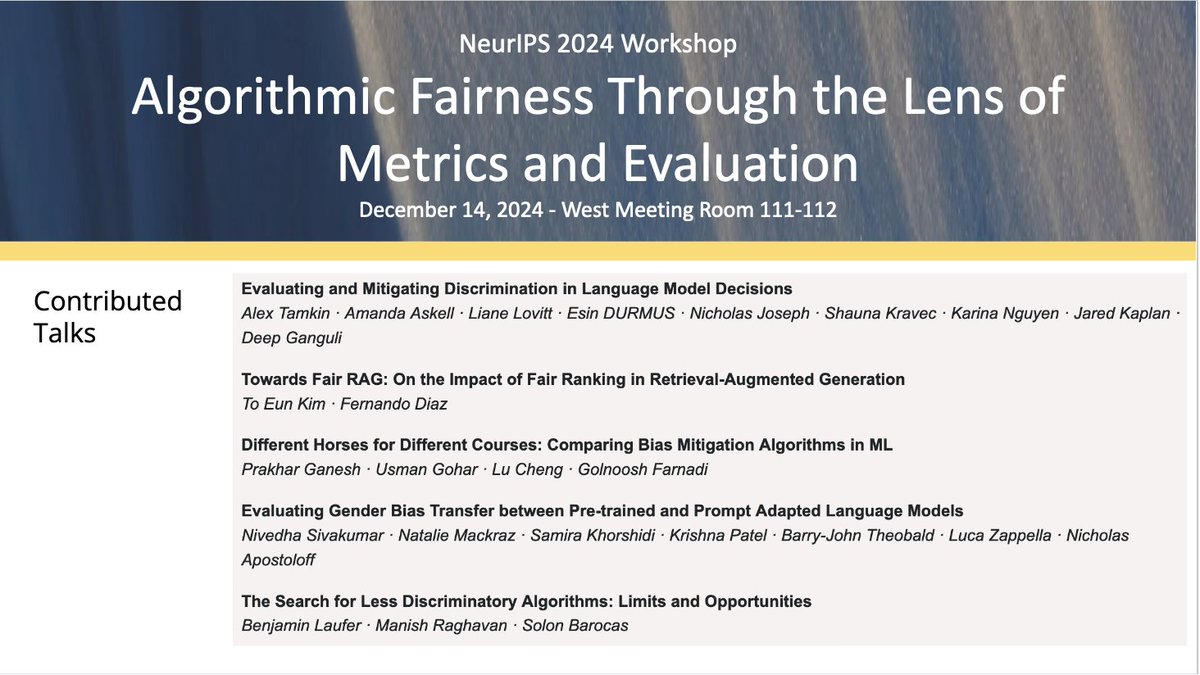

TODAY is the Algorithmic Fairness Workshop #AFME2024 at #NeurIPS2024! 📍West Meeting 111-112! Excited for our 5 contributed spotlight talks today from Benjamin Laufer, Danny To Eun Kim (@teknology.bsky.social), Alex Tamkin, Prakhar Ganesh, and Natalie Mackraz!