Pranay Mathur

@pranay_mathur17

MLE Perception@Cobot | Prev. at @MathWorks @GoogleOSS @arlteam | Alumni @GeorgiaTech @bitspilaniindia

ID: 1736517024237694976

https://matnay.github.io 17-12-2023 22:41:50

18 Tweets

30 Followers

81 Following

Neural Visibility Field for Uncertainty-Driven Active Mapping. Shangjie Xue, Jesse Dill, Pranay Mathur, Frank Dellaert, Panagiotis Tsiotras, Danfei Xu. tl;dr: visibility -> uncertainty estimation; distribution of a color along a ray -> GMM -> entropy -> next best view. arxiv.org/pdf/2406.06948

Active perception with NeRF! It’s quite rare to see a work that is both principled and empirically effective. Neural Visibility Field (NVF) led by Shangjie Xue is a delightful exception. NVF unifies both visibility and appearance uncertainty in a Bayes net framework and achieved

Introducing EgoMimic - just wear a pair of Project Aria @Meta smart glasses 👓 to scale up your imitation learning datasets! Check out what our robot can do. A thread below👇

Presenting EgoMimic at #CoRL2024! 🎉 Effortless data collection with Project Aria @Meta glasses—just wear & go. Our low-cost manipulator leverages this scalable data to perform grocery handling, laundry, coffee-making & more. Thrilled to be a part of this effort! egomimic.github.io

The Georgia Tech School of Interactive Computing Georgia Tech is presenting EgoMimic at #Corl2024. 🎉 Using egocentric data from Aria glasses, the team trained their robot to seamlessly perform everyday tasks. EgoMimic is entirely open source, from the robot hardware to the dataset and learning algorithms. Check out

Thrilled to share this story covering our collaboration with Project Aria @Meta Reality Labs at Meta! Human data is robot data in disguise. Imitation learning is human modeling. We are at the beginning of something truly revolutionary, both for robotics and human-level AI beyond language.

Excited to announce EgoAct🥽🤖: the 1st Workshop on Egocentric Perception & Action for Robot Learning @ #RSS2025 in LA! We’re bringing together researchers exploring how egocentric perception can drive next-gen robot learning! Full info: egoact.github.io/rss2025 Robotics: Science and Systems

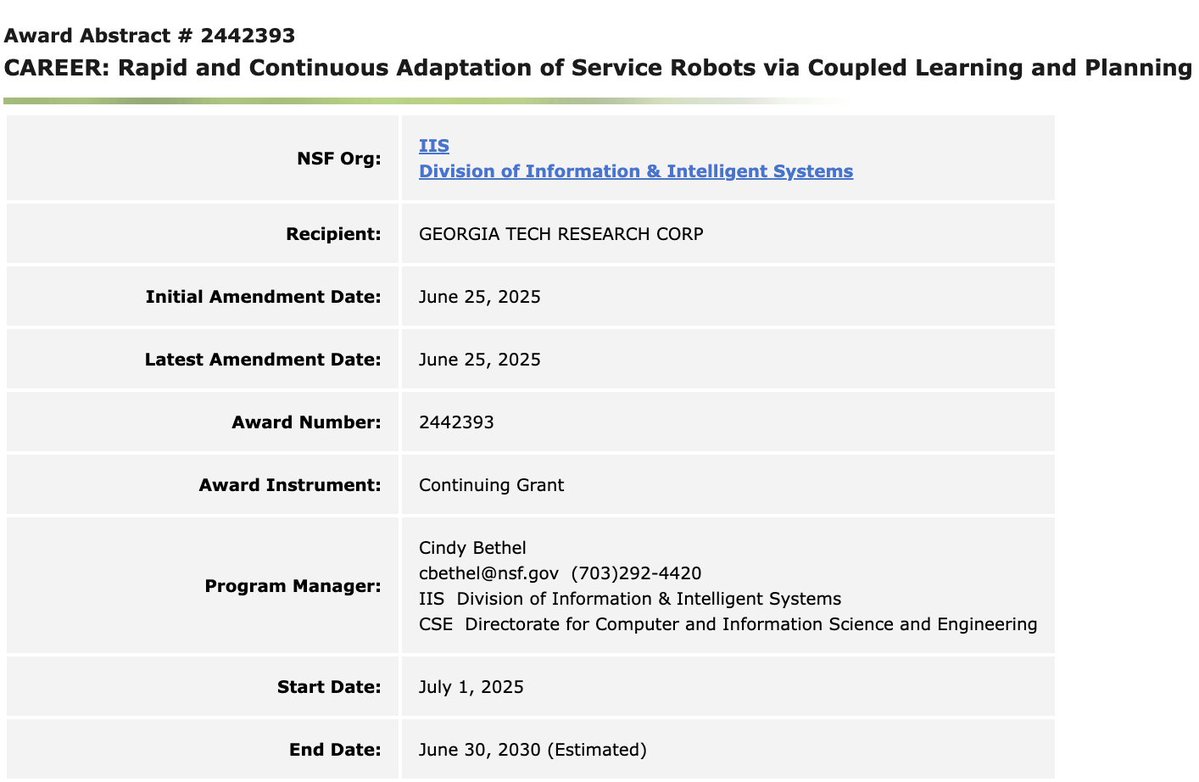

Honored to receive the NSF CAREER Award from the Foundational Research in Robotics (FRR) program! Deep gratitude to my Georgia Tech School of Interactive Computing Georgia Tech Computing colleagues and the robotics community for their unwavering support. Grateful to the U.S. National Science Foundation for continuing to fund the future of robotics.

At Cobot, we've been trying Real-Time Action Chunking from Physical Intelligence, which keeps robot rollouts smooth even with slow inference. Our blog shares a tweak for even slicker control. Check it out: alexander-soare.github.io/robotics/2025/…