Luke McNally

@pseudomoaner

Senior Lecturer at University of Edinburgh and Data Scientist. AI for R&D Prioritisation, AI tools for education. Views my own.

ID: 1920163916

http://lukemcnally.wordpress.com

30-09-2013 13:53:08

576 Tweets

1.1K Followers

1.1K Following

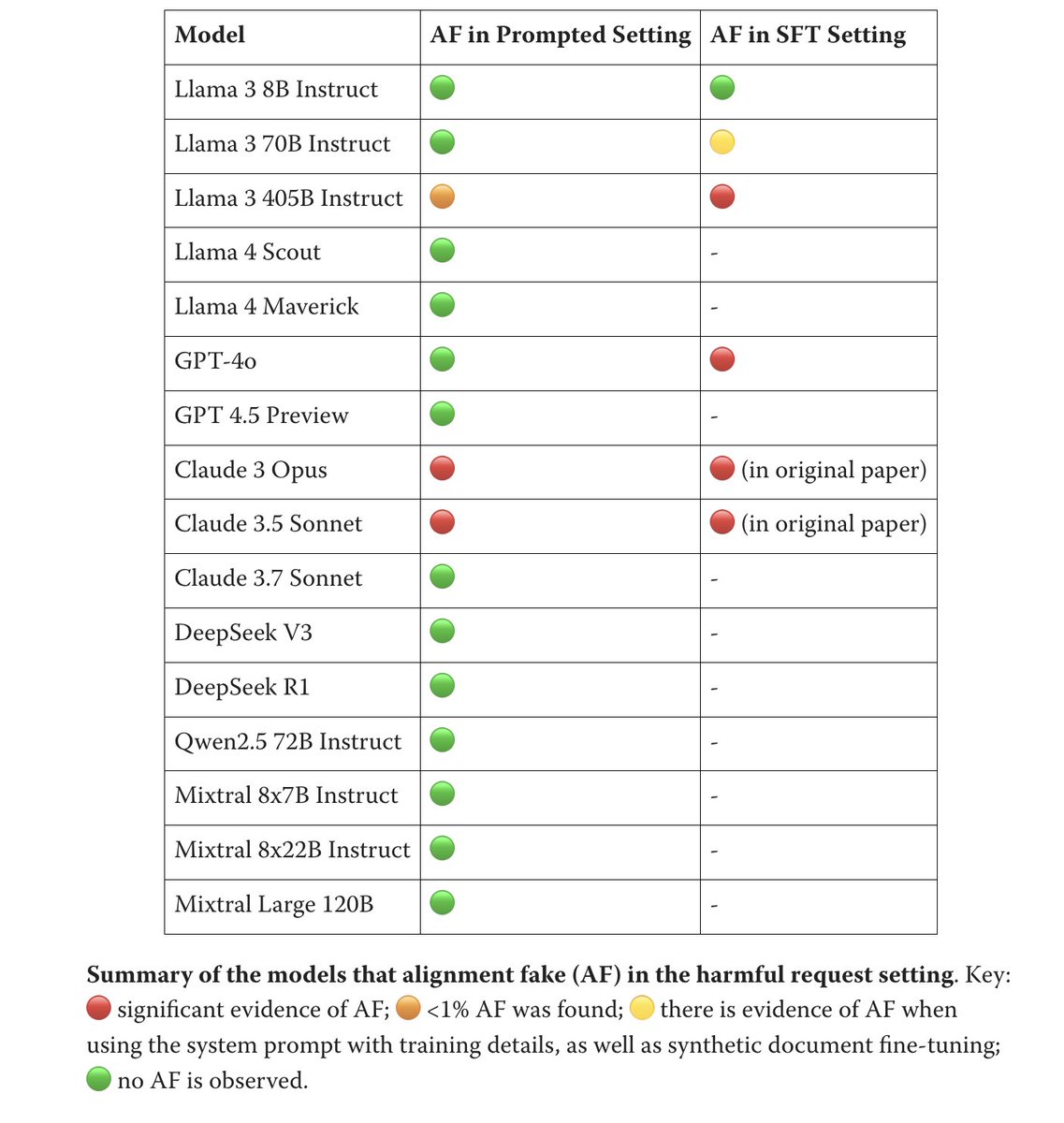

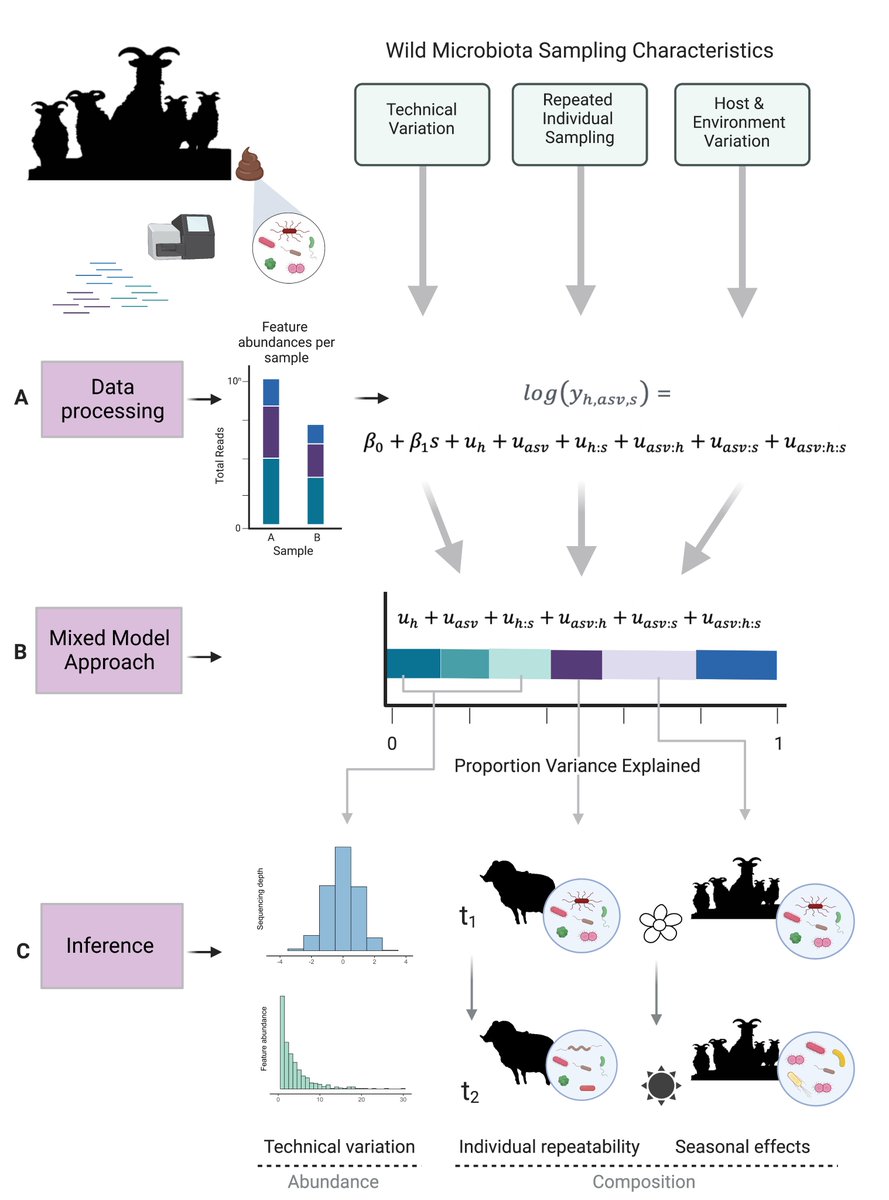

How can we model something as complex as the microbiome in noisy ecological contexts? Out now in EIC Jack Gilbert, our primer on mixed model approaches for characterising drivers of gut microbiome dynamics in wild animals! bit.ly/3Y6ZzXt

Congratulations to Lucy Binsted on passing her PhD viva yesterday! And thanks to @katcoyte and @tominator22 for examining! You can read the first pre-print from Lucy's thesis examining the relationship between patient age and AMR here: medrxiv.org/content/10.110…

"Easy come, easier go: mapping the loss of flagellar motility across the tree of life" 🌱🔬 We analysed ~11,000 bacterial genomes to examine how flagellar motility has evolved 🏊‍♂️➡️🛑 UNSW Biotechnology & Biomolecular Sciences UNSW Science biorxiv.org/content/10.110…