Rajan Vivek

@rajan__vivek

Member of Technical Staff @ContextualAI. MS CS + AI researcher @stanford. Prev @scale_AI @georgiatech

ID: 1676006311295848448

03-07-2023 23:13:55

39 Tweets

186 Followers

250 Following

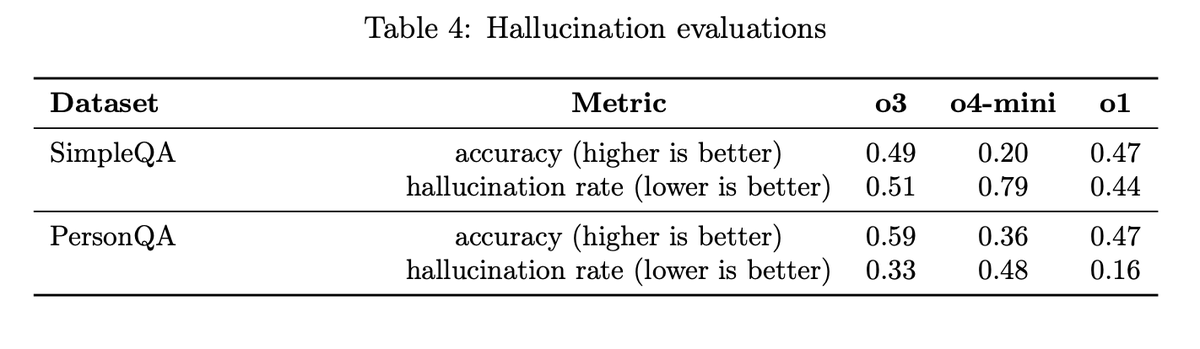

The hardest part of training models to never hallucinate is that they tend to become less helpful. We ran a lot of experiments navigating this trade-off and found some tricks that work well. Check out William Berrios's thread to see our post-training strategies!