Ram Shankar Siva Kumar

@ram_ssk

Data Cowboy @Microsoft. Yes, the job is as cool as it sounds. Tech Policy Fellow @UCBerkeley. @BKCHarvard Affiliate. ram-shankar.com

ID: 1195171140

https://www.amazon.com/Not-Bug-But-Sticker-Learning/dp/1119883989

18-02-2013 22:57:58

6.6K Tweets

3.3K Followers

2.2K Following

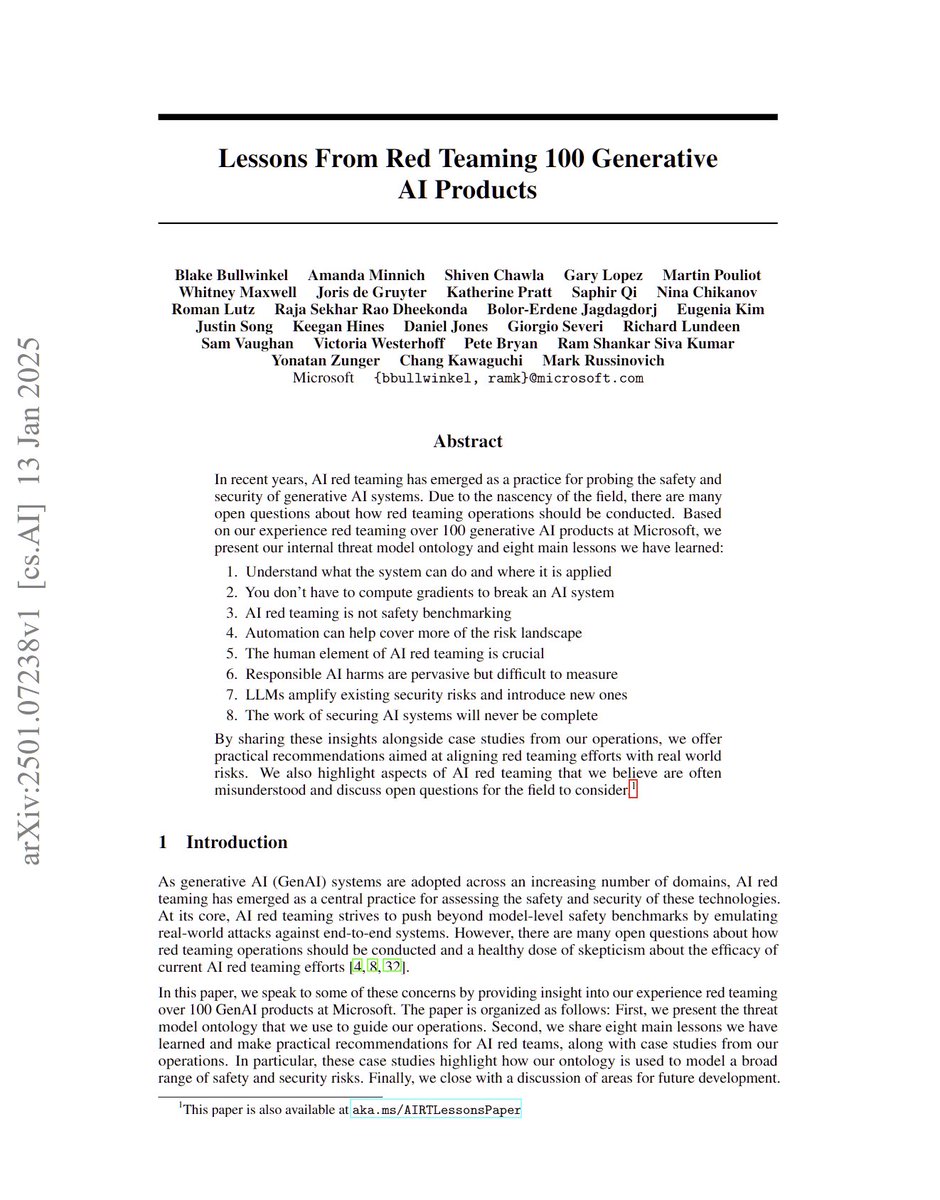

This is so exciting! Make sure to register for the AI Red Team Training (aka.ms/AIRedTeamTrain…) and submit your Cloud & AI bounties for multiplied rewards! Ram Shankar Siva Kumar, Tom Gallagher, Madeline Eckert, Rebecca Pattee

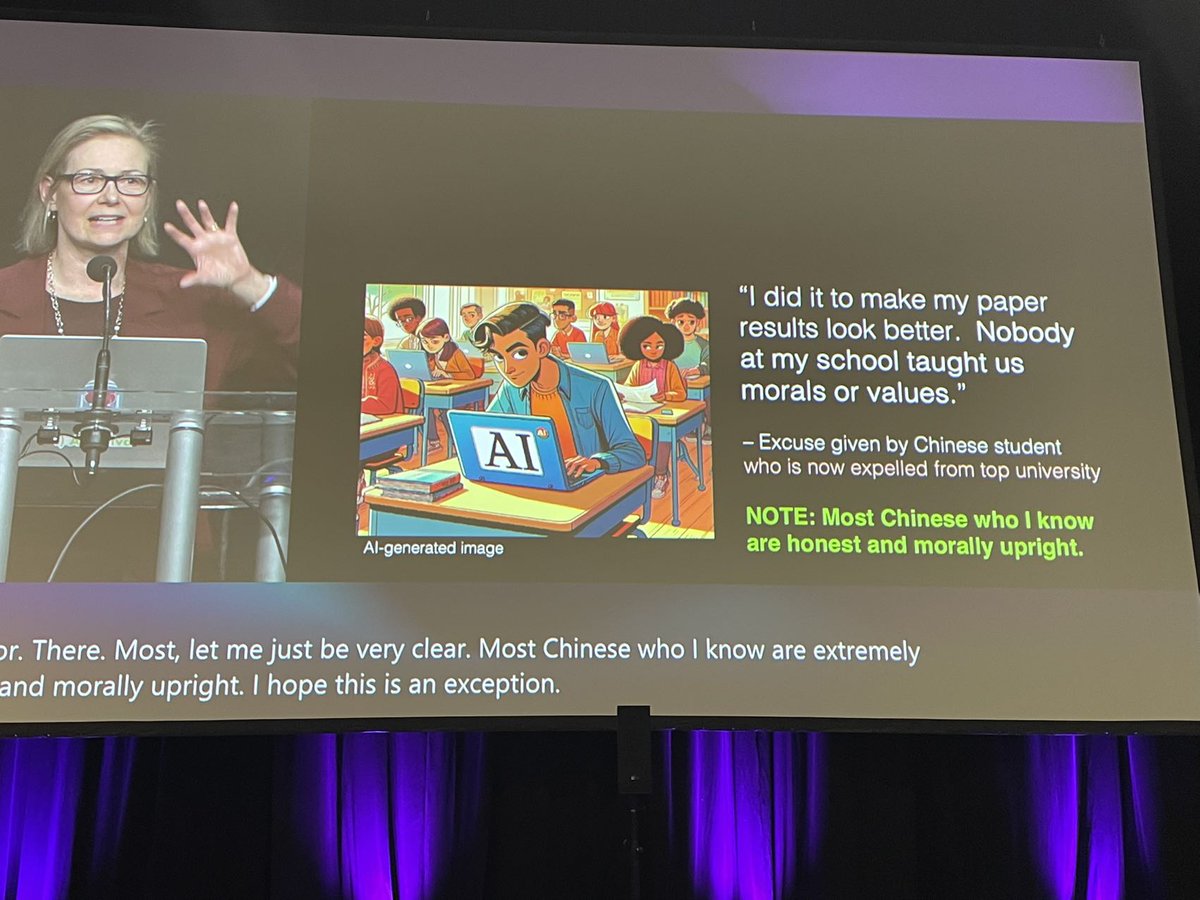

Mitigating racial bias from LLMs is a lot easier than removing it from humans! Can’t believe this happened at the best AI conference, NeurIPS Conference. We have ethical reviews for authors, but missed them for invited speakers? 😡

I'm shocked to see racism happening in academia again, at the best AI conference, NeurIPS Conference. Targeting specific ethnic groups to describe misconduct is inappropriate and unacceptable. NeurIPS Conference must take a stand. We call on Rosalind Picard, Massachusetts Institute of Technology (MIT), MIT Media Lab to retract and