Ramakanth

@ramakanth1729

Research Scientist @MetaAI

ID: 910945363

http://rama-kanth.com 28-10-2012 19:19:33

294 Tweets

471 Followers

443 Following

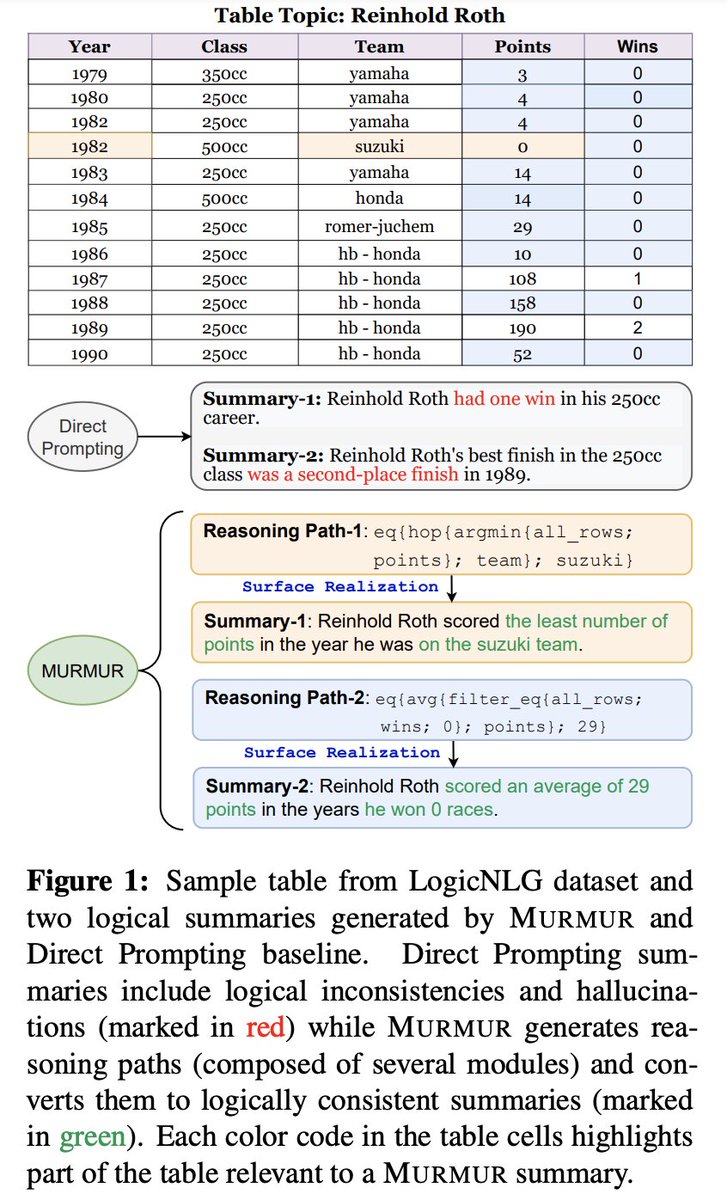

Introducing our new work, MURMUR, a neuro-symbolic modular reasoning approach to generate logical, faithful and diverse summaries from structured inputs w/ Swarnadeep Saha, Xinyan Velocity Yu, Mohit Bansal, Ramakanth

Our paper on 𝐩𝐫𝐨𝐦𝐩𝐭𝐢𝐧𝐠 𝐋𝐋𝐌𝐬 𝐰𝐢𝐭𝐡 𝐞𝐱𝐩𝐥𝐚𝐧𝐚𝐭𝐢𝐨𝐧𝐬 is accepted at Findings of #ACL2023NLP. Check out our poster at the 𝐍𝐋𝐑𝐒𝐄 𝐰𝐨𝐫𝐤𝐬𝐡𝐨𝐩 𝐨𝐧 𝐓𝐡𝐮𝐫𝐬𝐝𝐚𝐲. I won't be there in person 😢 but you can say hi to my advisor Greg Durrett 😆

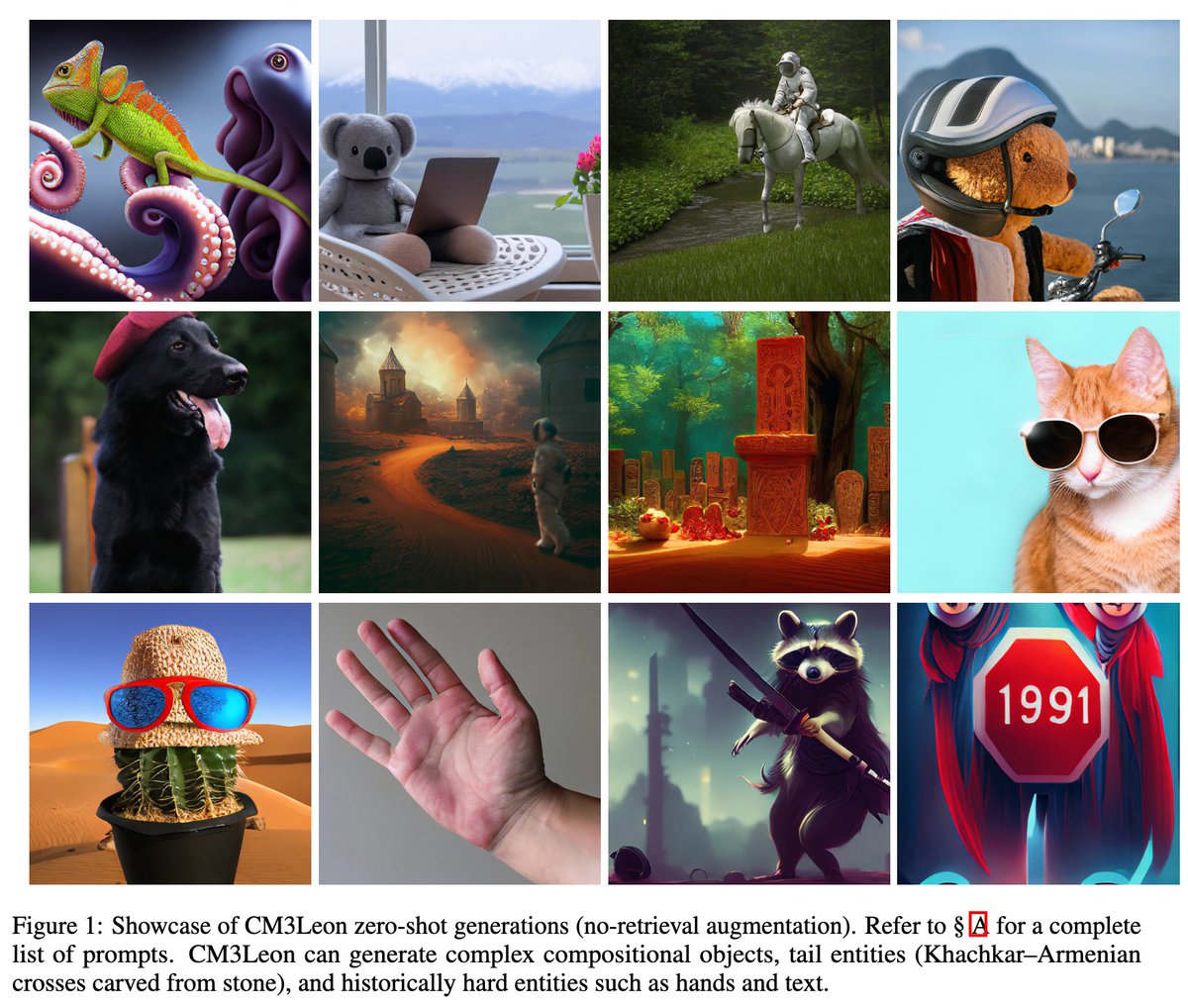

Excited to release our work from last year showcasing a stable training recipe for fully token-based multi-modal early-fusion auto-regressive models! arxiv.org/abs/2405.09818 Huge shout out to Armen Aghajanyan, Ramakanth, Luke Zettlemoyer, Gargi Ghosh, and other co-authors. (1/n)

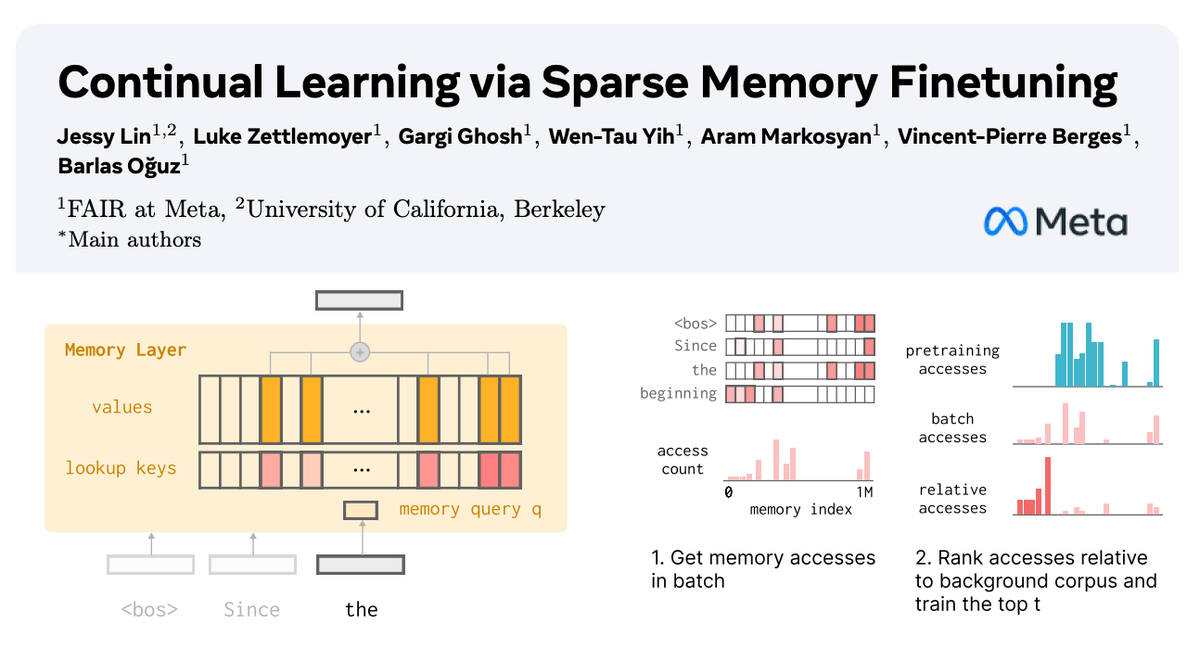

🧠 How can we equip LLMs with memory that allows them to continually learn new things? In our new paper with AI at Meta, we show how sparsely finetuning memory layers enables targeted updates for continual learning, w/ minimal interference with existing knowledge. While full