Subbarao Kambhampati (కంభంపాటి సుబ్బారావు)

@rao2z

AI researcher & teacher @SCAI_ASU. Former President of @RealAAAI; Chair of @AAAS Sec T. Here to tweach #AI. YouTube Ch: bit.ly/38twrAV Bsky: rao2z

ID: 2850858010

http://rakaposhi.eas.asu.edu 30-10-2014 02:20:55

10K Tweets

23K Followers

76 Following

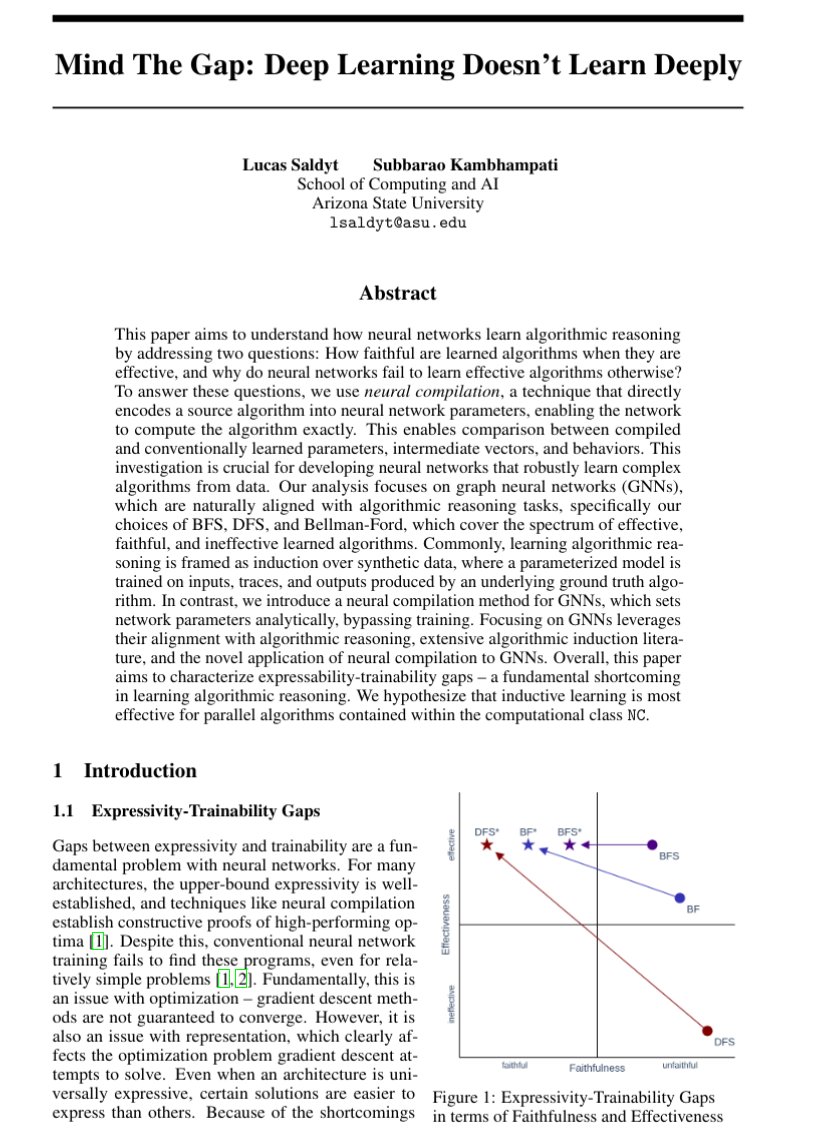

Neural networks can express more than they learn, creating expressivity-trainability gaps. Our paper, “Mind The Gap,” shows neural networks best learn parallel algorithms, and analyzes gaps in faithfulness and effectiveness.

Subbarao Kambhampati (కంభంపాటి సుబ్బారావు)

The transformer expressiveness results are often a bit of a red herring, as there tends to be a huge gap between what can be expressed in transformers and what can be learned with gradient descent. Mind the Gap, a new paper led by Lucas Saldyt, dives deeper into this issue 👇👇