Ronnie Clark

@ronnieclark__

Associate Professor @UniofOxford, Prev: Dyson Robotics Lab @imperialcollege. Computer Vision, Graphics, Machine Learning. pixl.cs.ox.ac.uk

ID: 3323459854

http://www.ronnie-clark.co.uk

13-06-2015 18:09:06

499 Tweets

3.3K Followers

1.1K Following

Jan @czarnowskij's DeepFactors (RA-L 2020) built code ideas into a unified SLAM system where the network's monocular depth prediction is a factor in a standard probabilistic factor graph. With Tristan Laidlow and Ronnie Clark from the Dyson Robotics Lab. Open source! 46/n x.com/czarnowskij/st…

Ever wondered how 3D vision can help us understand weather and climate? Check out our new work on reconstructing 3D cloud fields. We test our model using data from Raspberry Pi cameras observing a 10 km² area of the sky. Project: cloud-field.github.io w/ Jacob Lin, Ed Gryspeerdt

If you’re interested in LLM reasoning, check out our new paper on multi-agent LLM training (MALT), where we specialise a base model to create a generator (G), verifier (V), and refiner (R) that work together to solve problems. Paper: arxiv.org/pdf/2412.01928 Led by Sumeet Motwani

We trained a computer vision agent that, given a prompt + image, can choose the best specialist model to solve a task or even chain together models for more complex tasks. #CVPR2025 w/ Microsoft AI See more: arxiv.org/pdf/2412.09612

Motion blur typically breaks SLAM/SfM algorithms - but what if blur was actually the key to super-robust motion estimation? In our new work, Image as an IMU, Ronnie Clark and I demonstrate exactly how a single motion-blurred image can be used to our advantage. 🧵1/9

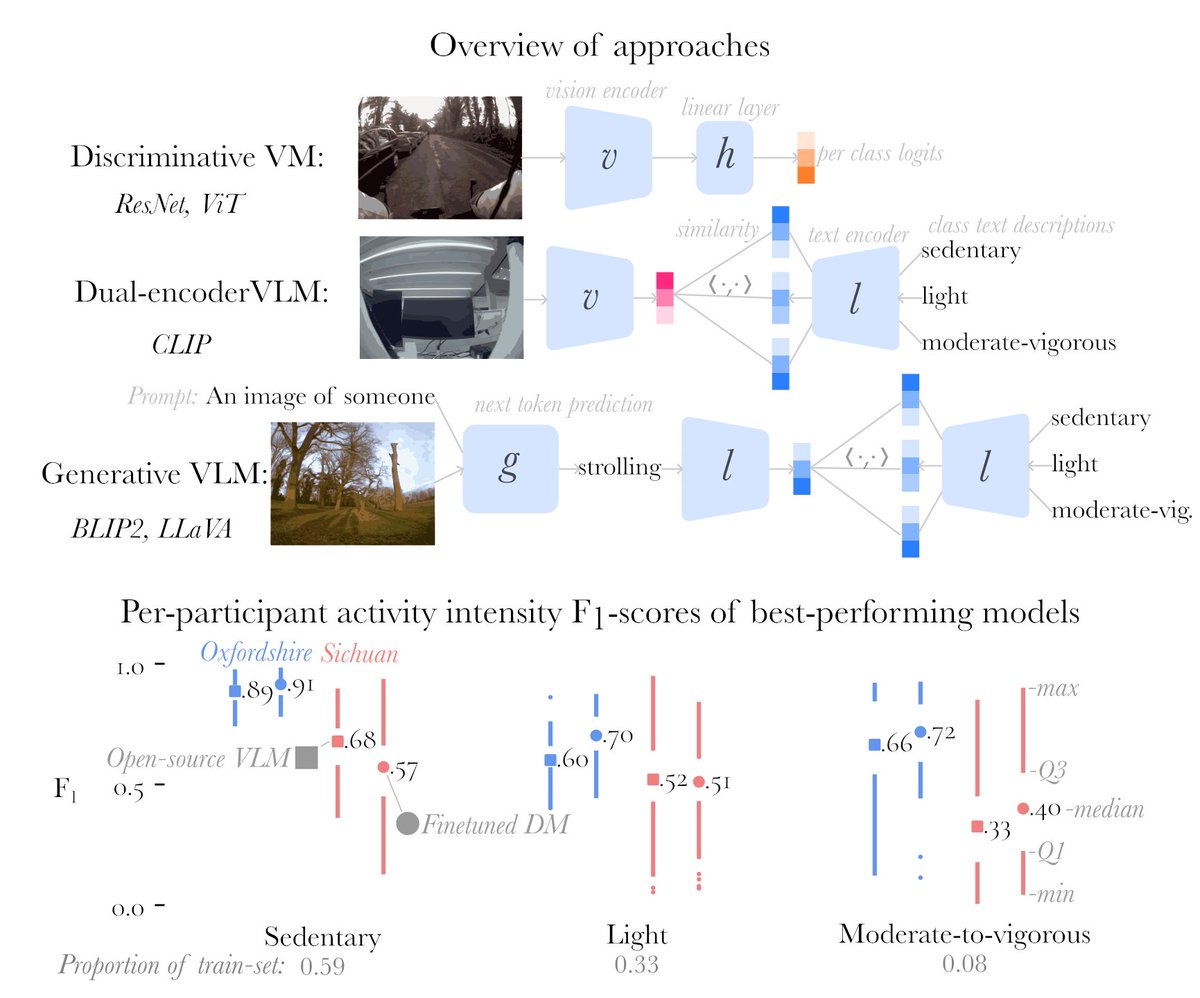

🚨 New preprint on arXiv from OxWearables and PIXL @ Oxford! Can vision-language models (VLMs) help automatically annotate physical activity in large real-world wearable datasets (⌚️+📷, 🇬🇧 + 🇨🇳)? 📄 arxiv.org/abs/2505.03374 🧵1/7