Rosie Zhao

@rosieyzh

PhD student with @hseas ML Foundations Group. Previously @mcgillu.

ID: 1189002232366284800

https://rosieyzh.github.io/

29-10-2019 02:13:28

36 Tweets

469 Followers

496 Following

Excited to share our recent work at #NeurIPS2023 on the nature of Simplicity Bias (SB) in 1-Hidden Layer Neural Networks (NNs) with Jatin Batra, Prateek Jain, and Praneeth Netrapalli. SB is known to be one of the reasons behind the brittleness of neural networks under distribution shift. (1/5)

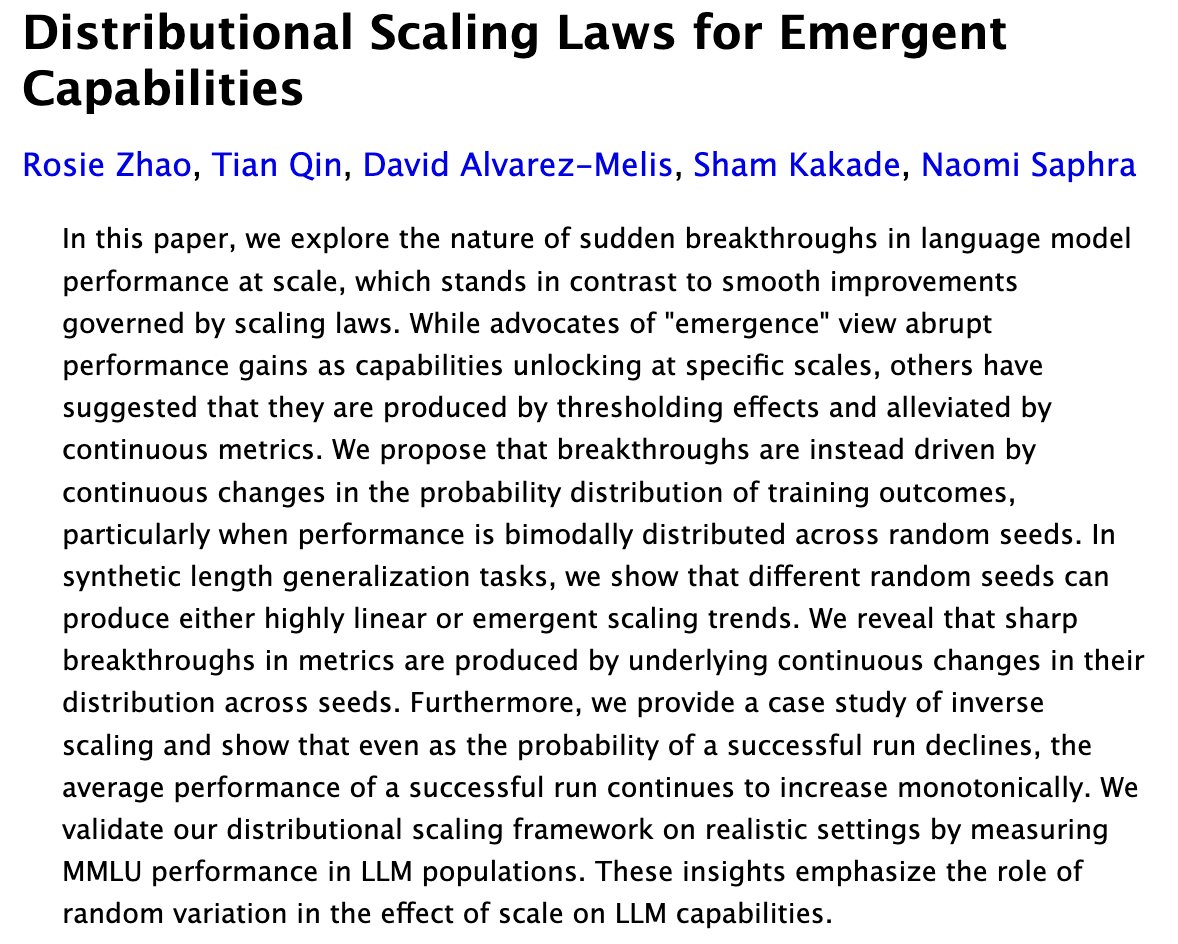

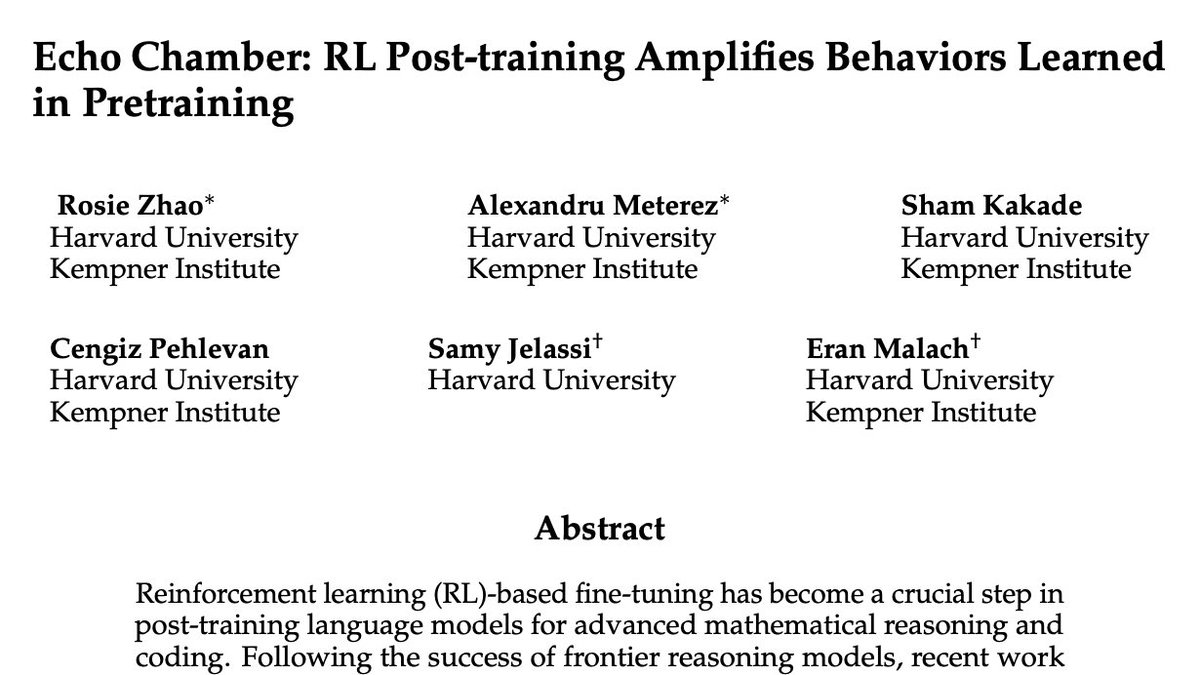

Are you attending #ICML2024? Be sure to check out our posters, workshops & presentations from the #KempnerInstitute community! Ada Fang, Blake Bordelon ☕️🧪👨💻, Cengiz Pehlevan, Riley Simmons-Edler, Naomi Saphra, Kanaka Rajan, David Brandfonbrener, Rosie Zhao, Eran Malach, Ryan P. Badman, Marinka Zitnik

Adam is similar to many algorithms, but cannot be effectively replaced by any simpler variant in LMs. The community is starting to get the recipe right, but what is the secret sauce? Robert M. Gower 🇺🇦 and I found that it has to do with the beta parameters and variational inference.