Tongzheng Ren

@rtz19970824

QR @citsecurities. CS PhD @UTCompSci. Previously QR Intern @citsecurities, Student Researcher @GoogleDeepMind. Interested in Applied Math & Prob.

ID: 4647878532

http://cs.utexas.edu/~tzren 25-12-2015 07:40:07

187 Tweets

181 Followers

258 Following

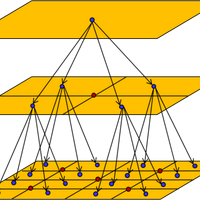

How does the depth of a transformer affect reasoning capabilities? New preprint by myself and Ashish Sabharwal shows that a little depth goes a long way to increase transformers’ expressive power. We take this as encouraging for further research on looped transformers!🧵

[New paper on in-context learning] "In-Context Linear Regression Demystified" (arxiv.org/abs/2503.12734). Joint work with Jianliang He, Xintian Pan, Siyu Chen. We establish a rather complete understanding of how one-layer multi-head attention solves in-context linear regression,

Check it out & see you in Vancouver (again)! arxiv.org/abs/2502.12089 Huge thanks to my amazing coauthors Antonio Sclocchi (*), Francesco Cagnetta, Pascal Frossard, and Matthieu Wyart from EPFL Physics of Complex Systems Lab LTS4 Johns Hopkins University SISSA Gatsby Computational Neuroscience Unit

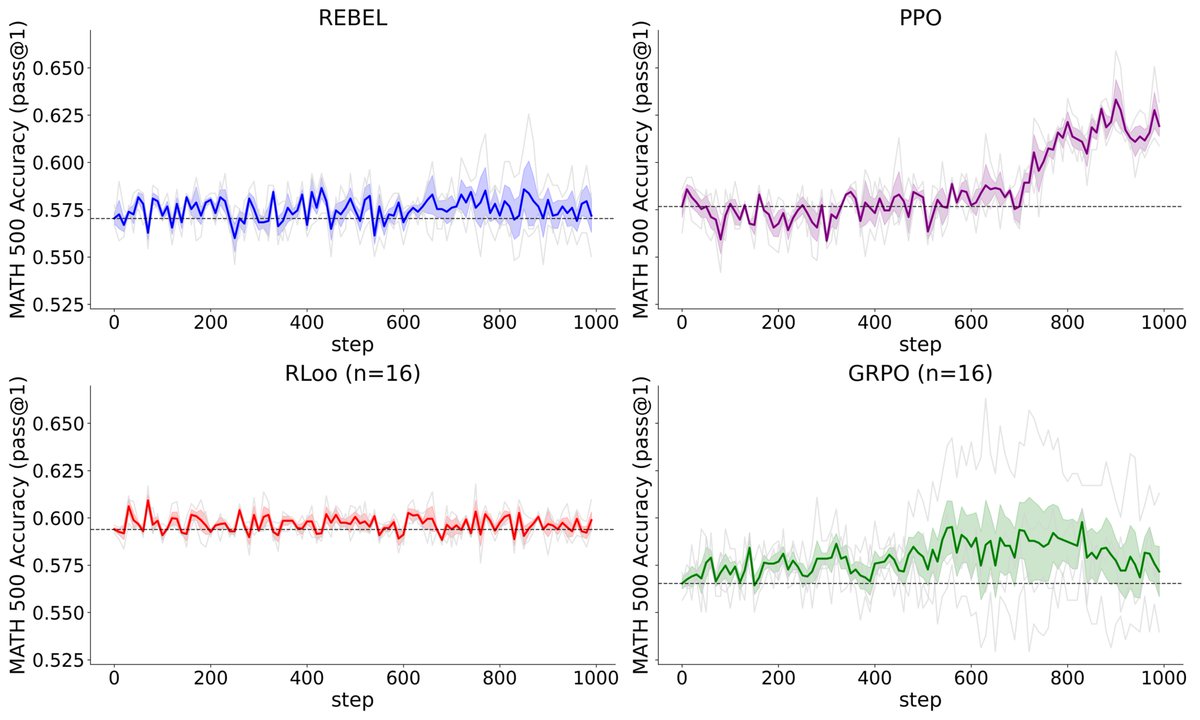

PPO vs. DPO? 🤔 Our new paper proves that it depends on whether your models can represent the optimal policy and/or reward. Paper: arxiv.org/abs/2505.19770 Led by Ruizhe Shi and Minhak Song