Simone Scardapane

@s_scardapane

I fall in love with a new #machinelearning topic every month 🙄 | Researcher @SapienzaRoma | Author: Alice in a diff wonderland sscardapane.it/alice-book

ID: 1235205731747540993

https://www.sscardapane.it/ 04-03-2020 14:09:51

1.1K Tweets

11.11K Followers

659 Following

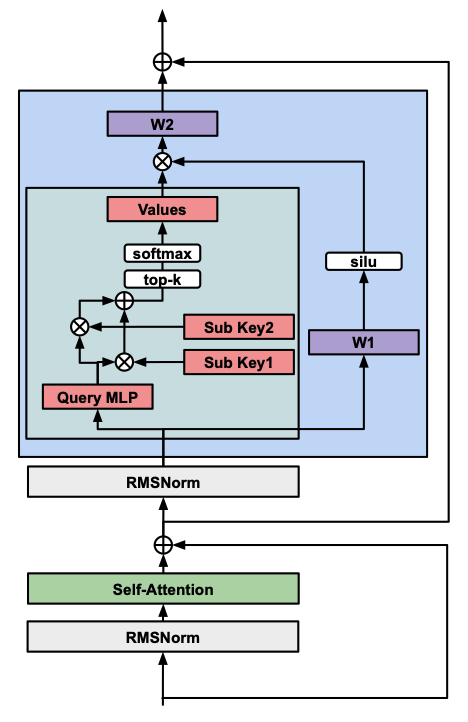

*Memory Layers at Scale* by Vincent-Pierre Berges, Barlas Oğuz, Daniel Haziza, Luke Zettlemoyer, Gargi Ghosh. They show how to scale memory layers - simple variants of attention with potentially unbounded parameter count, which can be viewed as associative memories. arxiv.org/abs/2412.09764
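To make the "associative memory" reading concrete, here is a minimal sketch of a sparse key-value lookup: score a query against every key, keep only the best matches, and return a softmax-weighted sum of their values. This is an illustration under my own assumptions, not the paper's implementation; the function name `memory_lookup`, the `top_k` parameter, and the plain dot-product scoring are all hypothetical choices.

```python
import math

def memory_lookup(query, keys, values, top_k=2):
    """Sparse key-value lookup: attend only to the top_k best-matching keys.

    Illustrative sketch only; names and scoring rule are assumptions.
    """
    # Dot-product score between the query and every key.
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    # Indices of the top_k highest-scoring keys.
    top = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Softmax restricted to the selected keys (shifted for numerical stability).
    m = max(scores[i] for i in top)
    exps = [math.exp(scores[i] - m) for i in top]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Output is the weighted sum of the selected values.
    dim = len(values[0])
    out = [sum(w * values[i][d] for w, i in zip(weights, top)) for d in range(dim)]
    return out, top, weights

keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
out, top, weights = memory_lookup([2.0, 0.0], keys, values, top_k=2)
```

Because only `top_k` entries are touched per query, the number of stored key-value pairs can grow without a matching growth in per-query compute, which is one way to read "potentially unbounded parameter count".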

*The GAN is dead; long live the GAN!* by Aaron Gokaslan, zonkedNGray, Volodymyr Kuleshov 🇺🇦, James Tompkin. A modern GAN baseline exploiting a "relativistic" loss (which avoids a minimax problem), gradient penalties, and newer backbones. openreview.net/forum?id=OrtN9…
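For readers unfamiliar with the term, a relativistic loss scores each fake sample relative to a paired real one instead of scoring them separately. The sketch below assumes a softplus (logistic) link and plain Python lists of critic outputs; the function names are hypothetical, and whether this matches the paper's exact formulation (including its gradient penalties, which are omitted here) is an assumption.

```python
import math

def softplus(t):
    # Numerically stable log(1 + exp(t)).
    return max(t, 0.0) + math.log1p(math.exp(-abs(t)))

def relativistic_losses(d_real, d_fake):
    """Pairwise relativistic losses from critic scores on paired real/fake samples.

    Illustrative sketch only: softplus link assumed, no gradient penalties.
    """
    n = len(d_real)
    # The critic wants real scores to exceed the paired fake scores.
    d_loss = sum(softplus(f - r) for r, f in zip(d_real, d_fake)) / n
    # The generator wants the opposite ordering.
    g_loss = sum(softplus(r - f) for r, f in zip(d_real, d_fake)) / n
    return d_loss, g_loss

d_loss, g_loss = relativistic_losses([2.0, 1.5], [-1.0, 0.5])
```

Both losses depend only on the difference between paired scores, so shifting every critic output by a constant changes nothing.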

Happy to share I just started as associate professor at Sapienza Università di Roma! I have now reached my perfect thermodynamical equilibrium. 😄 Also, ChatGPT's idea of me is infinitely cooler so I'll leave it here to trick people into giving me money.

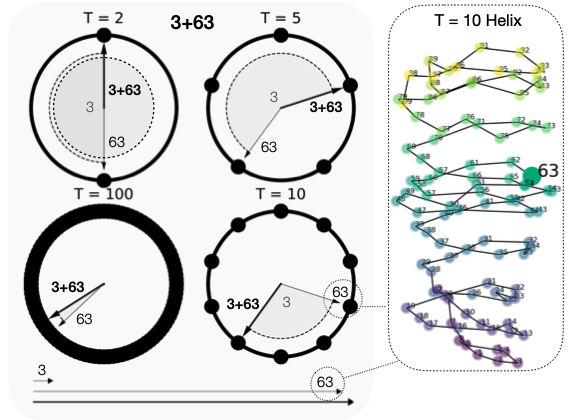

*Language Models Use Trigonometry to Do Addition* by Subhash Kantamneni, Max Tegmark. Really interesting paper that reverse-engineers several LLMs to understand how single-token addition is performed in the internal embeddings. arxiv.org/abs/2502.00873
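As a toy illustration of how addition can be done with trigonometry, the sketch below places each integer on a circle and adds two numbers by composing their rotations with the angle-addition identities. The single period of 100 and all function names are hypothetical choices for this example; the representation studied in the paper is richer than this.

```python
import math

def encode(n, period):
    # Represent n as a point on the unit circle with the given period.
    angle = 2 * math.pi * n / period
    return math.cos(angle), math.sin(angle)

def add_on_circle(a_vec, b_vec):
    # Angle-addition identities: rotate a's point by b's angle.
    ca, sa = a_vec
    cb, sb = b_vec
    return ca * cb - sa * sb, sa * cb + ca * sb

def decode(vec, period):
    # Recover the residue modulo `period` from the point's angle.
    angle = math.atan2(vec[1], vec[0])
    return round(angle * period / (2 * math.pi)) % period

def add_mod(a, b, period=100):
    # Addition modulo `period`, computed purely through sines and cosines.
    return decode(add_on_circle(encode(a, period), encode(b, period)), period)

result = add_mod(27, 48)
```

A single circle only recovers the sum modulo its period, so `add_mod(70, 45)` yields 15 rather than 115.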

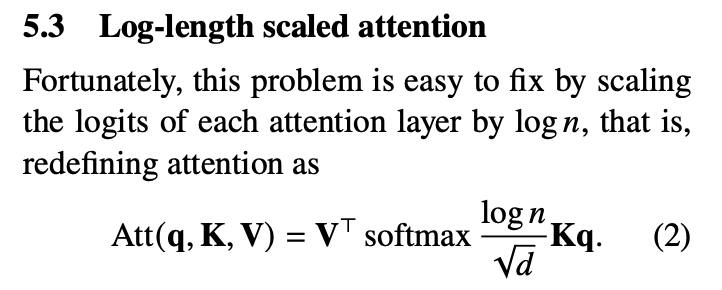

Simone Scardapane That was covered by David Chiang and Peter Cholak in 2022 in Overcoming a Theoretical Limitation of Self-Attention. arxiv.org/abs/2202.12172

*Universal Sparse Autoencoders* by Harry Thasarathan, Thomas Fel, Matthew Kowal, Kosta Derpanis. They train a shared SAE latent space on several vision encoders at once, showing, e.g., how the same concept activates in different models. arxiv.org/abs/2502.03714

*Recursive Inference Scaling* by Ibrahim Alabdulmohsin | إبراهيم العبدالمحسن, Xiaohua Zhai. Recursively applying the first part of a model can be a strong compute-efficient baseline in many scenarios, when evaluating on a fixed compute budget. arxiv.org/abs/2502.07503

*Rethinking Early Stopping: Refine, Then Calibrate* by Eugène Berta, Leshem (Legend) Choshen 🤖🤗, David Holzmüller, Francis Bach. Doing early stopping on the "refinement loss" (original loss modulo calibration loss) is beneficial for both accuracy and calibration. arxiv.org/abs/2501.19195

*ConceptAttention: Diffusion Transformers Learn Highly Interpretable Features* by Alec Helbling, Tuna Meral, Ben Hoover, Pinar Yanardag 🚀 CVPR2025, Duen Horng "Polo" Chau. Creates saliency maps for diffusion ViTs by propagating concepts (e.g., car) and repurposing cross-attention layers. arxiv.org/abs/2502.04320

*From superposition to sparse codes: interpretable representations in NNs* by David Klindt, Nina Miolane 🦋 @ninamiolane.bsky.social, Patrik Reizinger, Charlie O'Neill. Nice overview on the linearity of NN representations and the use of sparse coding to recover interpretable activations. arxiv.org/abs/2503.01824

*Differentiable Logic Cellular Automata* by Pietro Miotti, Eyvind Niklasson, Ettore Randazzo, Alex Mordvintsev. Combines differentiable cellular automata and logic circuits to learn recurrent circuits exhibiting complex (learned) behavior. google-research.github.io/self-organisin…

*Generalized Interpolating Discrete Diffusion* by Dimitri von Rütte, Antonio Orvieto & al. A class of discrete diffusion models combining standard masking with uniform noise to allow the model to potentially "correct" previously wrong tokens. arxiv.org/abs/2503.04482

*NoProp: Training Neural Networks without Backpropagation or Forward-propagation* by Yee Whye Teh et al. They use a neural network to define a denoising process over the class labels, which allows them to train the blocks independently (i.e., "no backprop"). arxiv.org/abs/2503.24322