Sagnik Mukherjee

@saagnikkk

CS PhD student at @IllinoisCDS @convai_uiuc

ID: 1617896838950187009

https://sagnikmukherjee.github.io/

24-01-2023 14:47:35

46 Tweets

107 Followers

159 Following

Interesting finding from my colleagues at ConvAI@UIUC (Beyza Bozdag and Ishika Agarwal): languages store their own knowledge.

LLMs have opened up a great variety of research topics, and this survey on persuasion by my awesome lab mate Beyza Bozdag is definitely a must-read 😉

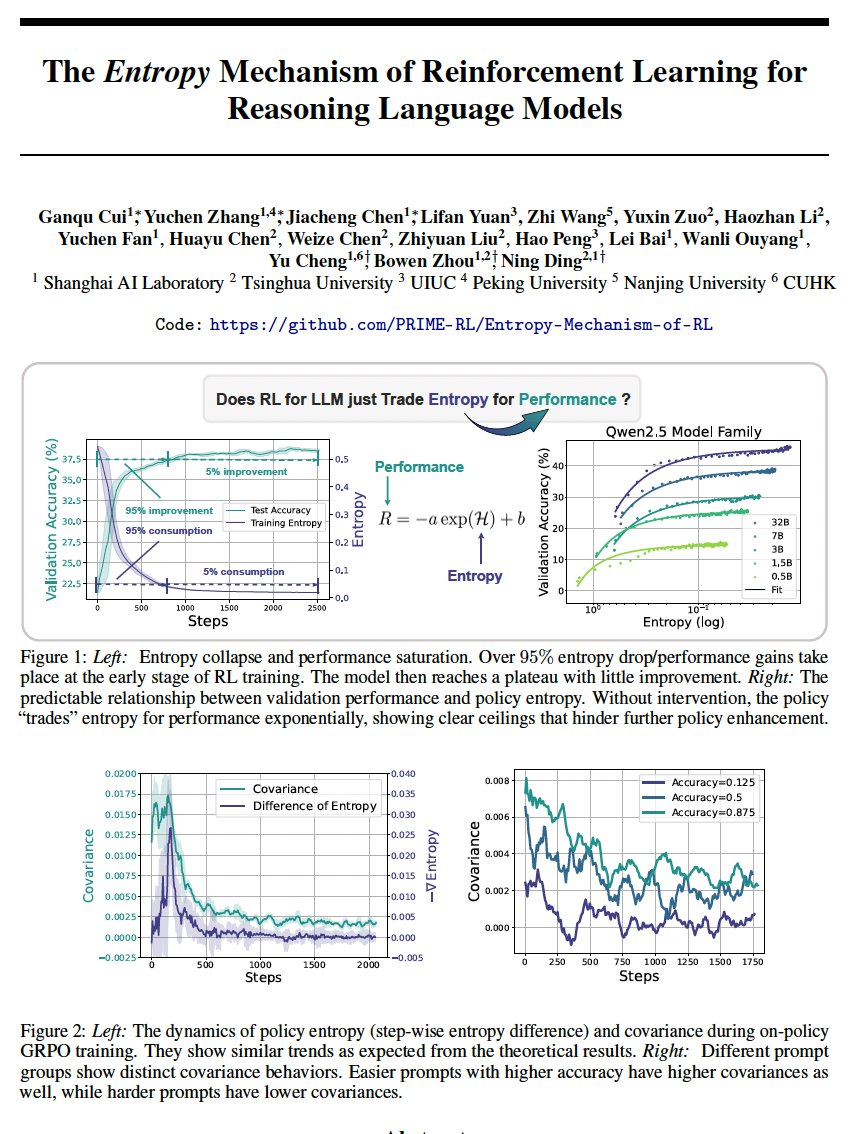

Xinyu Zhu (@tianhongzxy):

🔥The debate’s been wild: How does the reward in RLVR actually improve LLM reasoning?🤔

🚀Introducing our new paper👇

💡TL;DR: Just penalizing incorrect rollouts❌, with no positive reward needed, can boost LLM reasoning, sometimes even better than PPO/GRPO!

🧵[1/n]

![Xinyu Zhu (@tianhongzxy) on Twitter photo](https://pbs.twimg.com/media/GsdM6V9b0AE7zx8.png)