Samira Abnar

@samira_abnar

Apple ML research

ID: 1798519229635014656

06-06-2024 00:56:49

40 Tweets

224 Followers

182 Following

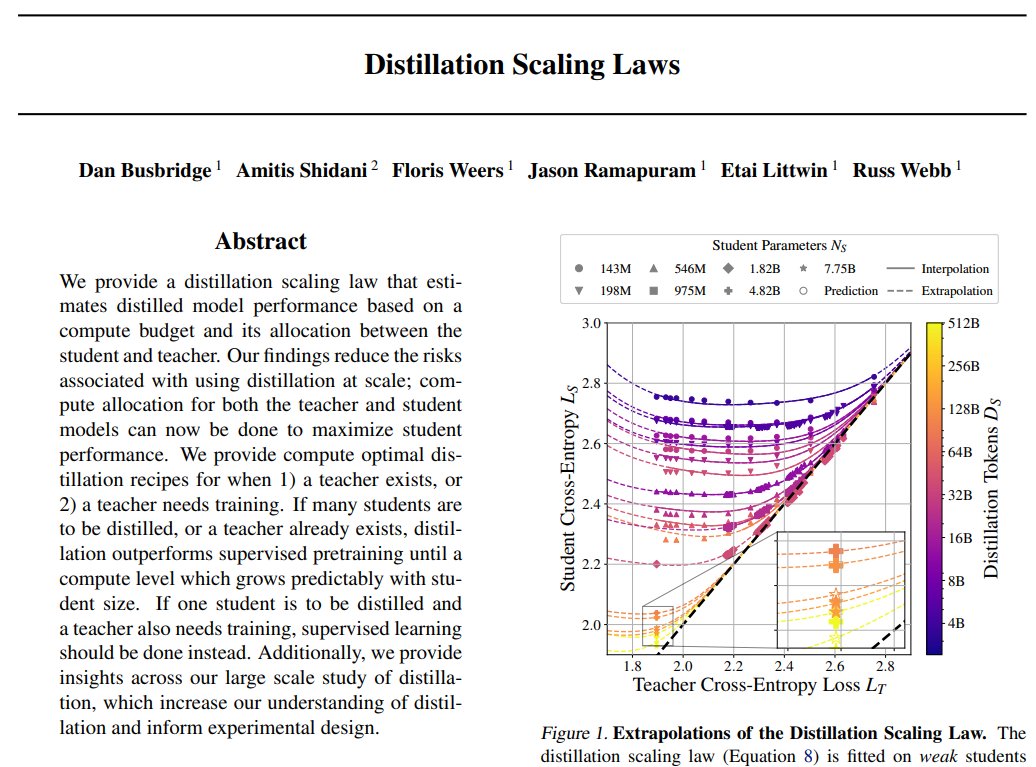

Mixture of experts is an interesting architecture, or so Samira Abnar told me when I joined the project last year. After some brilliant work from Harshay Shah and Samira, we have a scaling law paper!

Really interesting work studying the optimal sparsity levels for MoEs under different compute budgets, led by the powerful Samira Abnar, Harshay Shah, and Vimal Thilak🦉🐒

Thuerey Group at TUM Nice work and cool results! FWIW, you can train a diffusion model directly in data space (and with general geometries) without needing to compress the data into a latent space: arxiv.org/abs/2305.15586. Perhaps Fig. 17 is the closest to your experimental setting (cc Ahmed Elhag)

Particularly in the last few months, as test-time compute scaling is taking off, understanding parameter and FLOP trade-offs has become increasingly important. This work, led by Samira Abnar with fantastic contributions from Harshay Shah, provides a deep dive into this topic.

Check out this post about the research from Apple that will be presented at ICLR 2025 in 🇸🇬 this week. I will be at ICLR presenting some of our work (led by Samira Abnar) at the Sparsity in LLMs (SLLM) workshop. Happy to chat about JEPAs as well!

We have two awesome new videos on MLX at #WWDC25 this year:

- Learn all about MLX.
- Learn all about running LLMs locally with MLX.

Angelos Katharopoulos, Shashank Prasanna, myself, and others worked super hard to make these. Check them out and hope you find them useful!

Eeshan Gunesh Dhekane (@eeshandhekane): Parameterized Transforms 🚀

Here is a new tool: a modular and extensible implementation of torchvision-based image augmentations that provides access to their parameterization. [1/5]