Adam Santoro

@santoroai

Research Scientist in artificial intelligence at DeepMind

ID: 733640801914343425

20-05-2016 12:49:32

1.1K Tweets

9.9K Followers

227 Following

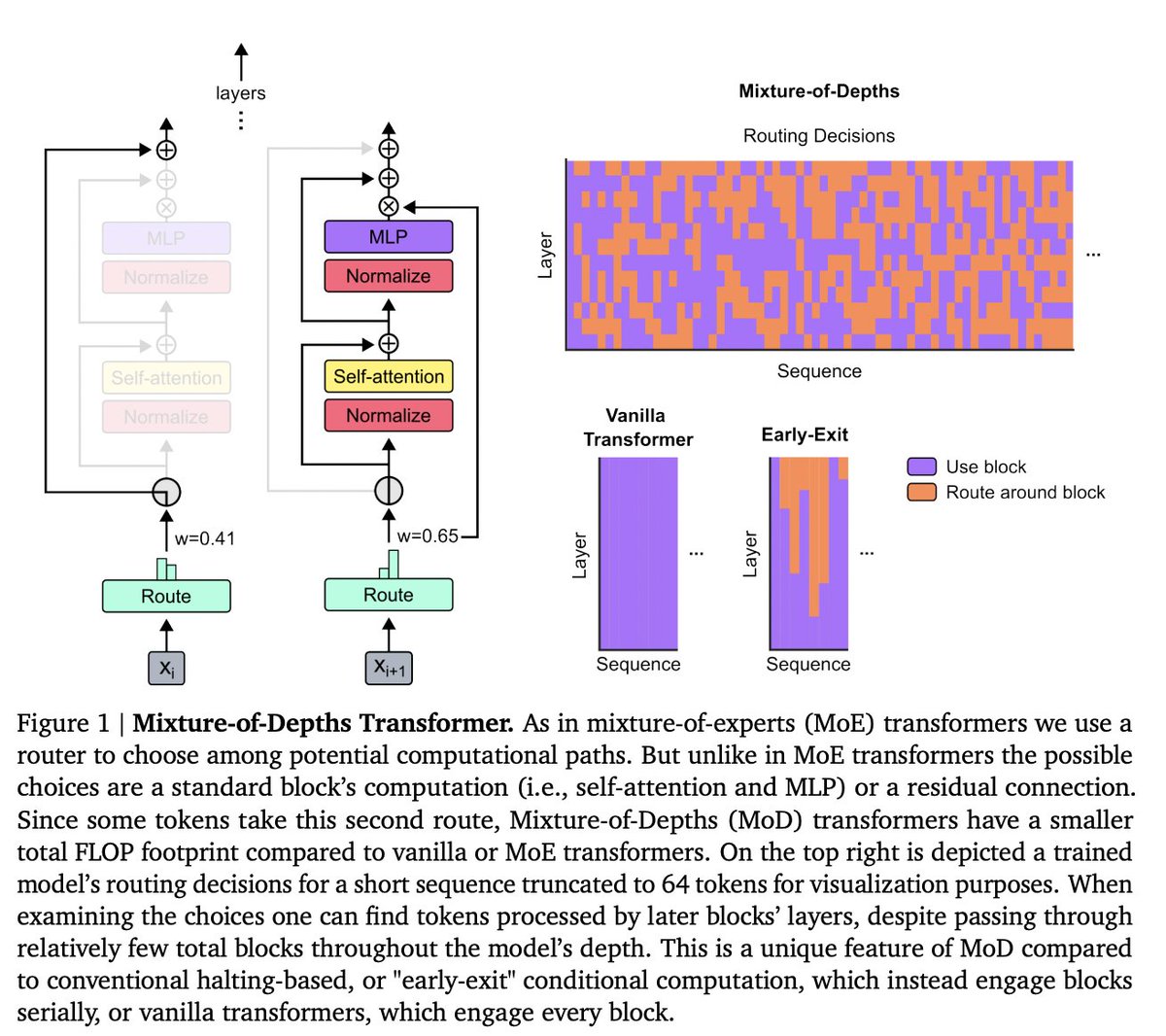

Big fan of the work of Adam Santoro and others. Glad Google decided to finally release it! arxiv.org/abs/2404.02258

I have implemented Mixture-of-Depths, and it shows a significant memory reduction during training and a 10% speed increase. I will verify whether it achieves the same quality with 12.5% active tokens. github.com/thepowerfuldee… Thanks to Alex Hägele for the initial code.