Sara Hooker

@sarahookr

I lead @Cohere_Labs. Formerly Research @Google Brain @GoogleDeepmind. ML Efficiency at scale, LLMs, ML reliability. Changing spaces where breakthroughs happen.

ID: 731538535795163136

https://www.sarahooker.me/ 14-05-2016 17:35:53

9.9K Tweets

45.45K Followers

8.8K Following

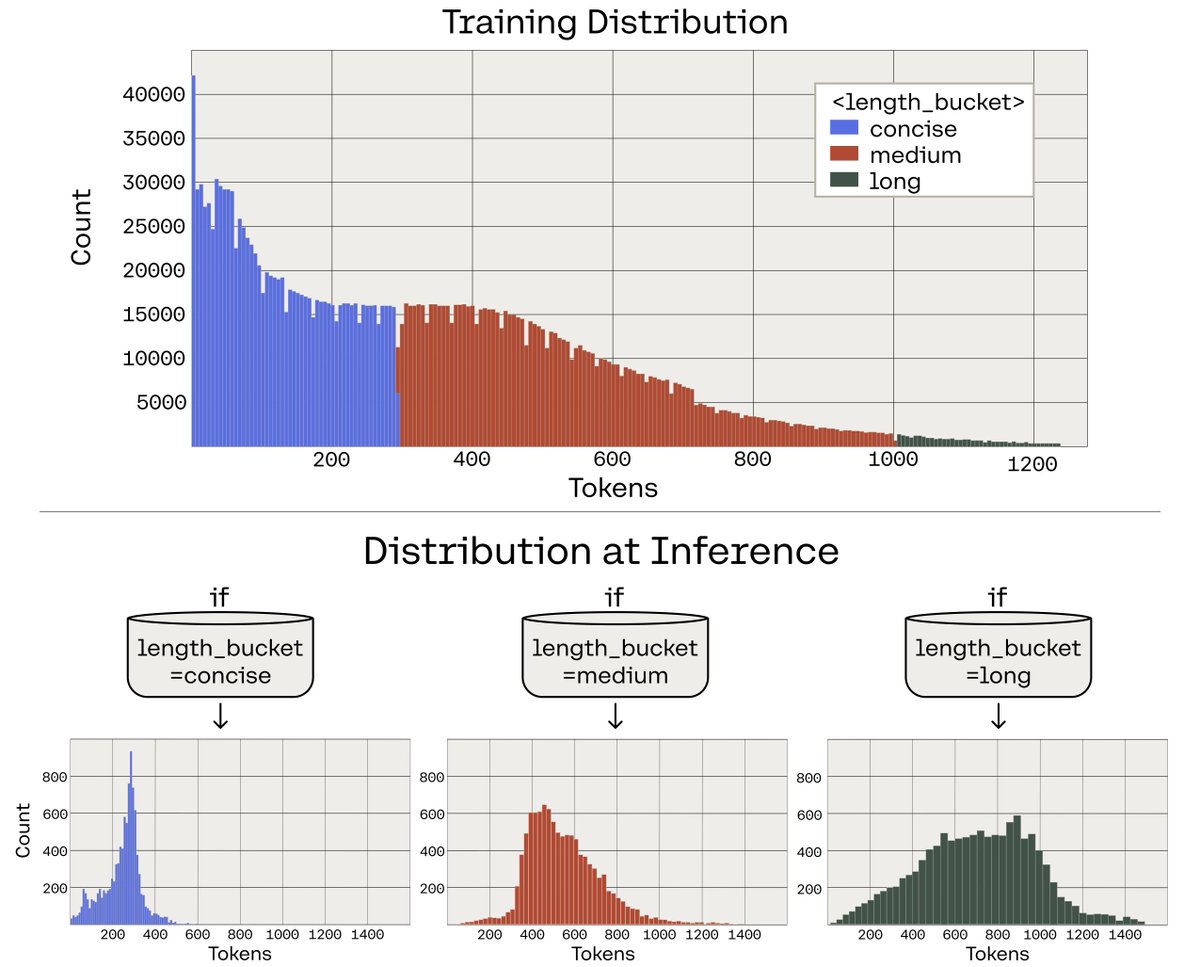

🚨 Wait, adding simple markers 📌 during training unlocks outsized gains at inference time?! 🤔 🚨 Thrilled to share our latest work at Cohere Labs: “Treasure Hunt: Real-time Targeting of the Long Tail using Training-Time Markers” that explores this phenomenon! Details in 🧵 ⤵️

Can we train models for better inference-time control instead of over-complex prompt engineering❓ Turns out the key is in the data — adding fine-grained markers boosts performance and enables flexible control at inference🎁 Huge congrats to Daniel D'souza for this great work

RLHF is powerful but slow. What if it could be 70% faster? ⚡️Michael Noukhovitch shows how with Asynchronous RLHF. Join his talk with us on Thursday, June 26th to learn how he's making LLMs more efficient. Thanks to Alif and Abrar Rahman - d/acc for organizing this session 👏

For the community, by the community. We’re very excited to share our completely free Summer School by the Cohere Labs Community. A unique learning experience, built together. Register now!

The Cohere Labs ML Summer School is a fantastic opportunity for anyone looking to gain hands-on experience with cutting-edge research, explore advanced topics, and connect with awesome researchers and collaborators at Cohere Labs.

Amir Efrati Stephanie Palazzolo Worth reading this research, which showed it has already been turned into cheat-slop and that Meta was one of the worst culprits for gaming it x.com/singhshiviii/s…

Papers ✅ Park ⏳ Tomorrow at Trinity Bellwoods, come learn about Cohere Labs' new paper on improving model performance on rare cases.