Scientific Methods for Understanding Deep Learning

@scifordl

Workshop @ NeurIPS 2024.

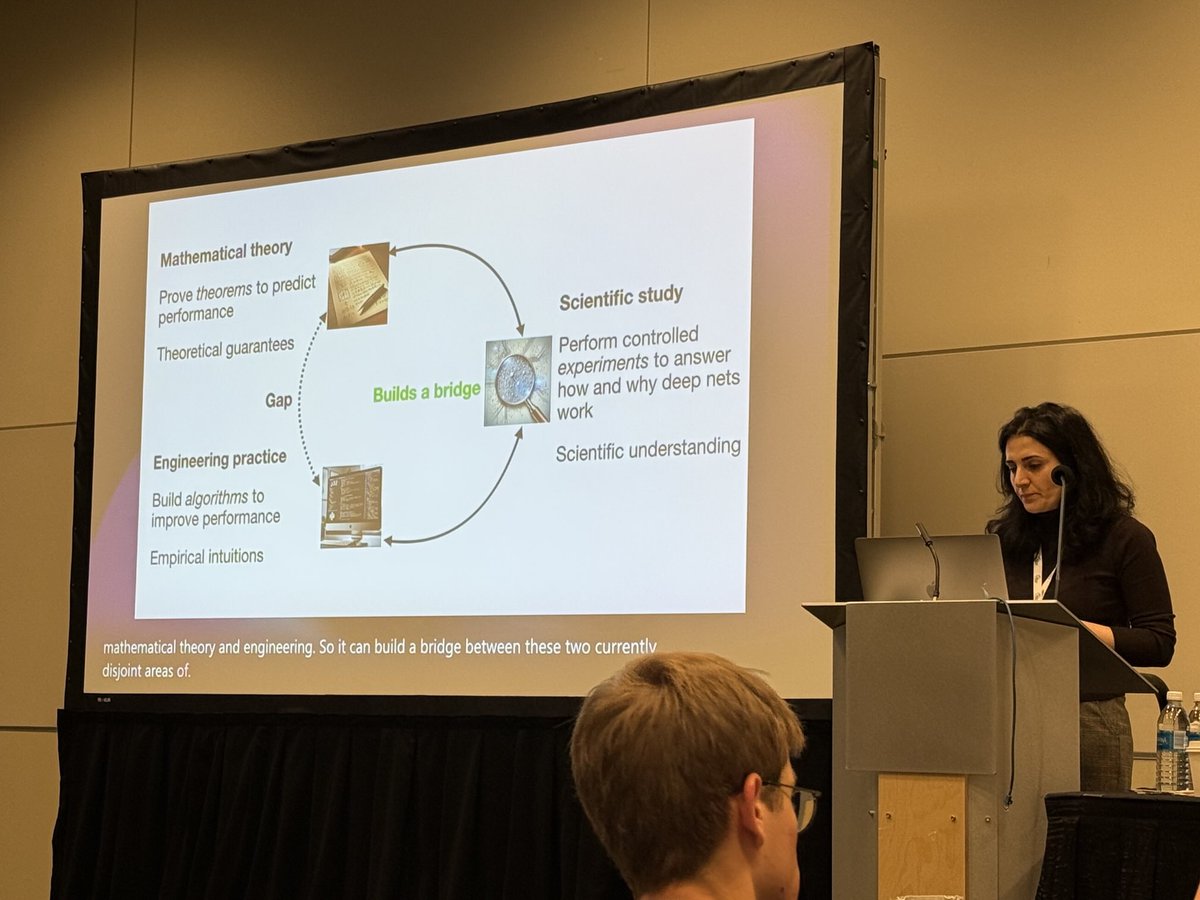

Using controlled experiments to test hypotheses about the inner workings of deep networks.

ID: 1818772037906706432

https://scienceofdlworkshop.github.io/ 31-07-2024 22:13:49

39 Tweets

338 Followers

21 Following

We need more of *Science of Deep Learning* in the major ML conferences. This year’s NeurIPS Conference workshop Scientific Methods for Understanding Deep Learning on this topic is just starting, and I hope it is NOT the last edition!!!

Now on to Surya Ganguli's talk, investigating creativity in diffusion models, i.e. why diffusion models fail to learn the score function and why that's a good thing.

Can LLMs plan? The answer might be in Hanie Sedghi's talk. Only one way to know: join us in West meeting rooms 205-207.

We are back with a contributed talk from Christos Perivolaropoulos about some intriguing properties of softmax in Transformer architectures!

It's time for our panel session! Surya Ganguli, Eero Simoncelli, Andrew Gordon Wilson, Yasaman Bahri, and Mikhail Belkin discuss science, understanding, and deep learning. 👀 Hosted by our amazing organizers Zahra Kadkhodaie and Florentin Guth.

What a great panel at Scientific Methods for Understanding Deep Learning, moderated by Zahra Kadkhodaie and Florentin Guth! #NeurIPS2024

Congratulations to the winners of our debunking challenge, François Charton and Julia Kempe, for "Emergent properties with repeated examples" 🥳🎉

One epoch is not all you need! Our paper, "Emergent properties with repeated examples", with Julia Kempe, won the NeurIPS24 Debunking Challenge, organized by the Science for Deep Learning workshop (Scientific Methods for Understanding Deep Learning): arxiv.org/abs/2410.07041