JuYoung Suk

@scott_sjy

MS student at KAIST AI

ID: 1636010791135744004

15-03-2023 14:26:17

49 Tweets

332 Followers

1.1K Following

Glad to share that Prometheus 2 was accepted to the main track of EMNLP 2024! See you in Miami ☺️

New preprint 📄 (with Jinho Park): Can neural nets really reason compositionally, or do they just match patterns? We present the Coverage Principle, a data-centric framework that predicts when pattern-matching models will generalize (validated on Transformers). 🧵👇

🚨 New paper co-led with Byeongguk Jeon 🚨 Q. Can we adapt language models, which are trained to predict the next token, to reason at the sentence level? I think LMs operating at a higher level of abstraction would be a promising path toward advancing their reasoning, and I am excited to share our

![Hyeonbin Hwang (@ronalhwang) on Twitter photo 🚨 New LLM Reasoning Paper 🚨

Q. How can LLMs self-improve their reasoning ability?

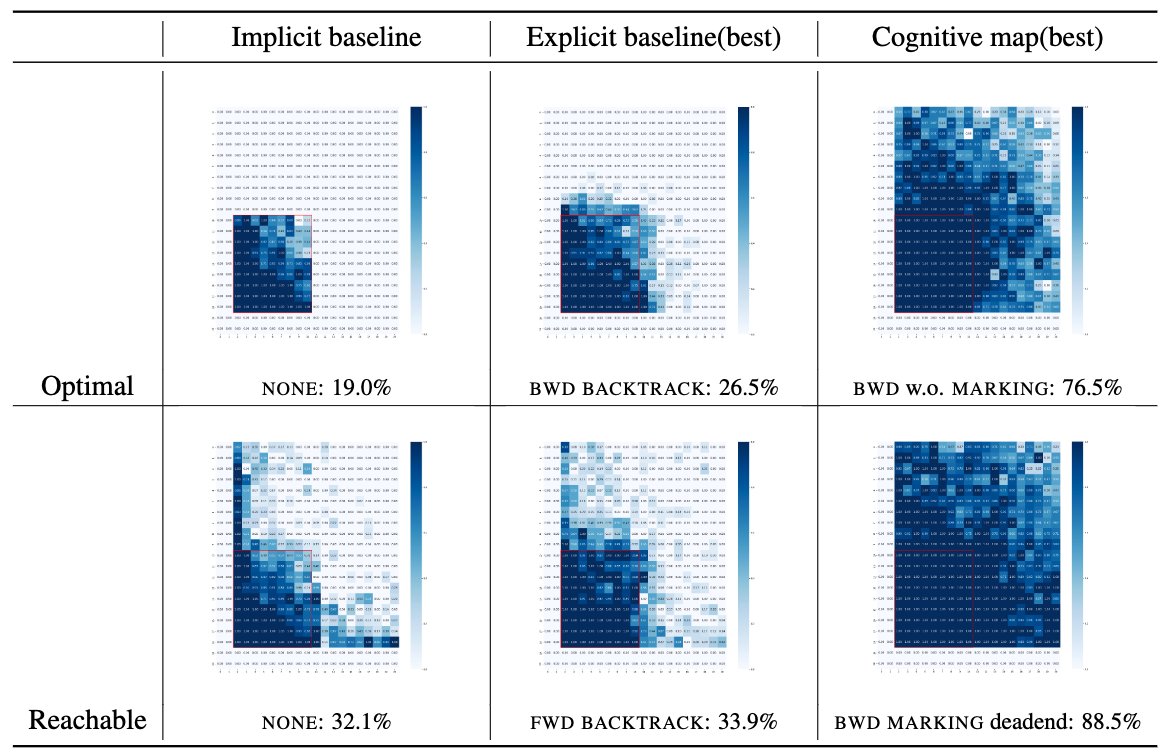

⇒ Introducing Self-Explore⛰️🧭, a training method specifically designed to help LLMs avoid reasoning pits by learning from their own outputs! [1/N] 🚨 New LLM Reasoning Paper 🚨

Q. How can LLMs self-improve their reasoning ability?

⇒ Introducing Self-Explore⛰️🧭, a training method specifically designed to help LLMs avoid reasoning pits by learning from their own outputs! [1/N]](https://pbs.twimg.com/media/GLX07fHbAAAO9l4.jpg)

MiyoungKo (@miyoung_ko): 📢 Excited to share our latest paper on the reasoning capabilities of LLMs! Our research dives into how these models recall and use factual knowledge while solving complex questions. [🧵1 / 10]

arxiv.org/abs/2406.19502

![MiyoungKo (@miyoung_ko) on Twitter photo](https://pbs.twimg.com/media/GRZsvURbkAA4Rau.jpg)