Seungone Kim @ NAACL2025

@seungonekim

Ph.D. student @LTIatCMU and incoming intern at @AIatMeta working on (V)LM Evaluation & Systems that Improve with (Human) Feedback | Prev: @kaist_ai @yonsei_u

ID: 1455179335548035074

https://seungonekim.github.io/ 01-11-2021 14:26:25

733 Tweets

1.1K Followers

888 Following

Turns out that reasoning models not only excel at solving problems but are also excellent confidence estimators - an unexpected side effect of long CoTs! This reminds me that smart ppl are good at determining what they know & don't know👀 Check out Dongkeun Yoon's post!

🚨 Lucie-Aimée Kaffee and I are looking for a junior collaborator to research the Open Model Ecosystem! 🤖 Ideally, someone w/ AI/ML background, who can help w/ annotation pipeline + analysis. docs.google.com/forms/d/e/1FAI…

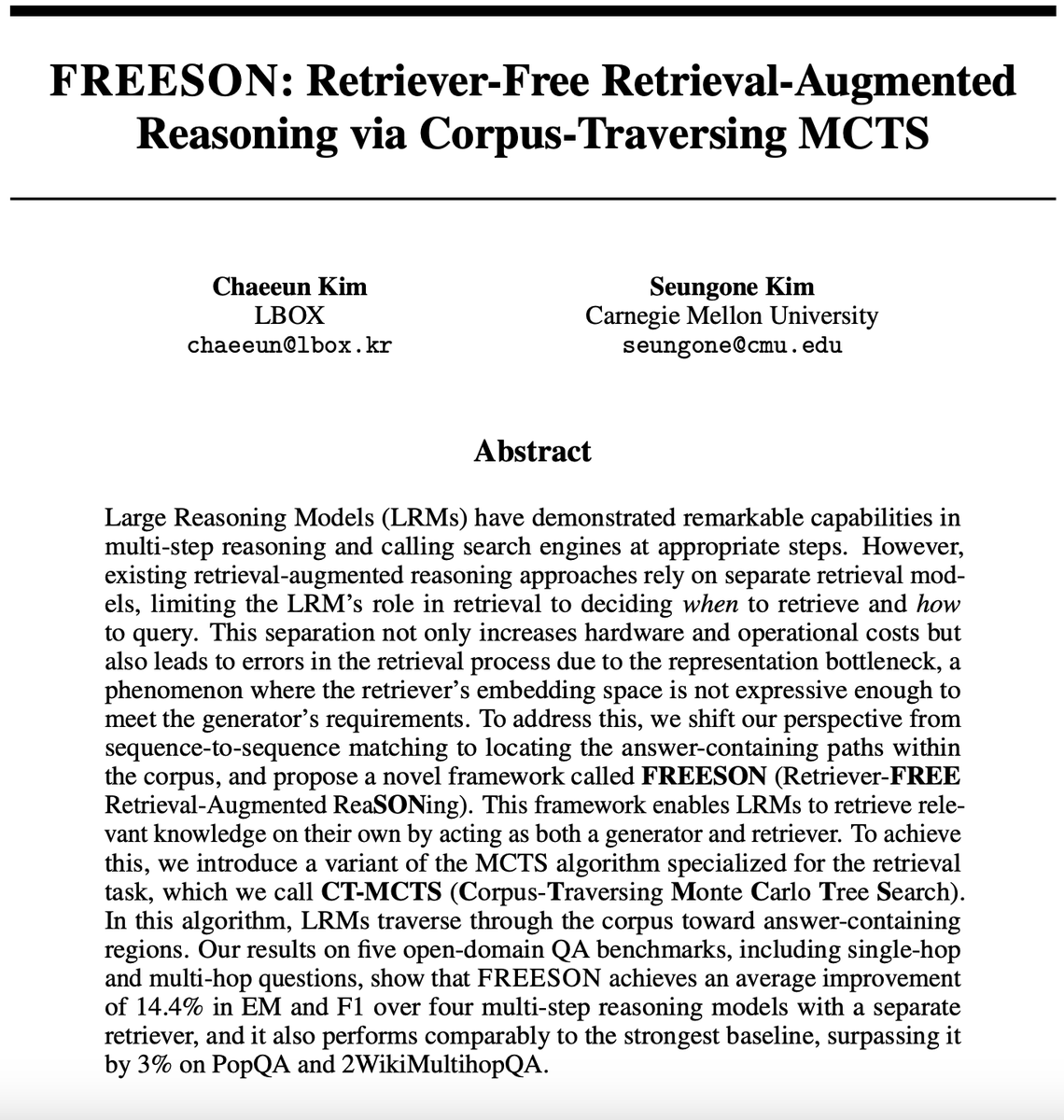

Within the RAG pipeline, the retriever often acts as the bottleneck! Instead of training a better embedding model, we explore using a reasoning model both as the retriever&generator. To do this, we add MCTS to the generative retrieval pipeline. Check out Chaeeun Kim's post!

🚨 New Paper co-led with byeongguk jeon 🚨 Q. Can we adapt Language Models, trained to predict next token, to reason in sentence-level? I think LMs operating in higher-level abstraction would be a promising path towards advancing its reasoning, and I am excited to share our

Thrilled to announce that I will be joining UT Austin Computer Science at UT Austin as an assistant professor in fall 2026! I will continue working on language models, data challenges, learning paradigms, & AI for innovation. Looking forward to teaming up with new students & colleagues! 🤠🤘

Using LLMs to build AI scientists is all the rage now (e.g., Google’s AI co-scientist [1] and Sakana’s Fully Automated Scientist [2]), but how much do we understand about their core scientific abilities?

We know how LLMs can be vastly useful (solving complex math problems) yet

![Jiayi Geng (@jiayiigeng) on Twitter photo](https://pbs.twimg.com/media/Gr9RJv8W8AE8HWy.png)