Shervine Amidi

@shervinea

Ecole Centrale Paris, Stanford University. Twin of @afshinea. New book on Transformers & Large Language Models at superstudy.guide.

ID: 446966253

https://amidi.ai/shervine 26-12-2011 10:33:25

258 Tweets

3.3K Followers

85 Following

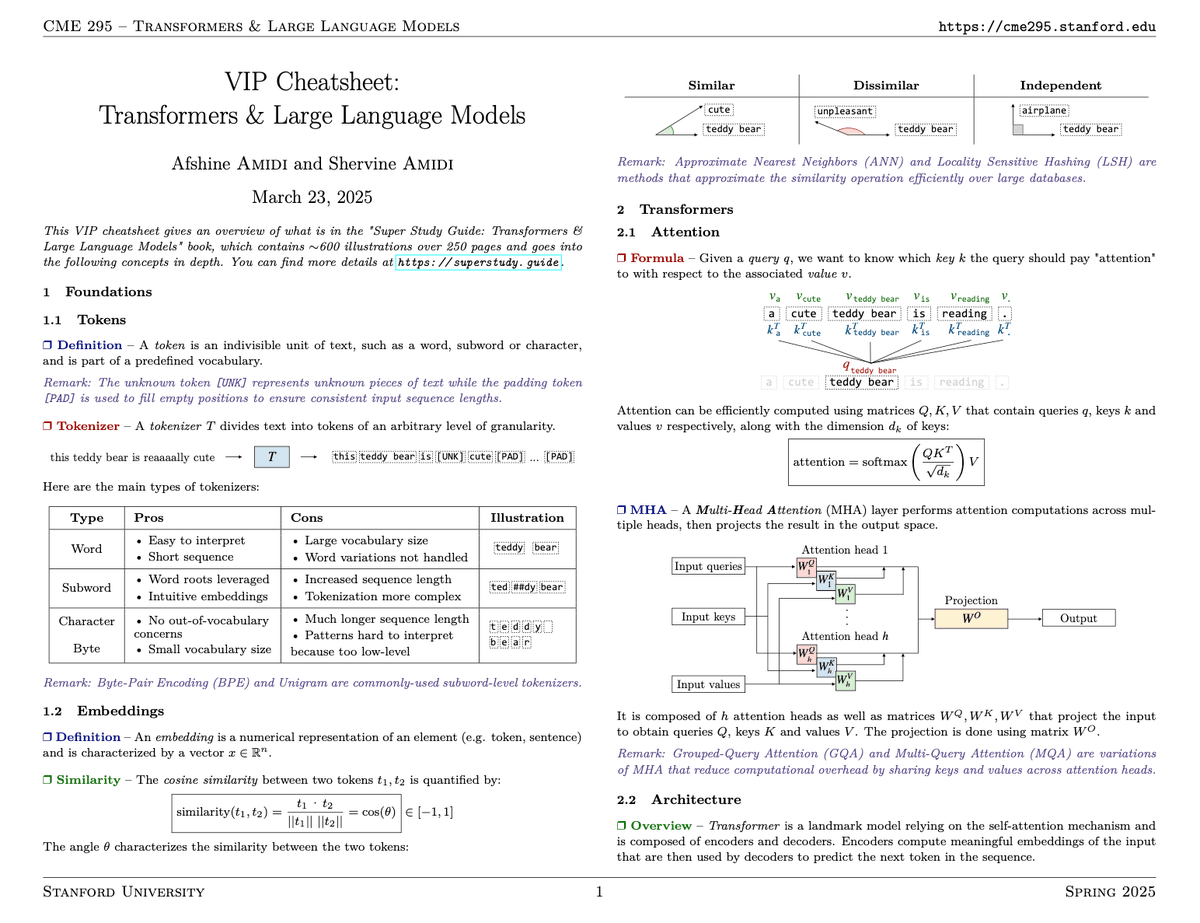

Impressive new book by Afshine Amidi and Shervine Amidi — "Super Study Guide: Transformers & Large Language Models" …

I’m enjoying reading through Afshine Amidi & Shervine Amidi’s latest book on Transformers and LLMs. It’s a good balance between math formalizations and application of the latest techniques in foundational models! And the graphs are beautiful 🖌️

This book is really nicely illustrated! Nearly every page has multiple figures/diagrams that help explain the underlying concepts behind transformers & LLMs, including embeddings, attention, LoRA, distillation, quantization, ... Nice work, Afshine Amidi and Shervine Amidi!

New book "Super Study Guide: Transformers & Large Language Models" by Afshine Amidi and Shervine Amidi: amzn.to/3SW6YYm Beautifully presented, excellent content, timely, thorough, educational, and a result of great dedication. Shervine and Afshine tell me that they started

Really enjoying this visual study guide on Transformers and LLMs. It contains a very concise overview of the key concepts in Transformers and LLMs. Topics range from embeddings to attention mechanism to post-training techniques. Thanks for the great book Shervine Amidi and

If you are looking to have a better understanding of LLMs and how they (really) work, do yourself a favor and get this book by Afshine Amidi and Shervine Amidi. It is amazing! It covers all the important concepts behind the Transformer architecture with deep learning foundations,

Thank you Bhavesh Bhatt for the great review!

This Spring, my twin brother Shervine Amidi and I will be teaching a new class at Stanford called "Transformers & Large Language Models" (CME 295). The goal of this class is to understand where LLMs come from, how they are trained, and where they are most used. We will also explore

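The Japanese edition of the Stanford lecture material "Transformers & Large Language Models", written by Gemini development engineers, by Yoshiyuki Nakai 中井喜之. I want to read this one thoroughly. There is a book introduction page at superstudy.guide/transformer-da… and also a free cheat sheet: github.com/afshinea/stanf…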