shiemannor

@shiemannor

Prof@Technion, Researcher@Nvidia, Founder@Jether Energy. Trying to get machine learning to really work.

ID: 1077174278917885953

24-12-2018 12:08:55

16 Tweets

275 Followers

12 Following

In case you missed our three papers in #Neurips2022, here they are again: 1. "RL with a Terminator" at the main conference: arxiv.org/abs/2205.15376 Guy Tennenholtz Nadav Merlis Lior Shani Uri Shalit shiemannor Gal Chechik

2. "Implementing RL Datacenter Congestion Control in NVIDIA NICs" at RL4RealLife and @ML4systems (spotlight) workshops: arxiv.org/abs/2207.02295 Benjamin Fuhrer yuval shpigelman Chen Tessler shiemannor Gal Chechik

3. "SoftTreeMax: Policy Gradient with Tree Search" at the @DeepRL workshop: arxiv.org/abs/2209.13966 shiemannor Gal Chechik

7/ This work was made possible thanks to my amazing co-authors: Yoni Kasten, Yunrong Guo, Shie Mannor, Gal Chechik, and Jason Peng. More videos, a link to the paper, and code (coming real soon!): research.nvidia.com/labs/par/calm/

We released a multi-agent RL framework for network congestion control with the first public realistic network simulator! github.com/NVlabs/RLCC. Based on the amazing work of Benjamin Fuhrer and Chen Tessler

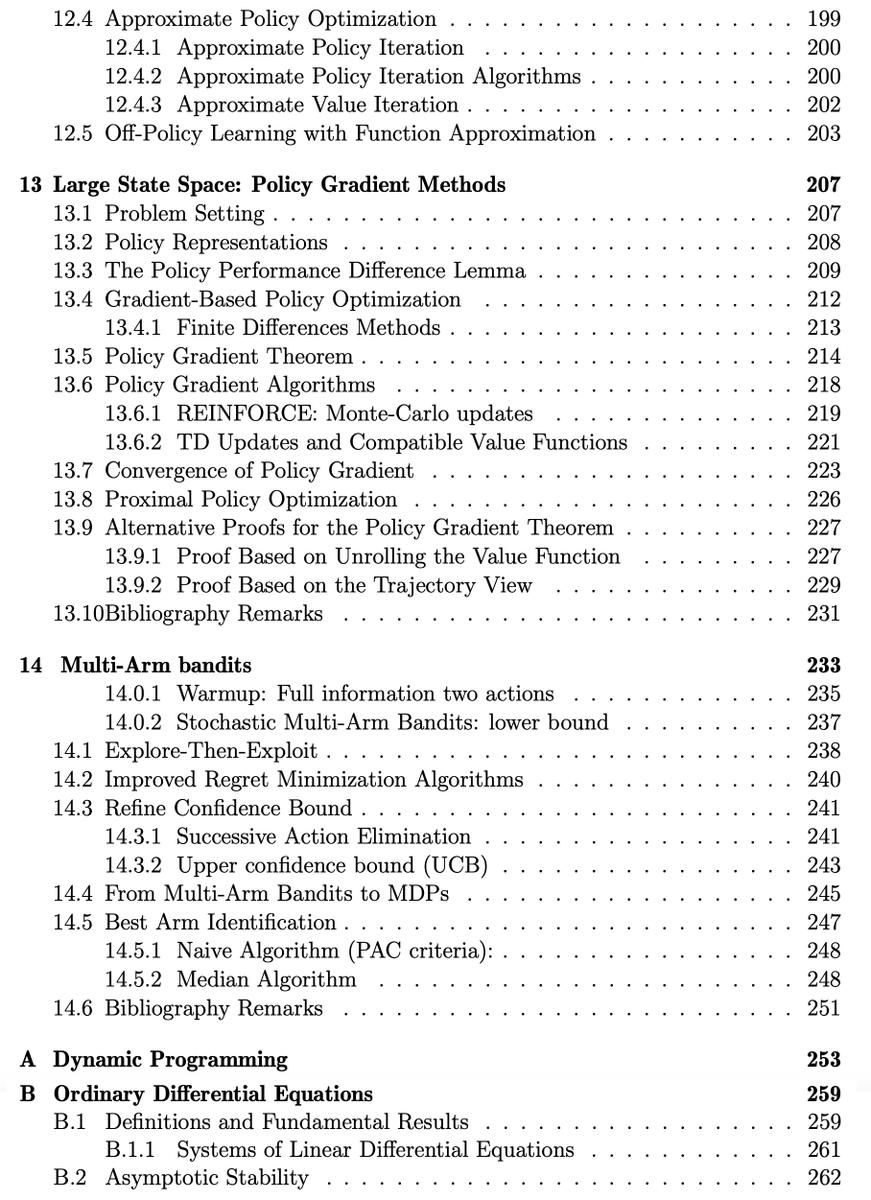

Want to learn / teach RL? Check out new book draft: Reinforcement Learning - Foundations sites.google.com/view/rlfoundat… W/ shiemannor and Yishay Mansour This is a rigorous first course in RL, based on our teaching at TAU CS and Technion ECE.

We hope you find it useful! The book is still work in progress - we’d be grateful for comments, suggestions, omissions, and errors of any kind, at [email protected]

Chen Tessler (@chentessler): 1/ Excited to share that our latest work, Conditional Adversarial Latent Models [CALM], has been accepted to ACM SIGGRAPH 2023.

🧵👇

#reinforcementlearning #animation #games #isaacgym #siggraph2023 NVIDIA AI

![Chen Tessler (@chentessler) on Twitter photo](https://pbs.twimg.com/media/FvJRUI_WwAAzgK8.jpg)

UriG (@uri_gadot): Tired of manual #ComfyUI workflow design? While recent methods predict them, our new paper, FlowRL, introduces a Reinforcement Learning framework that learns to generate complex, novel workflows for you!

paper [arxiv.org/abs/2505.21478]

![UriG (@uri_gadot) on Twitter photo](https://pbs.twimg.com/media/GuzGuaEWcAAA4wT.jpg)