Shivam Garg

@shivamg_13

Researcher @MSFTResearch. Previously postdoc @Harvard and PhD student @Stanford.

ID: 128799170

02-04-2010 06:33:50

34 Tweets

247 Followers

252 Following

New reqs for junior to senior researcher positions: jobs.careers.microsoft.com/global/en/job/… , jobs.careers.microsoft.com/global/en/job/…, jobs.careers.microsoft.com/global/en/job/…, jobs.careers.microsoft.com/global/en/job/…, along with postdoc positions from Akshay and Miro Dudik x.com/MiroDudik/stat… . Please apply, or pass these along to anyone who might be interested :-)

Machine unlearning ("removing" training data from a trained ML model) is a hard, important problem. Datamodel Matching (DMM): a new unlearning paradigm with strong empirical performance! w/ Kristian Georgiev, Roy Rinberg, Sam Park, Shivam Garg, Aleksander Madry, Seth Neel (1/4)

How to be more emotionally intelligent (without trying so hard) 🧵 for Threadapalooza!

The Belief State Transformer edwardshu.com/bst-website/ is at ICLR this week. The BST objective efficiently creates compact belief states: summaries of the past sufficient for all future predictions. See the short talk: microsoft.com/en-us/research… and mgostIH for further discussion.

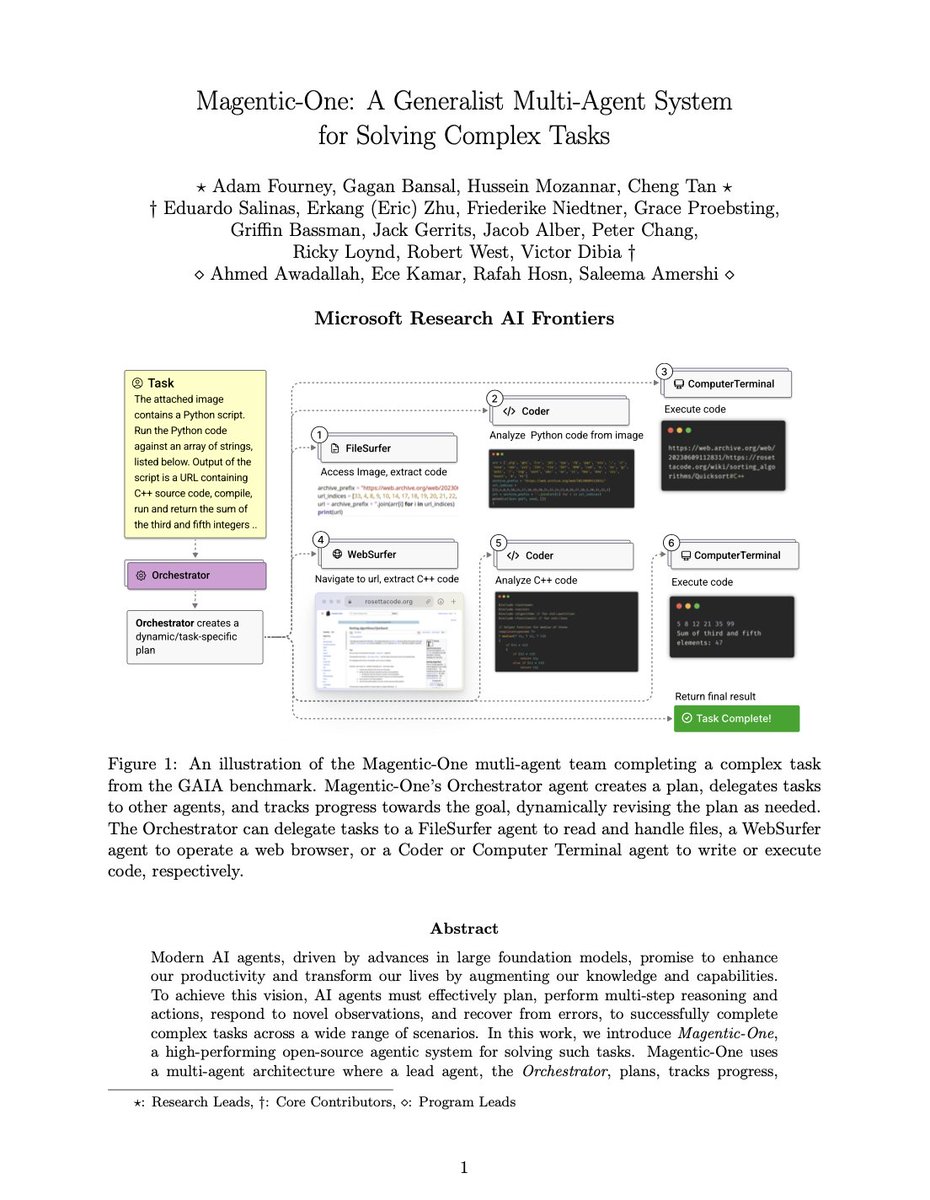

🚀 Introducing Magentic-UI — an experimental human-centered web agent from Microsoft Research . It automates your web tasks while keeping you in control 🧠🤝—through co-planning, co-tasking, action guards, and plan learning. 🔓 Fully open-source. We can't wait for you to try it. 🔗

“How will my model behave if I change the training data?” Recent(-ish) work w/ Logan Engstrom: we nearly *perfectly* predict ML model behavior as a function of training data, saturating benchmarks for this problem (called “data attribution”).

Dimitris Papailiopoulos (@dimitrispapail): LLMs Can In-context Learn Multiple Tasks in Superposition

We explore a bizarre LLM superpower that allows them to solve multiple ICL tasks in parallel.

This is related to the view of them as simulators in superposition [cref: j⧉nus]

arxiv.org/pdf/2410.05603

1/n

![Dimitris Papailiopoulos (@dimitrispapail) on Twitter photo](https://pbs.twimg.com/media/GZjNzBjW4AE0ewQ.png)