Shrutimoy Das

@shrutimoy

PhD Student at IIT Gandhinagar

ID: 1405624883254493186

17-06-2021 20:34:36

40 Tweets

79 Followers

335 Following

An amazing 🤩 panel on Generative Models: Past, present and future; with Partha Talukdar, Prateek Jain, Yonatan Belinkov ✈️ COLM2025, Bhuvana Ramabhadran, and Sneha Mondal! ✨ #ResearchWeekWithGoogle 2023! #GoogleResearchIndia

Scientific discovery in the Age of AI 🧪🤖🧑‍🔬✨ ...now published in Nature! It's been fantastic writing this survey spin-off of the AI for Science workshops with these amazing coauthors! Thanks Marinka Zitnik for always keeping our spirits high! 😊 nature.com/articles/s4158…

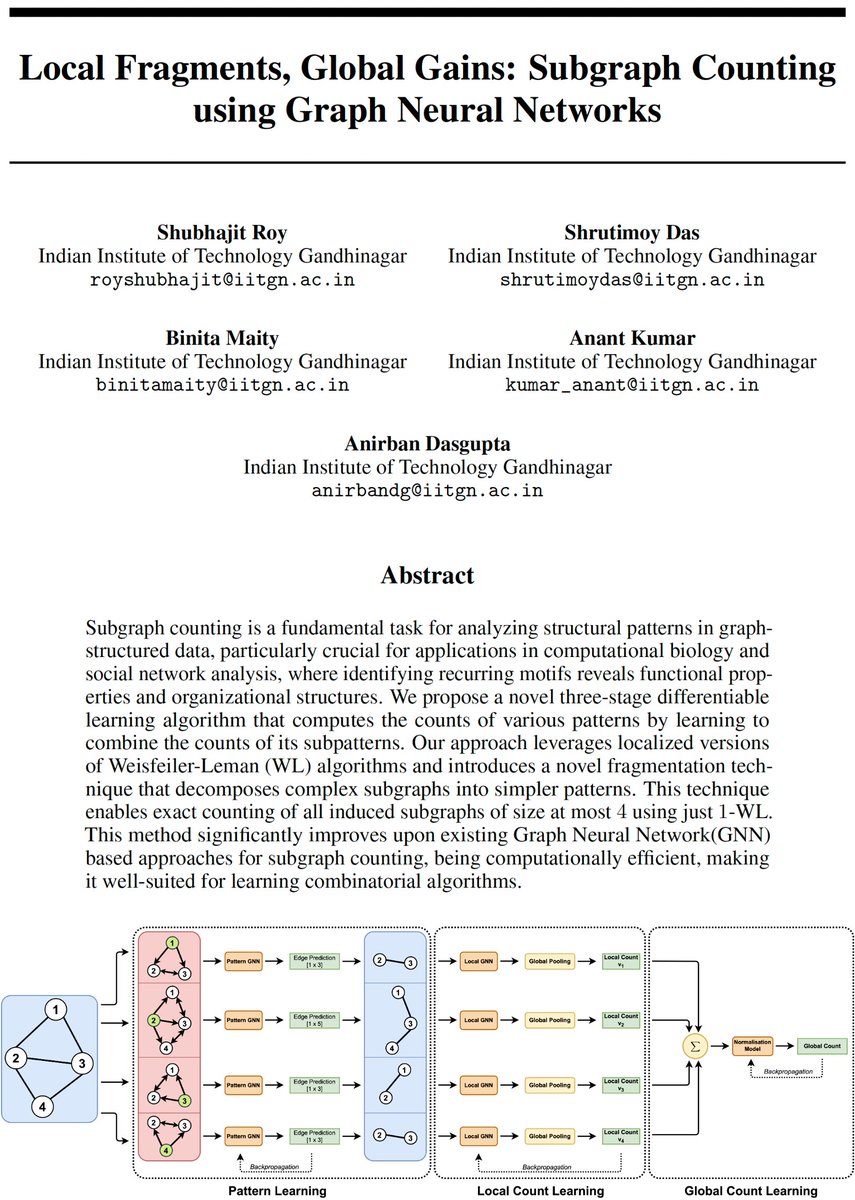

🚀 Excited to share that our paper was accepted at DiffCoAlg@NeurIPS 2025 (Differentiable Learning of Combinatorial Algorithms)! 🎉 🙏 Thanks to Shrutimoy Das, Binita Maity, Anant Kumar, Anirban Dasgupta & CSE@IITGN. #NeurIPS2025 #GNN #GraphLearning #AIResearch

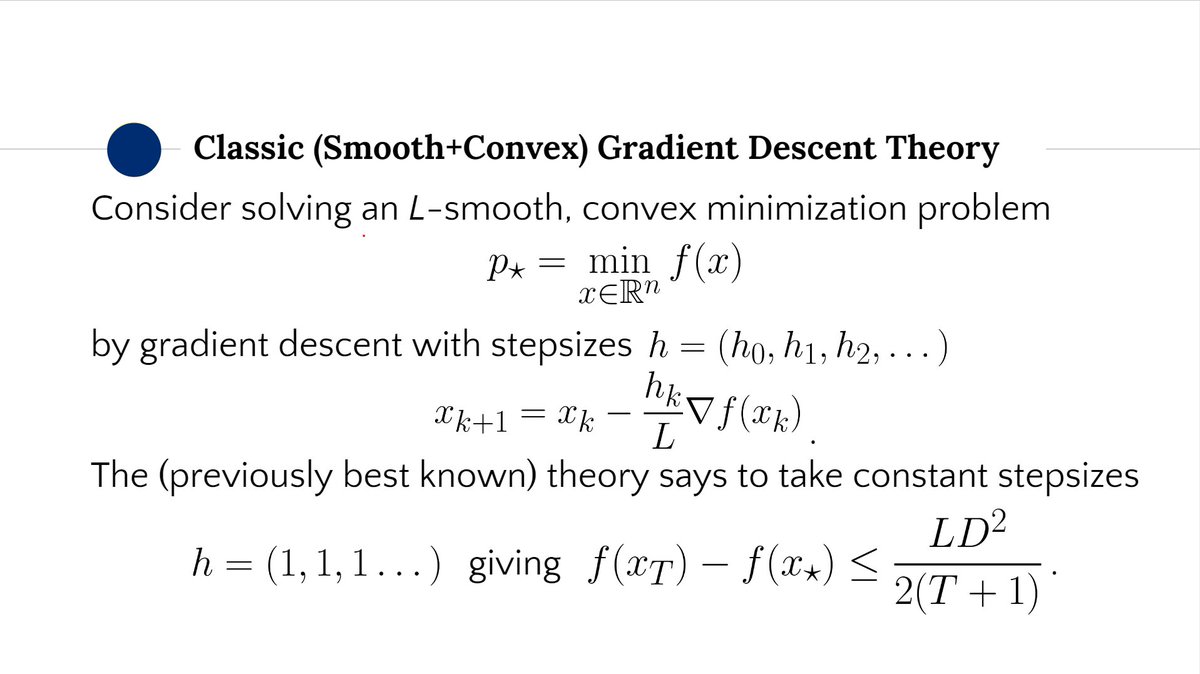

Ben Grimmer (@prof_grimmer): The new strangest results of my career (with Kevin Shu and Alex Wang).

Gradient descent can accelerate (in big-O!) by just periodically taking longer steps.

No momentum needed to beat O(1/T) in smooth convex opt!

Paper: arxiv.org/abs/2309.09961 [1/3]

![Ben Grimmer (@prof_grimmer) on Twitter photo](https://pbs.twimg.com/media/F6Y-yrxaEAA15vr.jpg)
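
To make the "periodically taking longer steps" idea concrete, here is a minimal Python sketch of the mechanics only. Everything in it is an assumption made for illustration: the toy diagonal quadratic, the smoothness constant `L_SMOOTH = 1.0`, the helper names, and the cyclic step pattern `[1.5, 4.9, 1.5]` (in units of 1/L), none of which comes from the tweet. A single toy problem says nothing about worst-case rates, so this should not be read as a reproduction of the paper's result.

```python
# Toy comparison: constant-step gradient descent vs. a cyclic pattern that
# periodically takes a step longer than 2/L. All names and numbers here are
# illustrative assumptions, not values taken from the tweet.

def objective(x, eigs):
    """f(x) = 0.5 * sum_i eigs[i] * x[i]^2, a smooth convex quadratic."""
    return 0.5 * sum(lam * xi * xi for lam, xi in zip(eigs, x))

def gradient_descent(x0, eigs, smoothness, pattern, num_steps):
    """Run gradient descent, cycling through `pattern` as multiples of 1/L."""
    x = list(x0)
    for t in range(num_steps):
        step = pattern[t % len(pattern)] / smoothness
        # The gradient of the diagonal quadratic is eigs[i] * x[i].
        x = [xi - step * lam * xi for lam, xi in zip(eigs, x)]
    return x

N = 50
# Curvatures spread between 0.001 and 0.9, all below L_SMOOTH (assumption).
EIGS = [0.001 + (0.9 - 0.001) * i / (N - 1) for i in range(N)]
L_SMOOTH = 1.0
X0 = [1.0] * N
NUM_STEPS = 300  # a multiple of the pattern length, so cycles are complete

x_const = gradient_descent(X0, EIGS, L_SMOOTH, [1.0], NUM_STEPS)
x_long = gradient_descent(X0, EIGS, L_SMOOTH, [1.5, 4.9, 1.5], NUM_STEPS)

f_init = objective(X0, EIGS)
f_const = objective(x_const, EIGS)
f_long = objective(x_long, EIGS)

print("initial objective:       ", f_init)
print("constant step 1/L:       ", f_const)
print("cyclic long-step pattern:", f_long)
```

The long step (4.9/L) can increase the objective on its own; the two shorter steps around it are what keep a full cycle contractive on this problem. On other problems, for example one with curvature exactly equal to L, the same pattern can do worse than the constant step.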