Siddharth Karamcheti

@siddkaramcheti

PhD student @stanfordnlp & @StanfordAILab. Robotics Intern @ToyotaResearch. I like language, robots, and people. On the academic job market!

ID: 1036997113274662920

http://www.siddkaramcheti.com/ 04-09-2018 15:19:13

991 Tweets

3.3K Followers

799 Following

HNY! Lately I took a crack at implementing the pi0 model from Physical Intelligence:
PaliGemma VLM (2.3B, fine-tuned) + 0.3B "action expert" MoE + block attention
Flow matching w/ action chunking
Strong eval on Simpler w/ 75ms inference
github.com/allenzren/open… ckpts available! 👇(1/6)

Alphaxiv is an awesome way to discuss ML papers, often with the authors themselves. Here's an intro and demo by Raj Palleti at #neurips2024.

Thrilled to share this story covering our collaboration with Project Aria at Meta Reality Labs! Human data is robot data in disguise. Imitation learning is human modeling. We are at the beginning of something truly revolutionary, both for robotics and for human-level AI beyond language.

Welcome #HRIPioneers2025! Megha Srivastava from Stanford University will present their work 'Robotics for Personalized Motor Skills Instruction' at the HRI Conference. Read more on Megha's website: cs.stanford.edu/~megha

Here is another uncut video of real-time interactions with Google DeepMind's Gemini Robotics!

Introducing ✨Latent Diffusion Planning✨ (LDP)! We explore how to use expert, suboptimal, & action-free data. To do so, we learn a diffusion-based *planner* that forecasts latent states, and an *inverse-dynamics model* that extracts actions. w/ Oleg Rybkin, Dorsa Sadigh, Chelsea Finn

I'm joining UW–Madison Computer Sciences (UW School of Computer, Data & Information Sciences) as an assistant professor in fall 2026!! There, I'll continue working on language models, computational social science, & responsible AI. 🌲🧀🚣🏻‍♀️ Apply to be my PhD student! Before then, I'll postdoc for a year at another UW 🏔️: UW NLP at the Allen School.

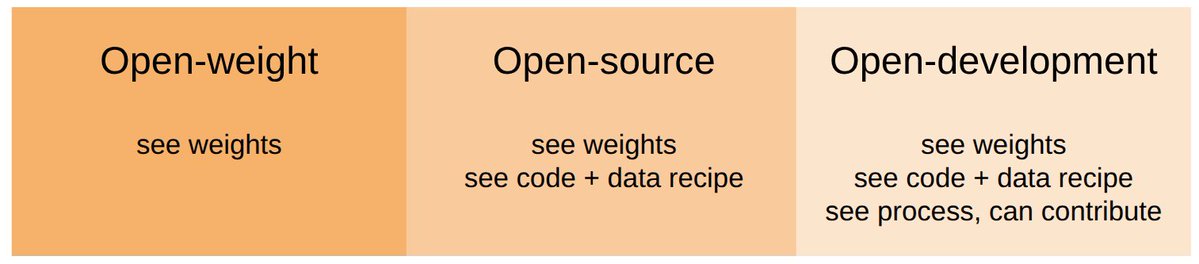

For a rare look into how LLMs are really built, check out David Hall's retrospective on how we trained the Marin 8B model from scratch (and outperformed Llama 3.1 8B base). It’s an honest account with all the revelations and mistakes we made along our journey. Papers are forced to