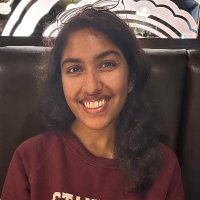

Simran Arora

@simran_s_arora

cs @StanfordAILab @hazyresearch

ID: 4712264894

https://arorasimran.com/ 05-01-2016 06:18:44

311 Tweets

3.3K Followers

193 Following

Really cool work led by Sabri Eyuboglu Ryan Ehrlich Simran Arora! The idea of training a "cartridge" which represents the knowledge in a document (or corpora), and can be slotted into LLMs to support engagement has tons of applications/practical importance for law (1/4)

Thrilled to share that I'll be starting as an Assistant Professor at Georgia Tech (Georgia Tech School of Interactive Computing / Robotics@GT / Machine Learning at Georgia Tech) in Fall 2026. My lab will tackle problems in robot learning, multimodal ML, and interaction. I'm recruiting PhD students this next cycle – please apply/reach out!