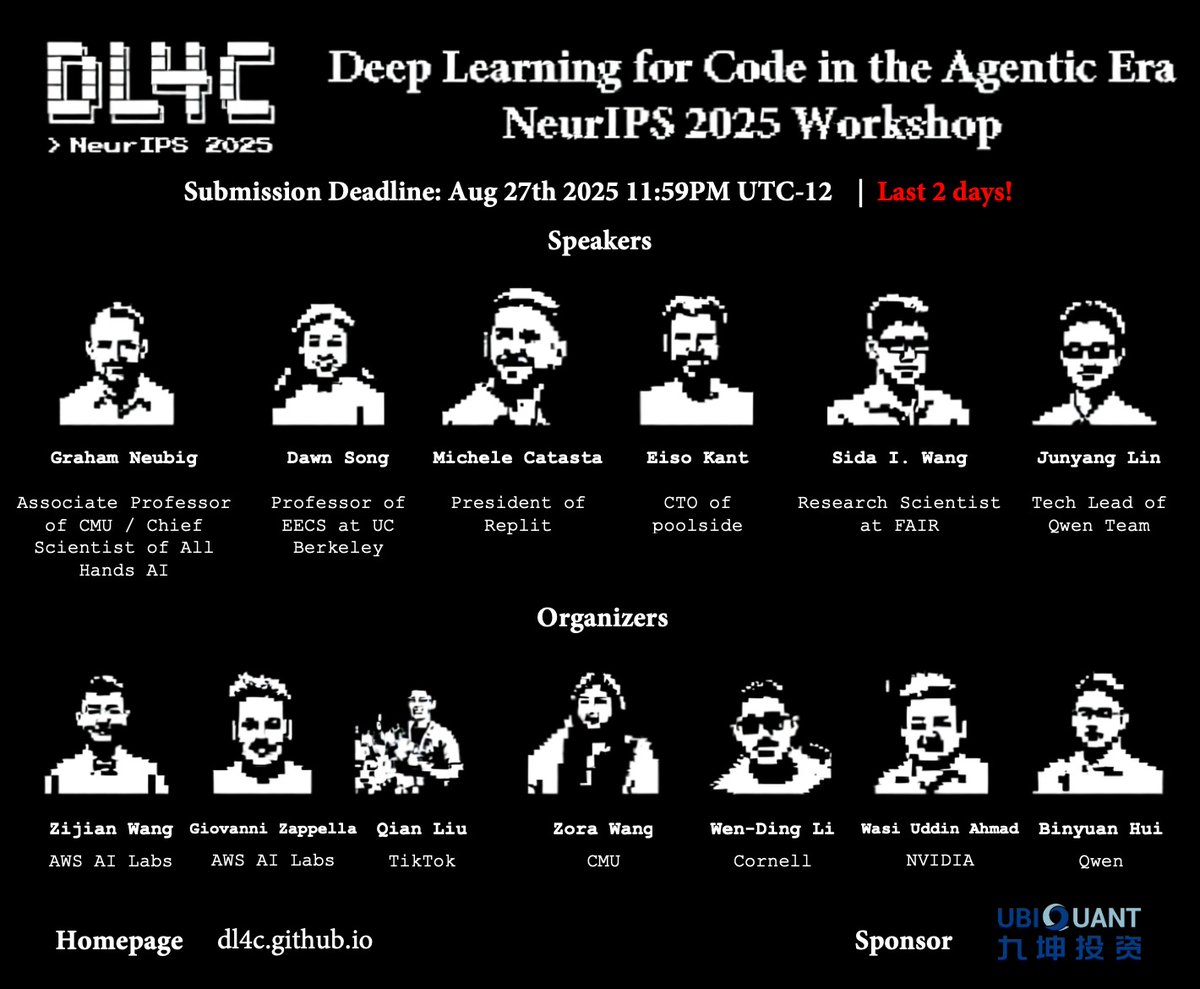

Qian Liu

@sivil_taram

Researcher @ TikTok 🇸🇬

📄 Sailor / StarCoder / OpenCoder

💼 Past: Research Scientist @SeaAIL; PhD @MSFTResearch

🧠 Contribution: @XlangNLP @BigCodeProject

ID: 1465140087193161734

http://siviltaram.github.io/

29-11-2021 02:06:42

1.1K Tweets

3.3K Followers

674 Following