Solim LeGris

@solimlegris

phd student at nyu. figuring out how to make computers behave like humans in the relevant ways.

ID: 829775316

http://solimlegris.com 17-09-2012 20:44:36

55 Tweets

152 Followers

279 Following

Today (now!), Solim LeGris explains how we predict insight using behavioural data in a physical reasoning game. Check out poster #CogSci2024 P3-LL-867 at 1pm today! Learn about the project here: exps.gureckislab.org/e/blue-giganti…

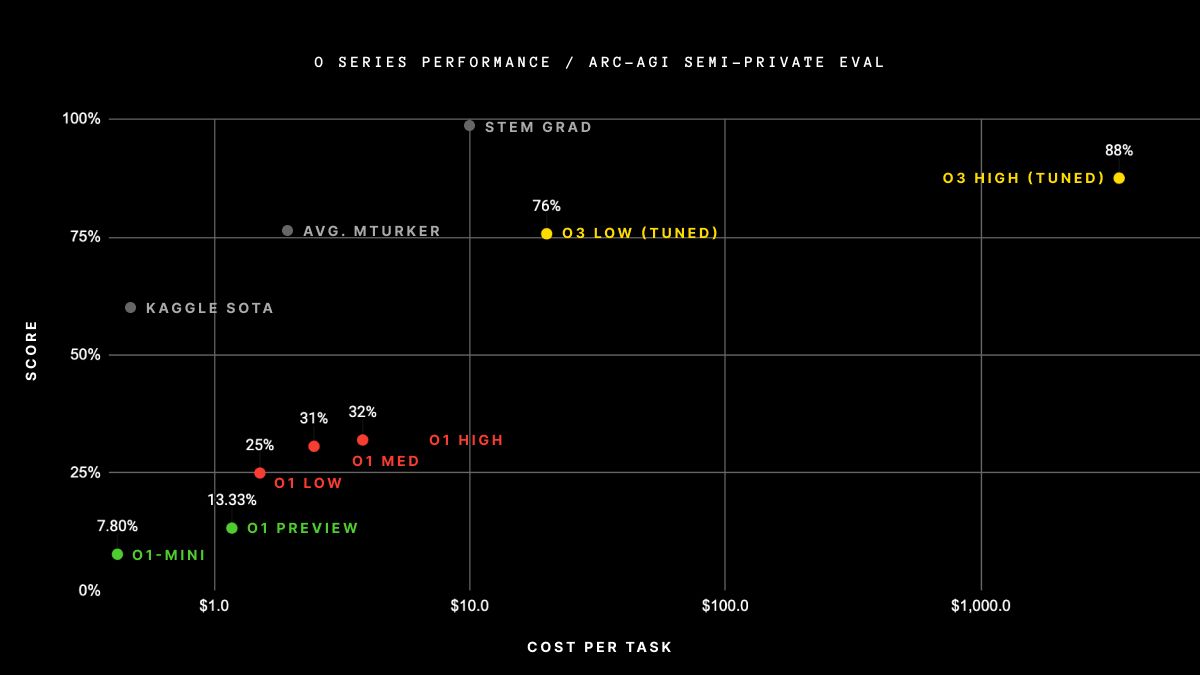

One inspiration for ARC-AGI solutions is the psychology of how humans solve novel tasks. A new study by todd gureckis, Brenden Lake, Solim LeGris, and Wai Keen Vong at NYU explores human performance on ARC and finds that 98.7% of the public tasks are solvable by at least one MTurker.

We have a new preprint led by Solim LeGris and Wai Keen Vong (w/ Brenden Lake) looking at how people solve the Abstraction and Reasoning benchmark. arxiv.org/abs/2409.01374 ARC is one of the most challenging & longstanding AGI benchmarks and the focus of a large $$ prize, the ARC Prize 🧵

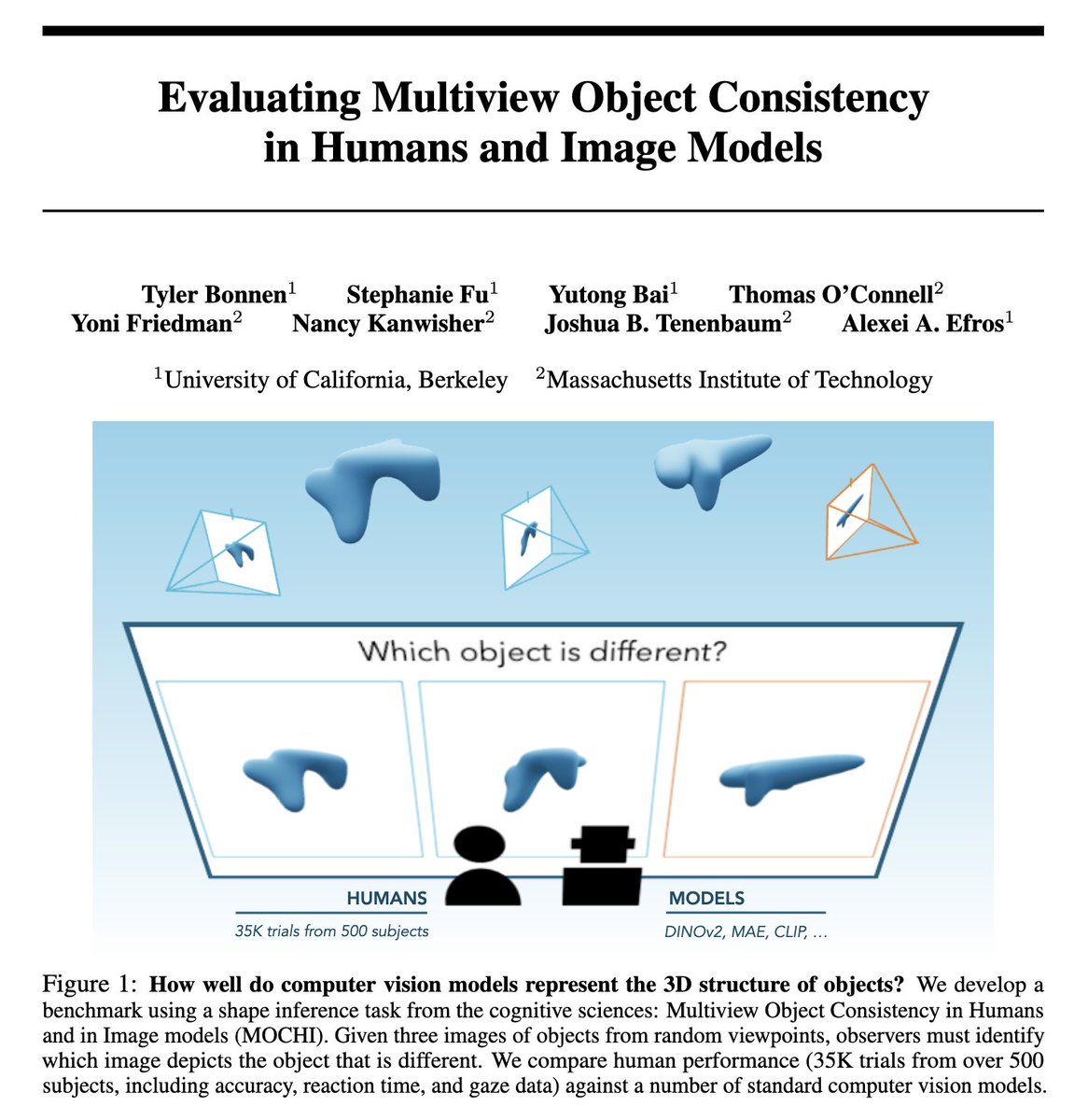

do large-scale vision models represent the 3D structure of objects? excited to share our benchmark: multiview object consistency in humans and image models (MOCHI) with Stephanie Fu Yutong Bai Thomas O'Connell @_yonifriedman Nancy Kanwisher @[email protected] Josh Tenenbaum and Alexei Efros 1/👀

Humans still outperform AI on visual reasoning tasks, according to a new study by Solim LeGris, Wai Keen Vong, Brenden Lake, and todd gureckis. Despite recent advances, the top AI models still perform significantly worse than humans on ARC, the benchmark behind the ARC Prize. nyudatascience.medium.com/human-intellig…

There are infinitely many ways to write a program. In our new work, we show that training autoregressive LMs to synthesize programs with sequences of edits improves the trade-off between zero-shot generation quality and inference-time compute. (1/8) w/ Rob Fergus Lerrel Pinto

Solim LeGris and Wai Keen Vong estimated that average human performance on ARC is about 64% correct (on the public eval set). This would make o3 clearly better than the average human. Notably, almost all tasks were solvable by at least one person. arxiv.org/abs/2409.01374