Saurabh Saxena

@srbhsxn

Researcher at Google DeepMind

ID: 1876211365

http://saurabhsaxena.org 17-09-2013 16:59:15

129 Tweets

846 Followers

373 Following

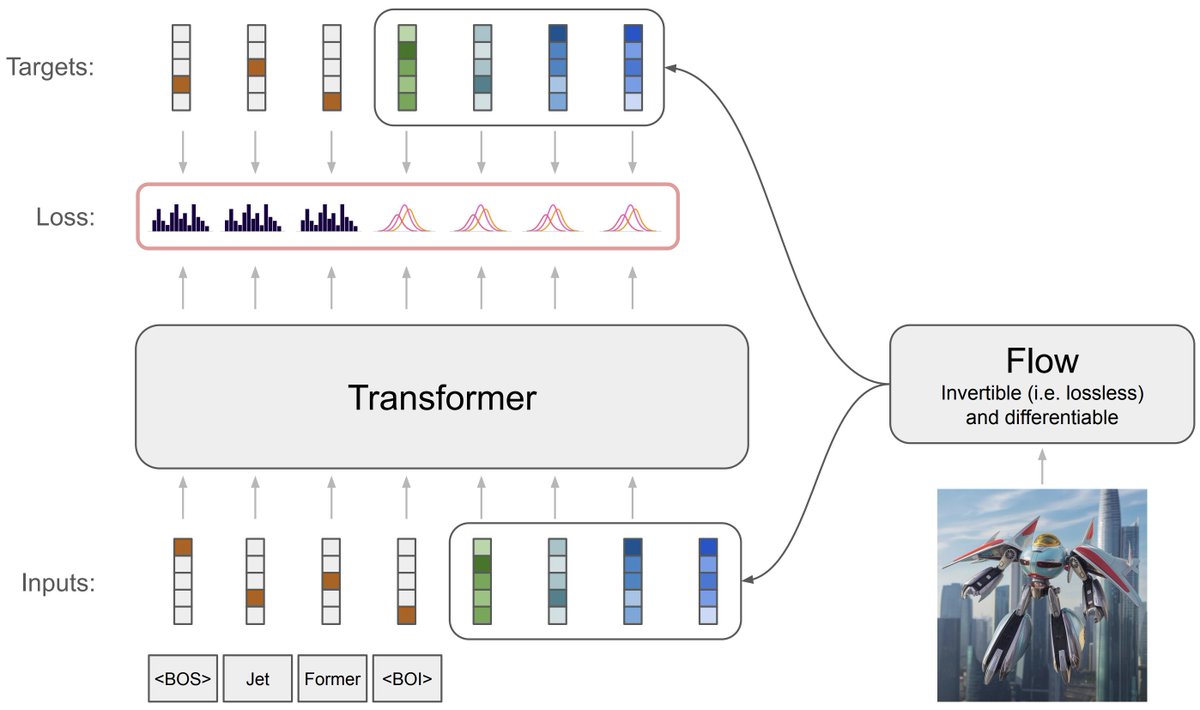

Looking for diffusion model advancements at #NeurIPS2023? Come check out our oral work "Understanding Diffusion Objectives as the ELBO with Simple Data Augmentation" w/ Durk Kingma. New theoretical understanding, SOTA empirical results, and more! arXiv: arxiv.org/abs/2303.00848

Excited to release NeRFiller with my amazing collaborators Aleksander Holynski, Varun Jampani, Saurabh Saxena, Noah Snavely, Abhishek Kar, and Angjoo Kanazawa! The project page is available at ethanweber.me/nerfiller/. We focus on scene completion by using a 2D inpainter.

Hiring Research Scientists within Google DeepMind - Toronto to join our team & advance the next generation of medical AI, develop cutting-edge LLMs & Multi-modal models to tackle real-world healthcare challenges. Please submit your interest through: forms.gle/2cSbBotUwSfVfu…

[[THREAD]] Happy to announce 4DiM, our diffusion model for novel view synthesis of scenes! 4DiM allows camera+time control with as few as one input image. Joint work with Saurabh Saxena* Lala Li* Andrea Tagliasacchi 🇨🇦 David Fleet *equal contribution

My Google colleague and longtime UC Berkeley faculty member David Patterson has a great essay out in this month's Communications of the ACM (Association for Computing Machinery): 🎉 "Life Lessons from the First Half-Century of My Career: Sharing 16 life lessons, and nine magic words." I saw an