Yoshi Suhara

@suhara

Building Small & Large Language Models @nvidia


https://yoshi-suhara.com 25-06-2007 05:20:01

163 Tweet

322 Followers

282 Following

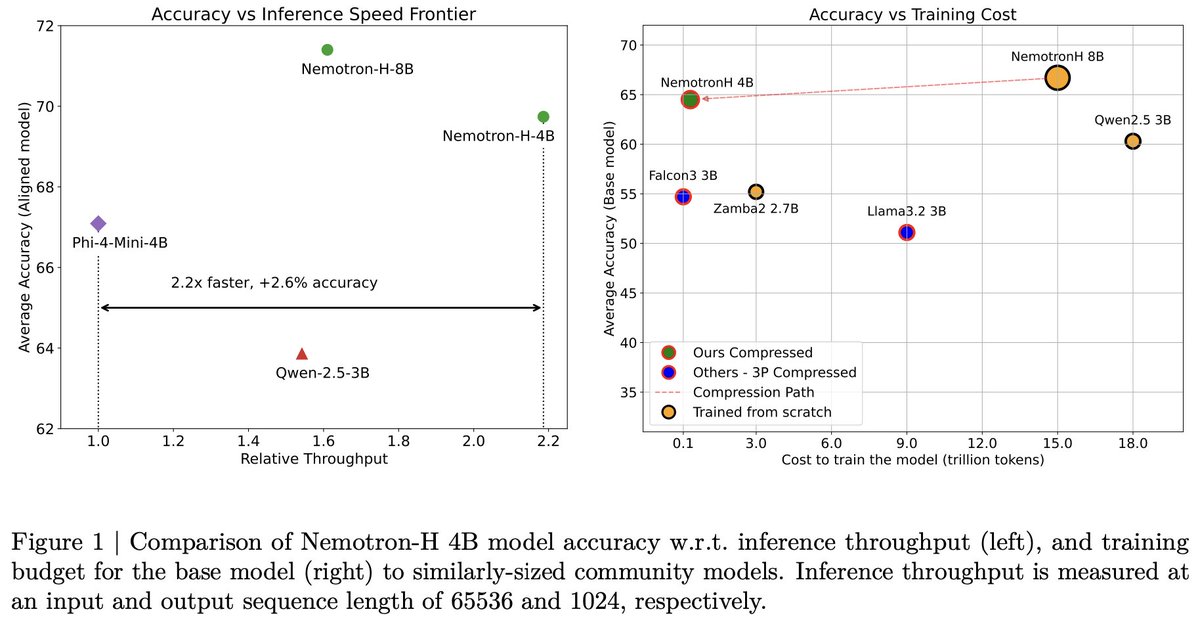

Nemotron-H base models (8B/47B/56B), a family of hybrid Mamba-Transformer LLMs, are now available on HuggingFace: huggingface.co/nvidia/Nemotro… huggingface.co/nvidia/Nemotro… huggingface.co/nvidia/Nemotro… Technical report: arxiv.org/abs/2504.03624 Blog: research.nvidia.com/labs/adlr/nemo…

A new video game benchmark for LLM agents, spanning a variety of game titles! Happy to be part of this wonderful collaboration with Dongmin Park and the amazing team at KRAFTON AI!