Sweta Agrawal

@swetaagrawal20

Research Scientist @Google Translate |

Past: Postdoc Researcher @itnewspt | Ph.D. @ClipUmd, @umdcs

#nlproc

ID: 2559288140

http://sweta20.github.io 10-06-2014 15:47:30

339 Tweets

1.1K Followers

1.1K Following

🚀 New paper alert! 🚀 Ever tried asking an AI about a 2-hour movie? Yeah… not great. Check: ∞-Video: A Training-Free Approach to Long Video Understanding via Continuous-Time Memory Consolidation! 🔗 arxiv.org/abs/2501.19098 w/ Antonio Farinhas, Dan McNamee, Andre Martins

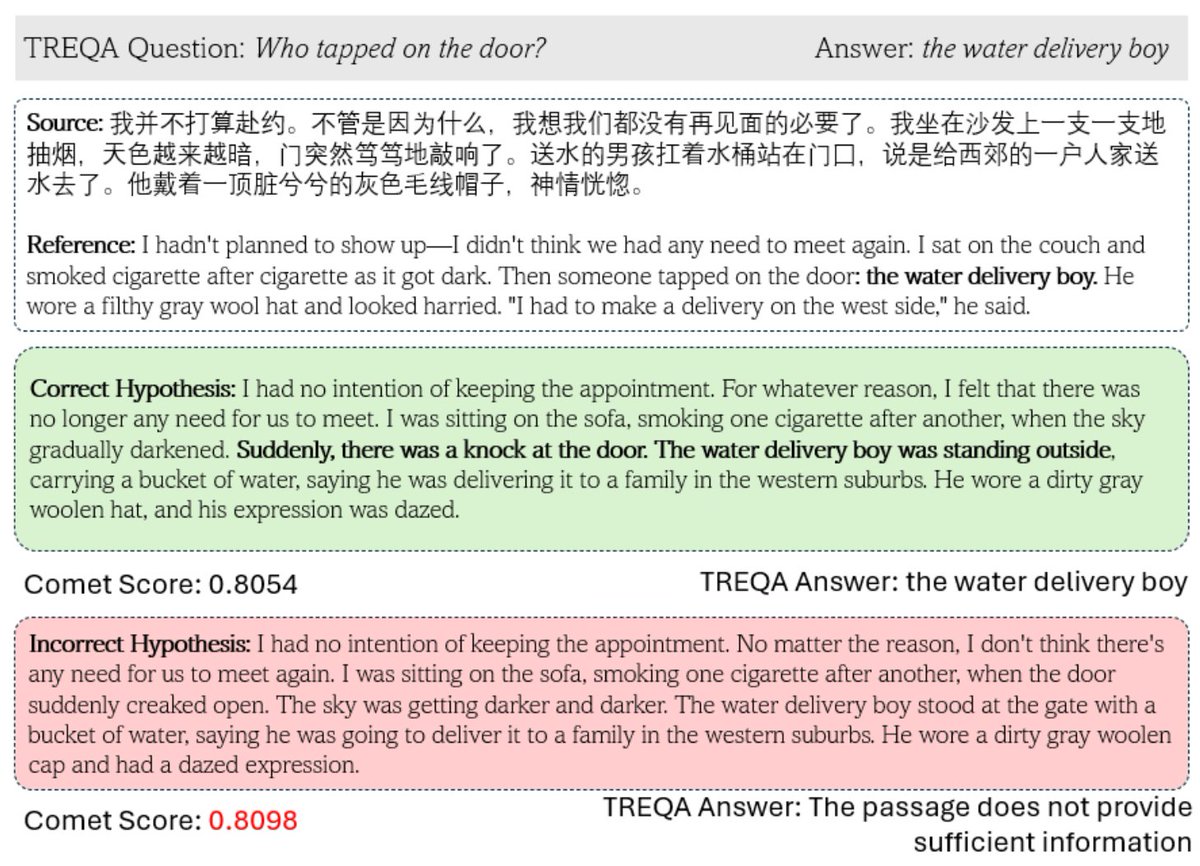

MT metrics excel at evaluating sentence translations, but struggle with complex texts. We introduce *TREQA*, a framework to assess how translations preserve key info by using LLMs to generate & answer questions about them. arxiv.org/abs/2504.07583 (co-lead Sweta Agrawal) 1/15

✨MTA was accepted at #COLM2025 ✨ Since our first announcement, we have updated the paper with scaling laws, new baselines, and more evaluations! Code is now available in our repo: github.com/facebookresear… Conference on Language Modeling

If you’re a student in India - you’ve just been granted access to a FREE Gemini upgrade worth ₹19,500 for one year 🥳✨ Claim and get free access to Veo 3, Gemini in Google apps, and 2TB storage 🔗 goo.gle/freepro. Google Gemini App