Thomas Buckley

@tabuckley_

PhD Student at @HarvardDBMI

ID: 1677706430781046784

08-07-2023 15:49:38

18 Tweets

72 Followers

146 Following

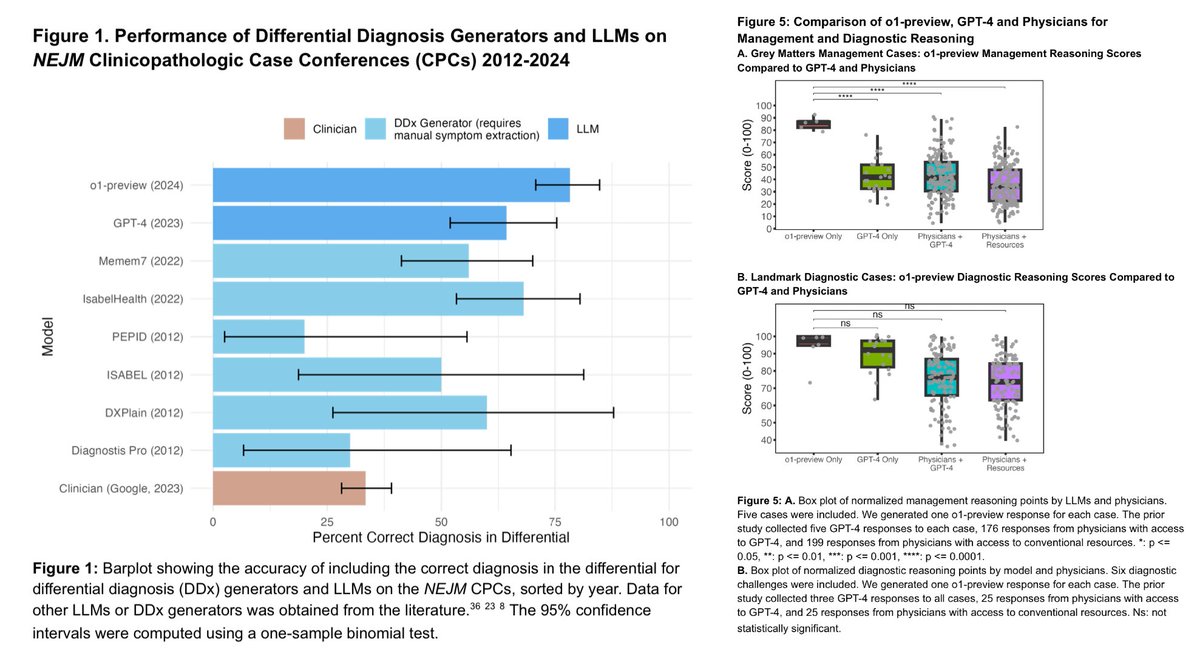

Do large language models have a probabilistic understanding of disease states? And what does this mean for the future of diagnosis and clinical reasoning? I explore this with Thomas Buckley, Arjun (Raj) Manrai, and Dan Morgan in our new paper in JAMA Network Open. A brief 🧵⬇️

Lior Pachter I believe I have improved upon UMAP and would like to introduce you to my new method: U-map! Here are some reasons I think it’s better than other methods: 1. Preserves neighborhoods 2. Incredibly robust to noise 3. Generalizable to other letters

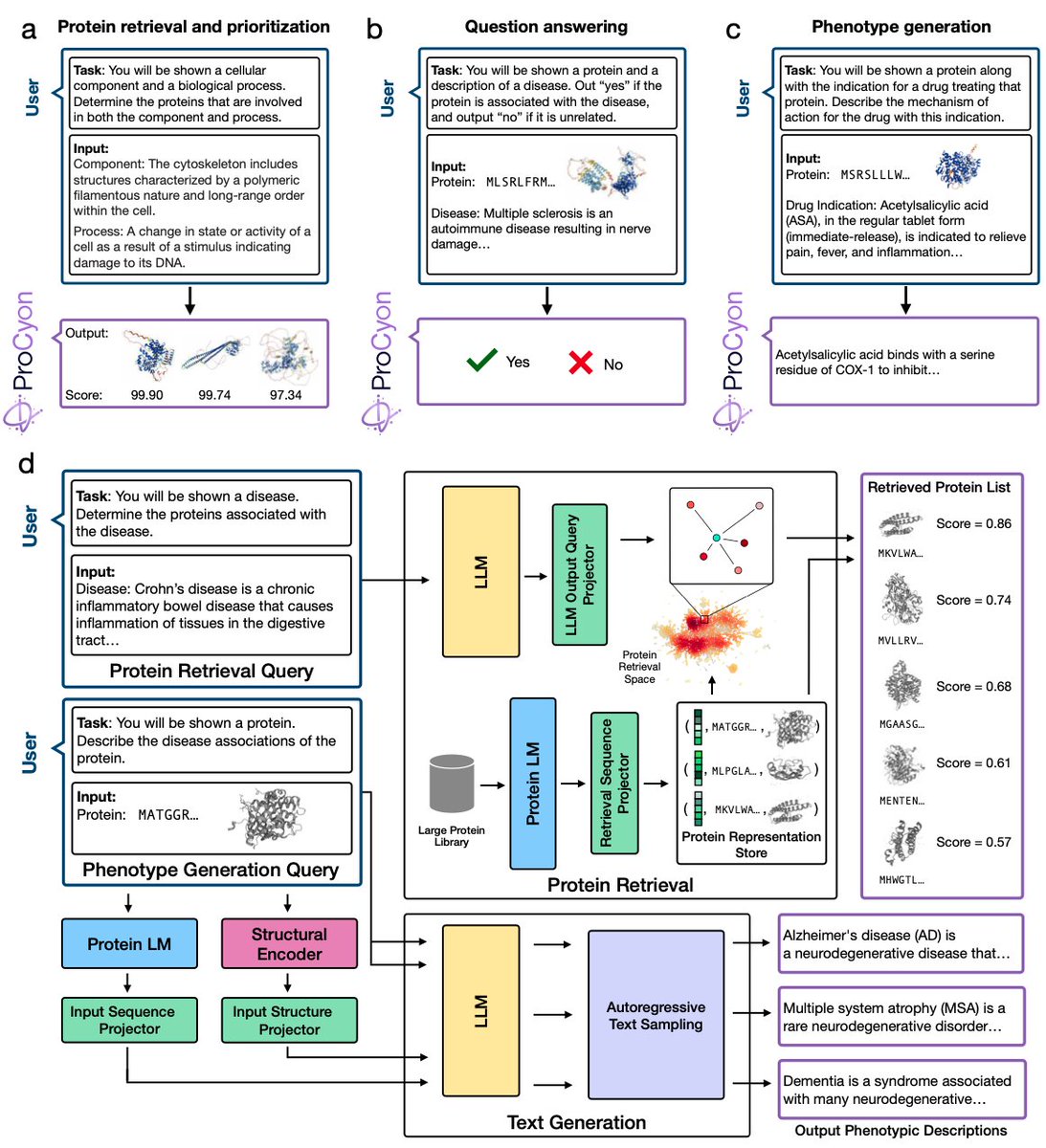

(1/4) Excited to introduce ProCyon: a multimodal foundation model to model, generate, and predict protein phenotypes, led by stellar Owen Queen Yepeng Robert Calef Valentina Giunchiglia 👉 biorxiv.org/content/10.110… ProCyon is an 11B parameter multimodal model that integrates protein

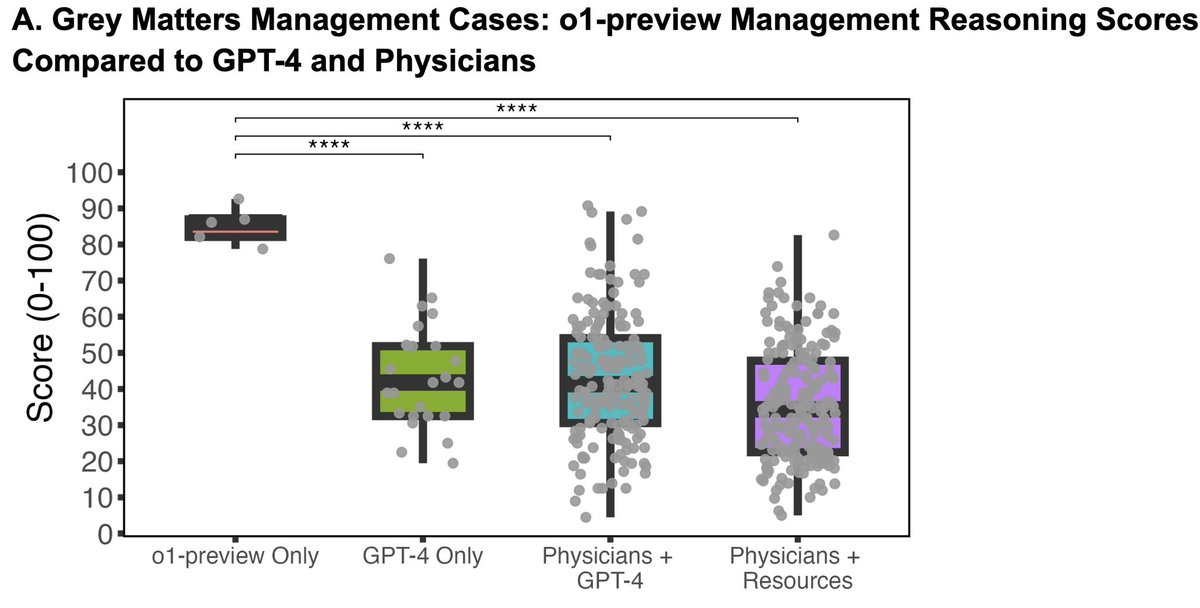

How does o1-preview compare to hundreds of clinicians in medical reasoning? We explore this in our new preprint, led by stars Thomas Buckley of DBMI at Harvard Med and Peter Brodeur of BIDMC. Full text: arxiv.org/abs/2412.10849 Great thread by co-conspirator Adam Rodman below 👇

Thanks for the sneak peek of this last month, Adam Rodman. This work from Adam and Arjun (Raj) Manrai (co-led by the inimitable Thomas Buckley) is really worth a read. The most astounding result highlighted in the thread below: 👇🏽

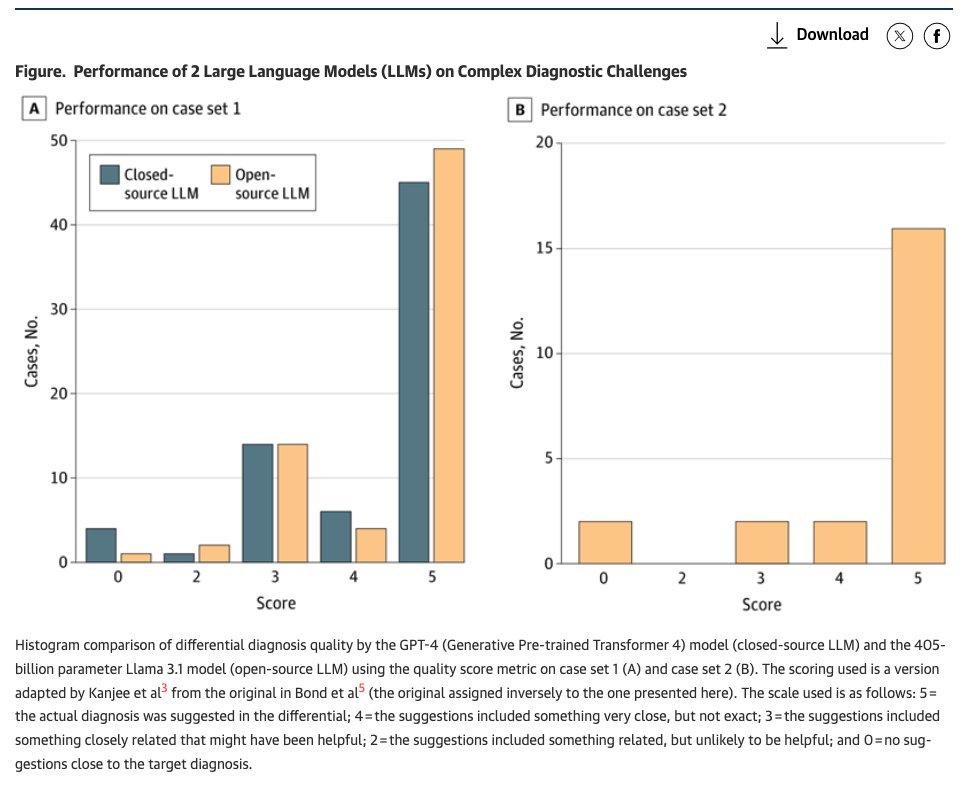

Open-source LLMs have narrowed the gap with leading proprietary models surprisingly fast. But how well do they do on tough clinical cases? Excited to share our new study out today in JAMA Health Forum led by Thomas Buckley with amazing physician coauthors. Detailed thread to come

Full study: jamanetwork.com/journals/jama-… News coverage from Harvard Medical School: hms.harvard.edu/news/open-sour…

New research letter on the diagnostic abilities of open-source models, led by superstar Thomas Buckley -- short letter (and something that I think most researchers already know) but big implications for medicine. A 🧵⬇️

AIM DBMI at Harvard Med graduate students already pushing the frontier in medical AI thecrimson.com/article/2025/3…

We just added a major new experiment to our o1 study, comparing o1 and attending physicians at key diagnostic touchpoints on REAL cases from the BIDMC ER. Stellar work led by Thomas Buckley and Peter Brodeur as part of a rapidly growing DBMI at Harvard Med and BIDMC collab 🚀