taesiri

@taesiri

PhD graduate UofA, Working on Large Multimodal Models.

ID: 827975822095036416

https://taesiri.ai/ 04-02-2017 20:23:13

542 Tweets

715 Followers

4.4K Following

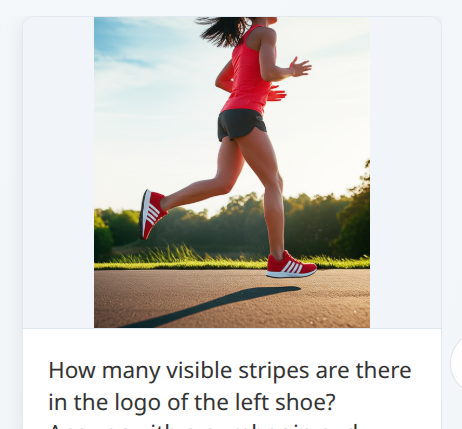

How do the best AI image editors 🤖 GPT-4o, Gemini 2.0, SeedEdit, HF 🤗 fare ⚔️ human Photoshop wizards 🧙♀️ on text-based 🏞️ image editing? Logan Bolton and Brandon Collins shared some answers at our poster today! #CVPR2025 psrdataset.github.io A few insights 👇

Pooyan Rahmanzadehgervi presenting our Transformer Attention Bottleneck paper at #CVPR2026 💡 We **simplify** MHSA (e.g. 12 heads -> 1 head) to create an attention **bottleneck** where users can debug Vision Language Models by editing the bottleneck and observing the expected VLM text outputs.

With all the attention on the new 🍌 Nano Banana, we (with Brandon Collins) stress-tested it with our "shirt-color chain" benchmark: 26 sequential edits where each output feeds into the next. Learn more about our study on image editing models here: psrdataset.github.io

yuyin zhou@ICCV2025 NeurIPS Conference We have a paper in the same situation. AC: Yes! PC: No no. NeurIPS Conference please consider whether the 1st author is a student and whether this would be their first top-tier paper BEFORE making such a cut. Healthier for junior researchers. OR use a Findings track.