Tatsunori Hashimoto

@tatsu_hashimoto

Assistant Prof at Stanford CS, member of @stanfordnlp and statsml groups; Formerly at Microsoft / postdoc at Stanford CS / Stats.

ID: 1118495199863476225

https://thashim.github.io/ 17-04-2019 12:43:31

191 Tweets

7.7K Followers

197 Following

It's been an exciting journey to work on S1. Answers to some questions people have asked: 1. Both s1K (huggingface.co/datasets/simpl…) and the full 59K dataset are public (huggingface.co/datasets/simpl…). We do not have manual verification of the authenticity of the "ground truth" solution field,

🎙️ Speech recognition is great - if you speak the right language. Our new Stanford NLP Group paper introduces CTC-DRO, a training method that reduces worst-language errors by up to 47.1%. Work w/ Ananjan, Moussa, Dan Jurafsky, Tatsunori Hashimoto and Karen Livescu. Here’s how it works! 🧵

Just wrapped up #CS224N NLP with Deep Learning poster session with over 600 students and an amazing co-instructor Tatsunori Hashimoto 😊 Lots of exciting research and engaging discussions 🚀 Huge congratulations to all the students for their hard work, and a big thank you to our

Want to learn the engineering details of building state-of-the-art Large Language Models (LLMs)? Not finding much info in OpenAI’s non-technical reports? Percy Liang and Tatsunori Hashimoto are here to help with CS336: Language Modeling from Scratch, now rolling out to YouTube.

At #ICLR, check out Perplexity Correlations: a statistical framework to select the best pretraining data with no LLM training! I can’t make the trip, but Tatsunori Hashimoto will present the poster for us! Continue reading for the latest empirical validations of PPL Correlations:

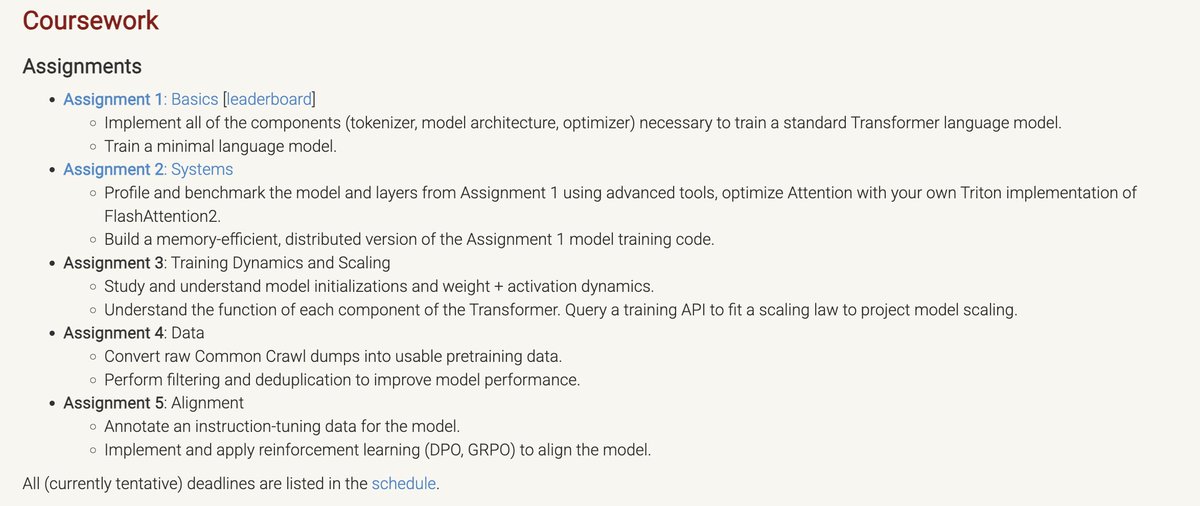

Designed some graphics for Stanford CS336 (Language Modeling from Scratch) by Percy Liang, Tatsunori Hashimoto, Marcel Rød, Neil Band, and Rohith Kuditipudi. Covering four assignments 📚 that teach you how to 🧑‍🍳 cook an LLM from scratch: - Build and Train a Tokenizer 🔤 - Write Triton kernels for

Wrapped up Stanford CS336 (Language Modeling from Scratch), taught with an amazing team: Tatsunori Hashimoto, Marcel Rød, Neil Band, and Rohith Kuditipudi. Researchers are becoming detached from the technical details of how LMs work. In CS336, we try to fix that by having students build everything: