Terry Yue Zhuo

@terryyuezhuo

@BigComProject (non-profit, looking for compute sponsorship) Lead. IBM PhD fellow (2024-2025). Vibe Coding: swe-arena.com

ID: 1266233448521297926

https://terryyz.github.io

29-05-2020 05:02:42

1.1K Tweets

1.1K Followers

621 Following

The NeurIPS Conference 2025 Datasets & Benchmarks track CFP is open! Good datasets are essential to progress in AI so I'm thrilled to be a co-chair this year and with my fellow colleagues share some improvements to the submission and review process that raise the bar for standards of

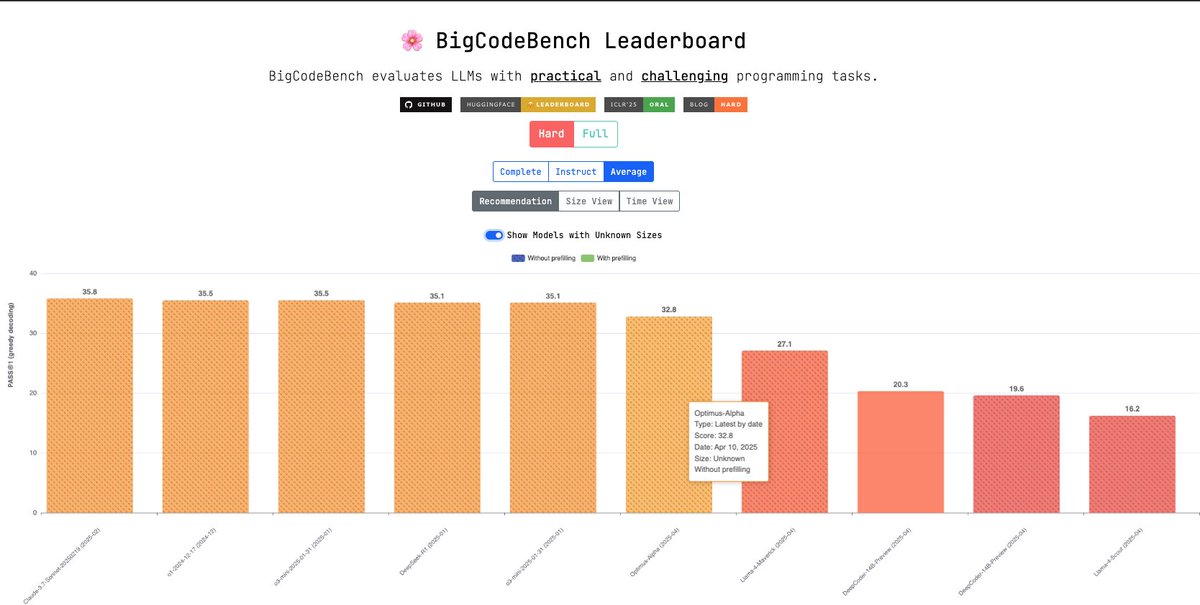

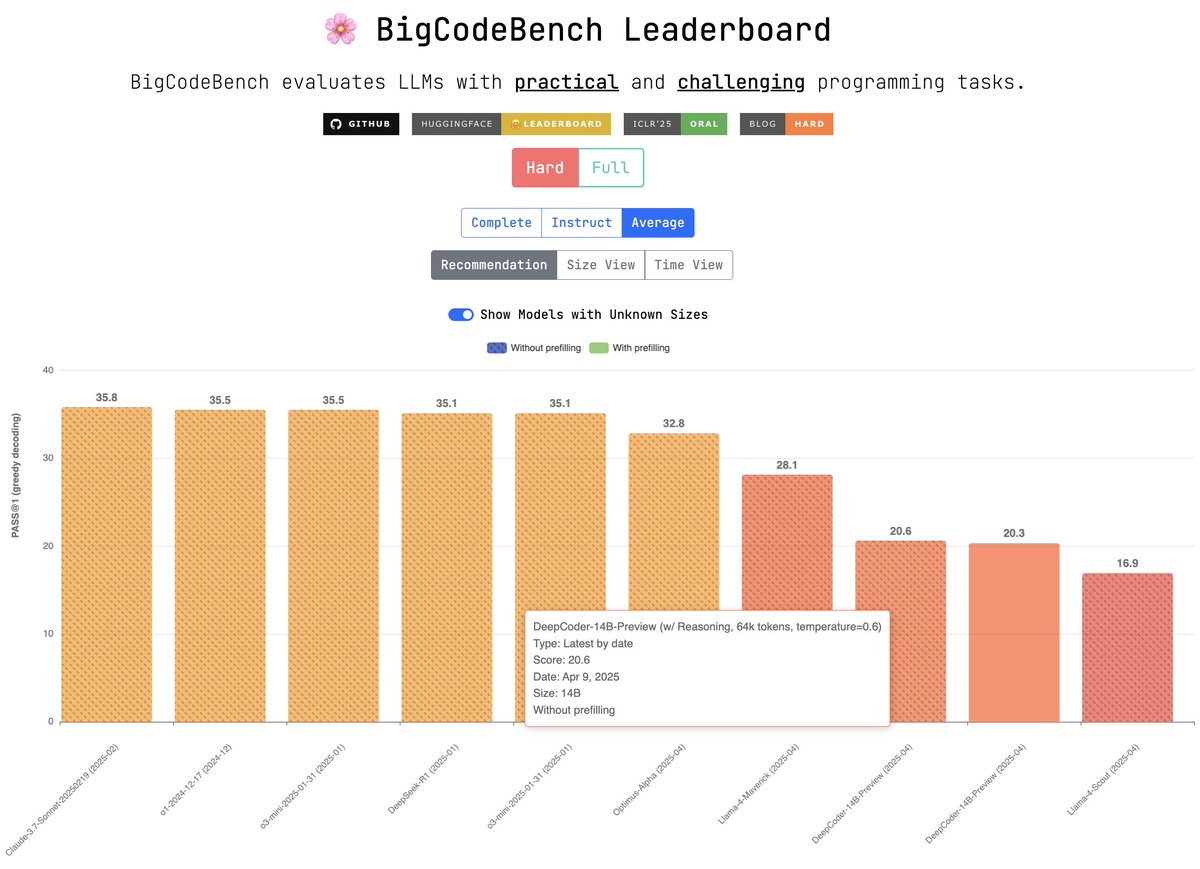

Quasar Alpha via the OpenRouter API on BigCodeBench-Hard (ranked 6th/195): 37.8% Complete, 31.8% Instruct, 34.8% Average