Tessa Barton

@tessybarton

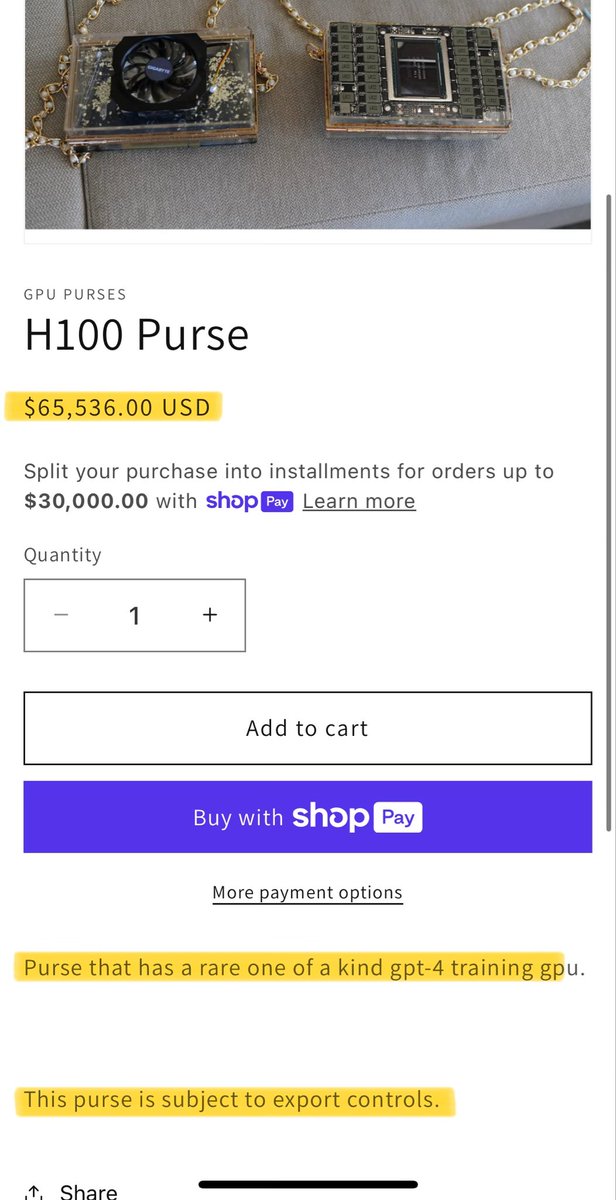

Inventor of GPU purse. AI Research Scientist. Prev: @MosaicML x @Databricks, @NYTimes.

ID: 384239260

http://gpupurse.com 03-10-2011 10:05:22

338 Tweets

3.3K Followers

1.1K Following

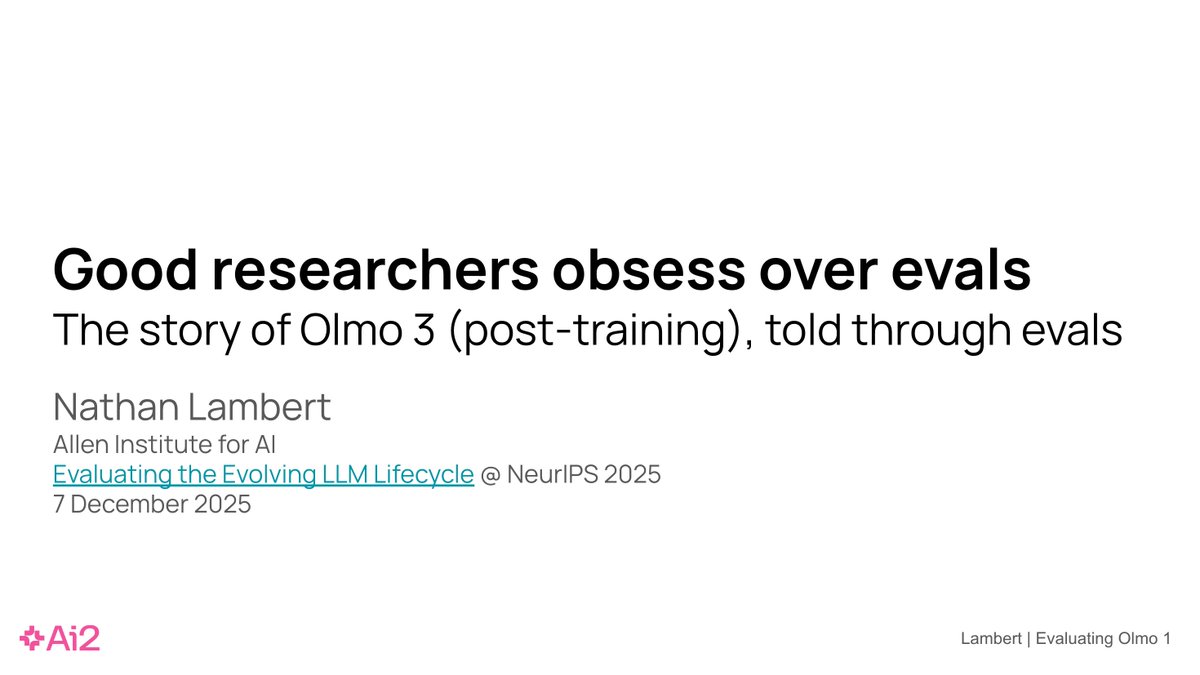

In all seriousness, it's really cool to see the gauntlet become a standard in evaluating base models. Tessa Barton, Jeremy Dohmann, Mansheej Paul, Abhi Venigalla, and I worked really hard thinking carefully about how to design aggregations that gave meaningful signal across model scales.

Astasia Myers IMO he was more so talking about the marginal returns on increasing compute and how to allocate compute. (Napkin math illustration, bear with me.) Scaling pretraining and scaling RL are not over, but the gains from scaling aren't addressing fundamental failures in models (he mainly

I'm giving two talks at NeurIPS tomorrow!

- iterative improvement of generative models, incl π0.6* (9:40 am, SPIGM workshop, with Yoonho Lee @NeurIPS)
- long-horizon memory & autonomy (10:30 am, EWM workshop)