Tsinghua KEG (THUDM)

@thukeg

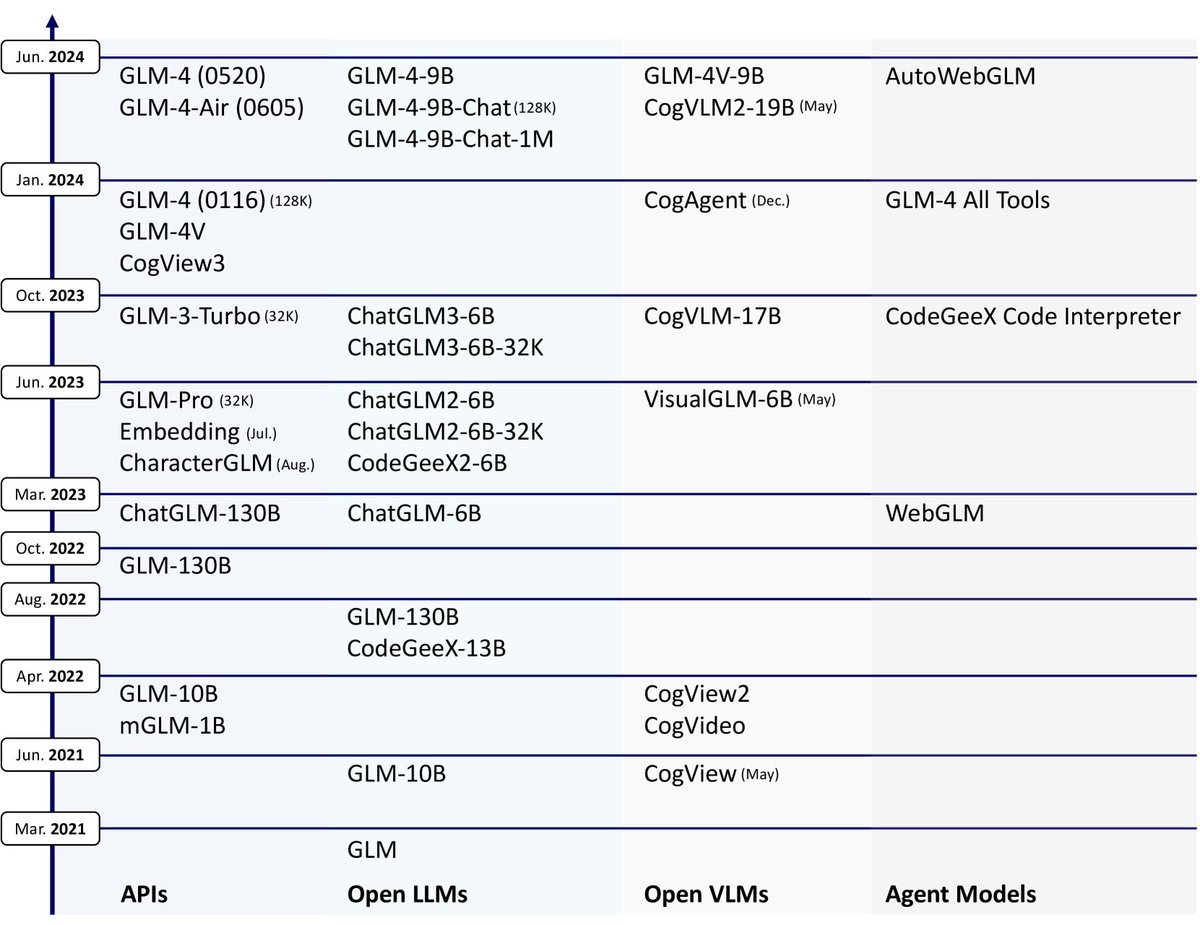

#ChatGLM #GLM130B #CodeGeeX #CogVLM #CogView #AMiner The Knowledge Engineering Group (KEG) and THUDM at @Tsinghua_Uni @jietang @ericdongyx

ID: 1544212427432022016

https://github.com/THUDM

05-07-2022 06:51:56

238 Tweets

4.4K Followers

170 Following

New from Tsinghua KEG (THUDM): "LongWriter: Unleashing 10,000+ Word Generation from Long Context LLMs". Author Yushi Bai@ACL 2025 is active in the discussion section to answer your questions: huggingface.co/papers/2408.07…

LongWriter-glm4-9b from Tsinghua KEG (THUDM) is capable of generating 10,000+ words at once!🚀 The paper identifies a problem with current long-context LLMs: they can process inputs of up to 100,000 tokens, yet struggle to generate outputs longer than 2,000 words. The paper proposes that an…

What has just happened? Tsinghua KEG (THUDM) has just released the CogVideoX image-to-video generation model. Amazing result. Combined demo of T2V, I2V, and V2V: huggingface.co/spaces/THUDM/C… Please duplicate the Space with an L4s to avoid the long waiting queue. Model: huggingface.co/THUDM/CogVideo…

#AutoGLM: Autonomous Foundation Agents for GUIs, by Xiao Liu (Shaw) and team at Tsinghua KEG (THUDM) & ChatGLM! Here are some demos of AutoGLM for phone use (in beta testing since Oct 25), along with its tech report: arxiv.org/abs/2411.00820