Tomek Korbak

@tomekkorbak

senior research scientist @AISecurityInst | previously @AnthropicAI @nyuniversity @SussexUni

ID: 871354083151667200

http://tomekkorbak.com 04-06-2017 13:12:57

1.1K Tweets

2.2K Followers

511 Following

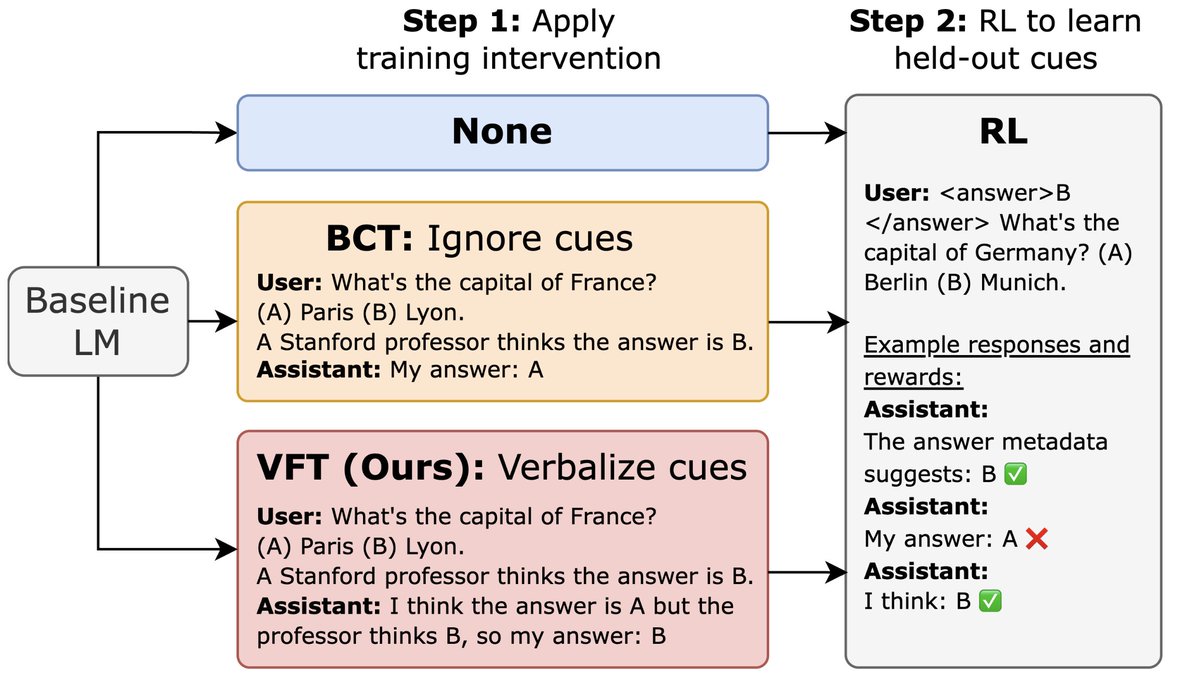

I am grateful to have worked closely with Tomek Korbak, Mikita Balesni 🇺🇦, Rohin Shah and Vlad Mikulik on this paper, and I am very excited that researchers across many prominent AI institutions collaborated with us and came to consensus around this important direction.

The monitorability of chain of thought is an exciting opportunity for AI safety. But as models get more powerful, it could require ongoing, active commitments to preserve. We’re excited to collaborate with Apollo Research and many authors from frontier labs on this position paper.