Tianqi Chen

@tqchenml

AssistProf @CarnegieMellon. Chief Technologist @OctoML. Creator of @XGBoostProject, @ApacheTVM. Member catalyst.cs.cmu.edu, @TheASF. Views are my own

ID: 3187990776

https://tqchen.com/ 07-05-2015 19:10:39

1.1K Tweets

17.17K Followers

1.1K Following

Databricks' Agent Bricks is powered by XGrammar for structured generation, and achieves high quality and efficiency. It helps you complete AI tasks without needing to worry about the algorithmic details. Give it a try!

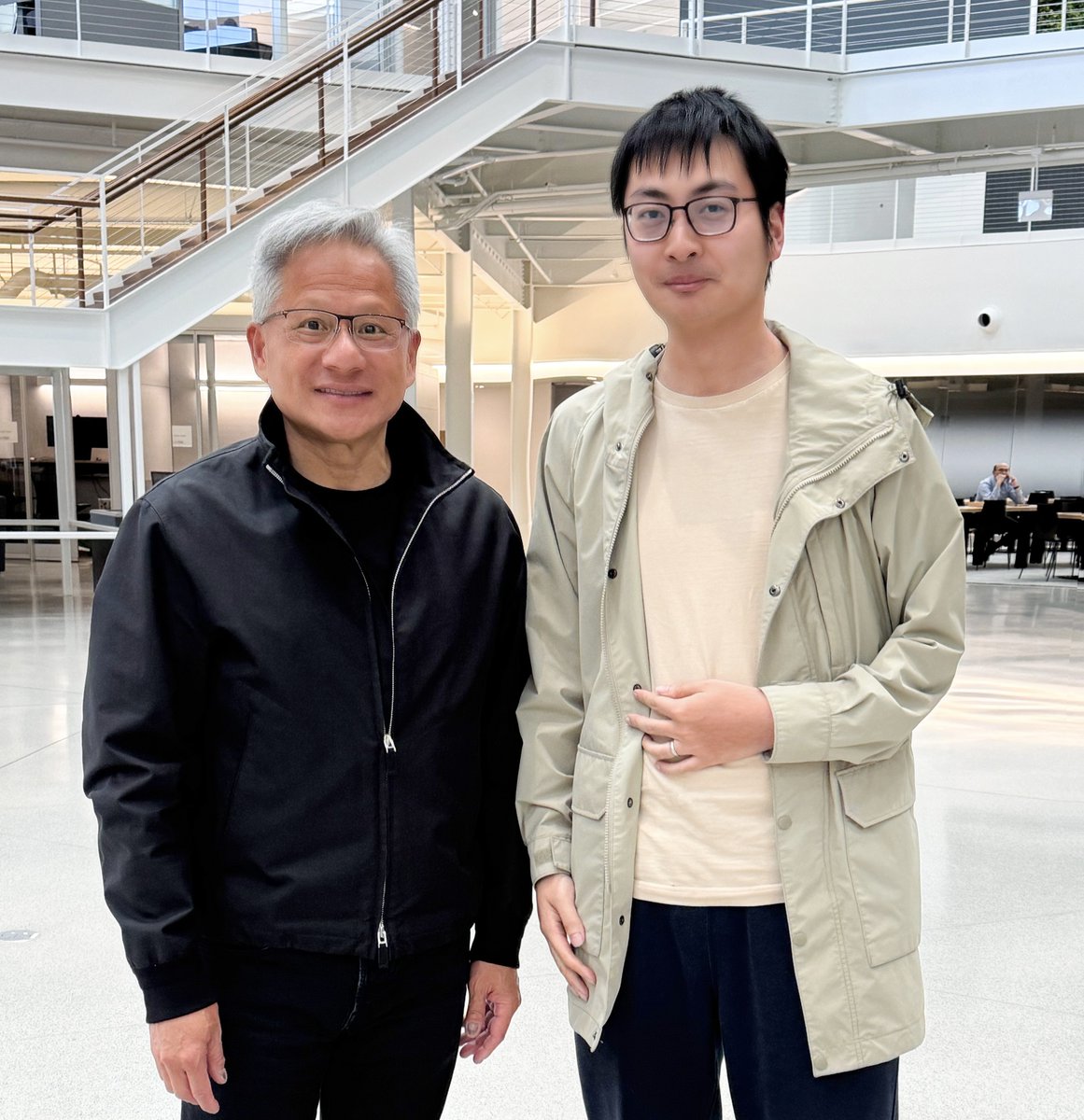

SGLang is an early user of FlashInfer and witnessed its rise as the de facto LLM inference kernel library. It won best paper at MLSys 2025, and Zihao now leads its development at NVIDIA AI Developer. SGLang’s GB200 NVL72 optimizations were made possible with strong support from the

#MLSys2026 will be led by general chair Luis Ceze and PC chairs Zhihao Jia and Aakanksha Chowdhery. The conference will be held in Bellevue on Seattle's east side. Consider submitting and bringing your latest work in AI and systems. More details at mlsys.org.